Computer modeling is essential for understanding how the brain creates and retains complex information, such as memories. But developing such models is a challenging task.

A symphony of biochemical and electrical signals and a tangle of connections between neurons and other cell types create the hardware for memories to take hold. However, putting the process into a computer model to analyze it further has proven difficult because neuroscientists are still learning about the biology of the brain.

Researchers at the Okinawa Institute of Science and Technology (OIST) have now modified the Hopfield network, a popular computer model of memory, drawing on biology to improve its performance. They found that the new network could store significantly more memories and that it reflects the wiring of neurons and other brain cells more accurately.

Hopfield networks store memories as patterns of weighted connections between neurons. The network is first “trained” to encode these patterns. Researchers then test its recall by presenting it with a series of blurry or incomplete patterns and checking whether it recognizes each one as a pattern it already knows.
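The train-then-recall procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration only, assuming the textbook Hebbian learning rule, units that take the values +1 or -1, and three hand-made toy patterns; the function names (`train_hebbian`, `recall`, `square_wave`) are hypothetical and are not taken from the OIST study.

```python
def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, cue, max_sweeps=10):
    """Update units one at a time until the state stops changing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i, row in enumerate(weights):
            # Weighted input from all other units decides the new value.
            field = sum(w * s for w, s in zip(row, state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


def square_wave(n, period):
    """Toy pattern: alternating blocks of +1 and -1, each `period` units long."""
    return [1 if (i // period) % 2 == 0 else -1 for i in range(n)]


# Three mutually orthogonal toy "memories" over 16 units (an assumption
# chosen to keep the example small and deterministic).
stored = [square_wave(16, 8), square_wave(16, 4), square_wave(16, 2)]
weights = train_hebbian(stored)

# Corrupt the first memory by flipping two units, then let the network
# settle back toward the pattern it was trained on.
cue = list(stored[0])
cue[3] = -cue[3]
cue[12] = -cue[12]
restored = recall(weights, cue)
print(restored == stored[0])
```

Every connection here links exactly two units, which is the “pairwise” restriction of the classical model.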

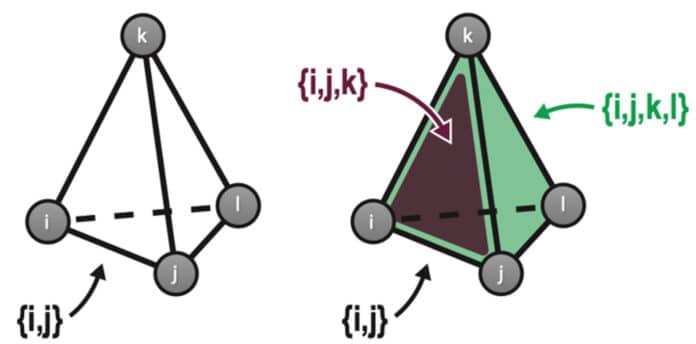

In classical Hopfield networks, however, each neuron in the model makes only “pairwise” connections with other neurons. A pairwise connection represents two neurons meeting at a synapse, the junction between them. The brain relies on a far more complicated network of synapses to carry out cognitive functions, because neurons have complex branches called dendrites that offer many points of connection. Astrocytes, a different class of brain cell, also influence the connections between neurons.

Thomas Burns, a Ph.D. student in the group of Professor Tomoki Fukai, who heads OIST’s Neural Coding and Brain Computing Unit, said, “It’s simply not realistic that only pairwise connections between neurons exist in the brain.”

Mr. Burns created a modified Hopfield network in which not just pairs of neurons but sets of three, four, or more neurons could link up too, such as might occur in the brain through astrocytes and dendritic trees.

Although it allowed these so-called “set-wise” connections, the new network had the same total number of connections as the original. The team found that a network with a mix of pairwise and set-wise connections performed best and retained the most memories. They estimate that it performs at least twice as well as a conventional Hopfield network.
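To make the idea of set-wise connections concrete, here is a hedged Python sketch that mixes pairs and triples of units while keeping the total number of connections equal to that of a pairwise-only network. It is not the authors' implementation. The weight rule (a Hebbian-style product over each group's members), the random choice of groups, the 50/50 split, and all function names are assumptions made for illustration.

```python
import random
from itertools import combinations
from math import comb, prod


def choose_connections(n, pair_fraction, seed=0):
    """Sample pairs and triples; the total equals the n-choose-2 pairwise budget."""
    rng = random.Random(seed)
    budget = comb(n, 2)
    n_pairs = round(pair_fraction * budget)
    pairs = rng.sample(list(combinations(range(n), 2)), n_pairs)
    triples = rng.sample(list(combinations(range(n), 3)), budget - n_pairs)
    return pairs + triples


def train(patterns, connections):
    """One weight per group: summed product of its members' values, scaled by 1/n."""
    n = len(patterns[0])
    return {
        group: sum(prod(p[i] for i in group) for p in patterns) / n
        for group in connections
    }


def energy(weights, state):
    """Lower energy means the state agrees better with the stored patterns."""
    return -sum(w * prod(state[i] for i in group) for group, w in weights.items())


def recall(weights, cue, max_sweeps=10):
    """Update units one at a time; each update can only keep or lower the energy."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            # Each group containing unit i contributes its weight times the
            # product of the other members' current values.
            field = sum(
                w * prod(state[j] for j in group if j != i)
                for group, w in weights.items()
                if i in group
            )
            new_value = 1 if field > 0 else -1 if field < 0 else state[i]
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


n = 16
connections = choose_connections(n, pair_fraction=0.5)  # half pairs, half triples
pattern_rng = random.Random(1)
stored = [[pattern_rng.choice([-1, 1]) for _ in range(n)] for _ in range(3)]
weights = train(stored, connections)

cue = list(stored[0])
cue[0] = -cue[0]  # corrupt one unit of the first memory
restored = recall(weights, cue)
overlap = sum(a * b for a, b in zip(restored, stored[0])) / n
print(len(connections), comb(n, 2), overlap)
```

Setting `pair_fraction=1.0` reduces this sketch to a pairwise-only network with the same budget, which gives a simple baseline for comparing the two wirings on the same patterns.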

Mr. Burns said, “You need a combination of features in some balance. You should have individual synapses, but you should also have some dendritic trees and some astrocytes.”

The team is planning to continue working with their modified Hopfield networks to make them still more powerful. Also, Mr. Burns would like to explore ways of making the network’s memories interact with each other the way they do in the human brain.

Mr. Burns said, “Our memories are multifaceted and vast. We still have a lot to uncover.”

Journal Reference:

- Thomas F Burns, Tomoki Fukai. Simplicial Hopfield networks. ICLR 2023 poster.