We have heard a lot about robot pizza delivery, but what if an autonomous machine could cook your pizza as well? Good cooking takes patience, time, practice, and skill. So is it possible for a robot or a machine to do what professional chefs take years to perfect?

Researchers at the Massachusetts Institute of Technology (MIT) are trying to make it possible. They have developed a neural network that learns how to make pizza from pictures. The network, PizzaGAN, was built by researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Qatar Computing Research Institute (QCRI).

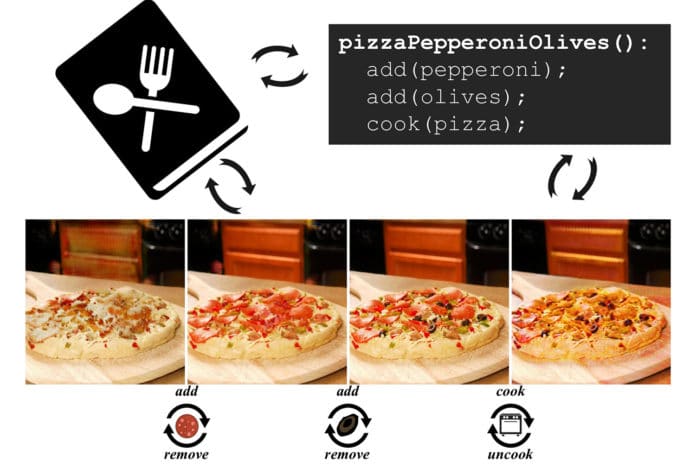

The project aims to teach a machine how to make a pizza by building a generative model that mirrors the step-by-step procedure of making one.

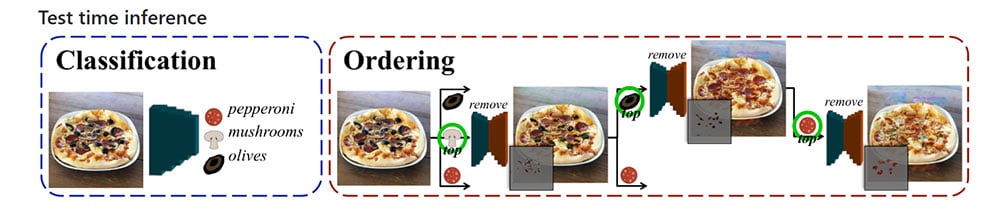

The neural network can look at an image of a pizza, determine the type and distribution of its ingredients, and figure out the correct order in which to layer them before cooking. It learns what a pizza should look like from start to finish.

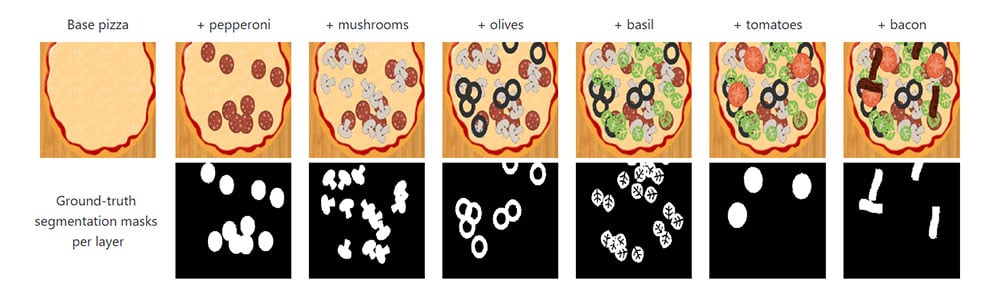

Because a pizza is made of layers, the researchers decided to teach the machine to recognize the different steps in cooking by splitting the image of a pizza into its individual ingredients. A plain pizza looks one way, and each topping or ingredient added on top visibly changes its overall appearance. It was therefore essential to feed the neural network images that reflect the correct sequence of steps.
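To make the layer idea concrete, here is a toy sketch in Python. It is an illustration under simple assumptions, not PizzaGAN's code: a pizza is modeled as an ordered stack of layers on a small grid, each cell shows the top-most layer covering it, and adding or removing a topping changes what is visible. The names `render`, `add_topping`, and `remove_topping` are hypothetical.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]          # (row, column) on a small grid
Layer = Tuple[str, Set[Cell]]   # (ingredient name, cells it covers)


def render(layers: List[Layer], width: int, height: int) -> List[List[str]]:
    """Return, for every cell, the name of the top-most layer covering it."""
    image = [["empty"] * width for _ in range(height)]
    for name, cells in layers:  # layers are listed bottom first, so later ones paint over earlier ones
        for row, col in cells:
            image[row][col] = name
    return image


def add_topping(layers: List[Layer], name: str, cells: Set[Cell]) -> List[Layer]:
    """Return a new stack with `name` placed on top."""
    return layers + [(name, cells)]


def remove_topping(layers: List[Layer], name: str) -> List[Layer]:
    """Return a new stack without `name`; whatever it hid becomes visible again."""
    return [layer for layer in layers if layer[0] != name]


if __name__ == "__main__":
    whole = {(r, c) for r in range(3) for c in range(3)}
    pizza = [("dough", whole), ("cheese", whole - {(0, 0)})]  # cheese leaves one corner bare
    pizza = add_topping(pizza, "pepperoni", {(1, 1)})
    print(render(pizza, 3, 3))
    print(render(remove_topping(pizza, "pepperoni"), 3, 3))
```

Removing the pepperoni layer reveals the cheese underneath it, which is the kind of add/remove behavior the researchers wanted their model to learn from images, rather than from an explicit grid like this one.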

To do that, the researchers first created a dataset of roughly 5,500 synthetic pizza images drawn in a clip-art style. The team then fed the machine an additional 9,213 real pizza images collected from the web.

The dataset also covers 12 toppings, including arugula, bacon, broccoli, corn, basil, mushrooms, and olives.

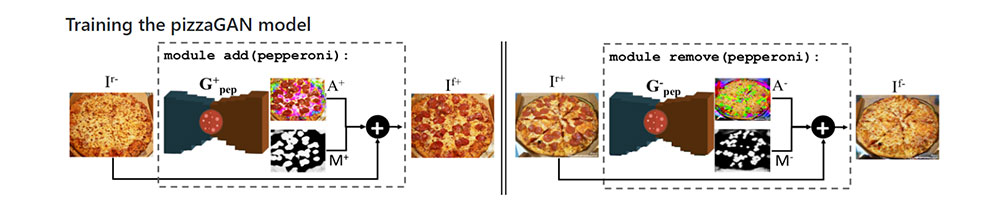

PizzaGAN first learns how to add and remove individual ingredients, producing a synthesized image for each operation. It then detects which toppings appear in an image and predicts the order in which they were added during cooking by estimating their depth, that is, which layers lie on top of which.
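How PizzaGAN estimates depth is described in the researchers' paper and is not reproduced here. As a toy illustration of the final step only, assume a hypothetical detector has already reported, for some pairs of ingredients, which one lies on top of the other. Turning those pairwise relations into a full bottom-to-top order, and from there into numbered steps, is a topological sort; the sketch below uses Kahn's algorithm. The function names `layer_order` and `recipe` are illustrative, not part of PizzaGAN.

```python
import heapq
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def layer_order(ingredients: Iterable[str], on_top_of: Iterable[Tuple[str, str]]) -> List[str]:
    """Order ingredients bottom-to-top using Kahn's topological sort.

    `on_top_of` holds hypothetical (upper, lower) pairs: ("olives", "cheese")
    means olives cover cheese, so cheese must be added first.
    Ties are broken alphabetically so the result is deterministic.
    """
    pairs = list(on_top_of)
    nodes = set(ingredients)
    for upper, lower in pairs:
        nodes.update((upper, lower))

    uppers: Dict[str, List[str]] = defaultdict(list)   # lower -> layers that sit on it
    below_count = {node: 0 for node in nodes}          # layers that must go down first
    for upper, lower in pairs:
        uppers[lower].append(upper)
        below_count[upper] += 1

    ready = [node for node in nodes if below_count[node] == 0]
    heapq.heapify(ready)
    order: List[str] = []
    while ready:
        current = heapq.heappop(ready)
        order.append(current)
        for upper in uppers[current]:
            below_count[upper] -= 1
            if below_count[upper] == 0:
                heapq.heappush(ready, upper)

    if len(order) != len(nodes):
        raise ValueError("contradictory layering relations (cycle detected)")
    return order


def recipe(order: List[str]) -> List[str]:
    """Turn a bottom-to-top order into numbered cooking steps."""
    return [f"Step {i}: add {item}" for i, item in enumerate(order, start=1)]


if __name__ == "__main__":
    ingredients = ["dough", "sauce", "cheese", "olives", "basil"]
    relations = [("sauce", "dough"), ("cheese", "sauce"),
                 ("olives", "cheese"), ("basil", "cheese")]
    for step in recipe(layer_order(ingredients, relations)):
        print(step)
```

In this example, olives and basil both sit directly on the cheese and nothing orders them relative to each other, so either sequence is consistent; the alphabetical tie-break simply picks one. A real system would have to resolve such ambiguities from the image itself.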

From there, PizzaGAN can predict a step-by-step guide to making a pizza from a single input image.

The system's results were fairly accurate. In their paper, the researchers note that it is better at ordering synthetic pizza images than real ones, and they report that the method predicts the correct ordering 88% of the time.

“Though we have evaluated our model only in the context of pizza, we believe that a similar approach is promising for other types of foods that are naturally layered such as burgers, sandwiches, and salads. Beyond food, it will be interesting to see how our model performs on domains such as digital fashion shopping assistants, where a key operation is the virtual combination of different layers of clothes,” the team wrote in the paper.

Well, I know what you must be imagining now. But no, it doesn’t actually make a pizza you can eat, at least not yet. It will take a while to teach robots to prepare and cook pizza entirely on their own. Still, this research could be a step toward that future, and the approach could also prove useful for burgers and other layered dishes.