People who lose the ability to communicate because of brain damage, stroke, or other neurological conditions now have hope of regaining a voice, thanks to a new technology that harnesses brain activity to produce synthesized speech.

Scientists at the University of California, San Francisco have created a brain-machine interface that transforms brain signals into speech by controlling a virtual vocal tract, including the lips, jaw, tongue, and larynx.

The device doesn’t try to read thoughts. Instead, it uses machine learning to pick up on individual nerve commands, then translates those into movements of a virtual vocal tract that approximates the intended output.

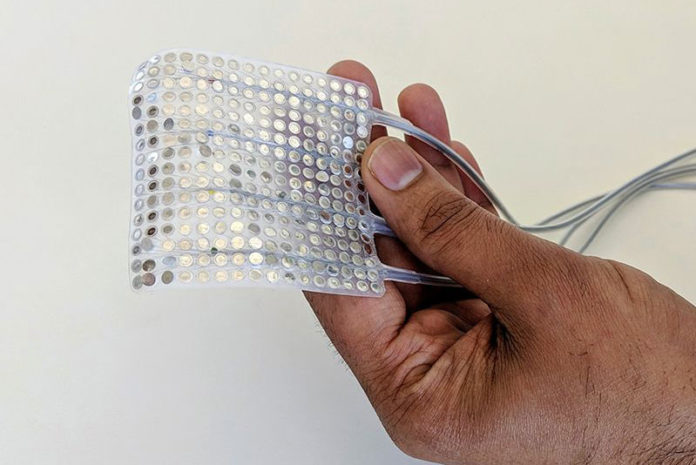

The research was led by neurosurgeon Edward Chang at the University of California, San Francisco. His team worked with five people who had electrodes, called an electrocorticography (ECoG) array, implanted on the brain’s surface as part of epilepsy treatment.

They first recorded brain activity as each participant read hundreds of sentences aloud. Then Chang and his team combined these recordings with data from previous experiments that determined how movements of the tongue, lips, jaw, and larynx create sound.

After that, the team trained a deep-learning algorithm on these data and incorporated the program into their decoder. The device transforms brain signals into synthetic speech by estimating the movements of the vocal tract. People who listened to 101 synthesized sentences could understand 70% of the words on average, Chang says.
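To make the "70% of the words" figure concrete, here is a minimal Python sketch of one way a word-level intelligibility score could be computed from listener transcripts. It is an illustration only, not the scoring procedure the researchers used: the function names `words_understood` and `mean_intelligibility` and the example sentences are hypothetical, and the matching rule (words counted as understood if they appear in the same order in the listener's transcript) is an assumption.

```python
from difflib import SequenceMatcher


def words_understood(reference: str, transcript: str) -> float:
    """Fraction of reference words that a listener reproduced, in order.

    Hypothetical scoring rule for illustration; not the study's metric.
    """
    ref = reference.lower().split()
    hyp = transcript.lower().split()
    if not ref:
        return 0.0
    # Align the two word sequences and count the words that match in order.
    matcher = SequenceMatcher(a=ref, b=hyp, autojunk=False)
    matched = sum(block.size for block in matcher.get_matching_blocks())
    return matched / len(ref)


def mean_intelligibility(pairs) -> float:
    """Average the per-sentence scores over (reference, transcript) pairs."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one (reference, transcript) pair")
    scores = [words_understood(ref, hyp) for ref, hyp in pairs]
    return sum(scores) / len(scores)


# Made-up example data: one sentence heard perfectly, one with a misheard word.
example_pairs = [
    ("the ship sailed at dawn", "the ship sailed at dawn"),
    ("the ship sailed at dawn", "the sheep sailed at dawn"),
]
average = mean_intelligibility(example_pairs)
```

With the made-up pairs above, the listener gets four of five words right in the second sentence, so the average lands between a perfect score and that one. A real evaluation would average over many listeners and sentences in the same way.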

In another experiment, the researchers asked one participant to read sentences aloud and then to mime the same sentences by moving their mouth without producing sound. The sentences synthesized in this test were of lower quality than those created from audible speech, Chang says, but the results are still encouraging.

However, the technology is not yet accurate enough for use outside the lab, even though it can synthesize sentences that are mostly intelligible.

“We are tapping into the parts of the brain that control these movements—we are trying to decode movements, rather than speaking directly,” says Chang.

Marc Slutzky, a neurologist at Northwestern University in Chicago, says, “The study is a really important step, but there’s still a long way to go before synthesized speech is easily intelligible.”