A neuron (also called a nerve cell) is an electrically excitable cell that receives, processes and transmits information through electrical and chemical signals. It is one of the basic components of the nervous system.

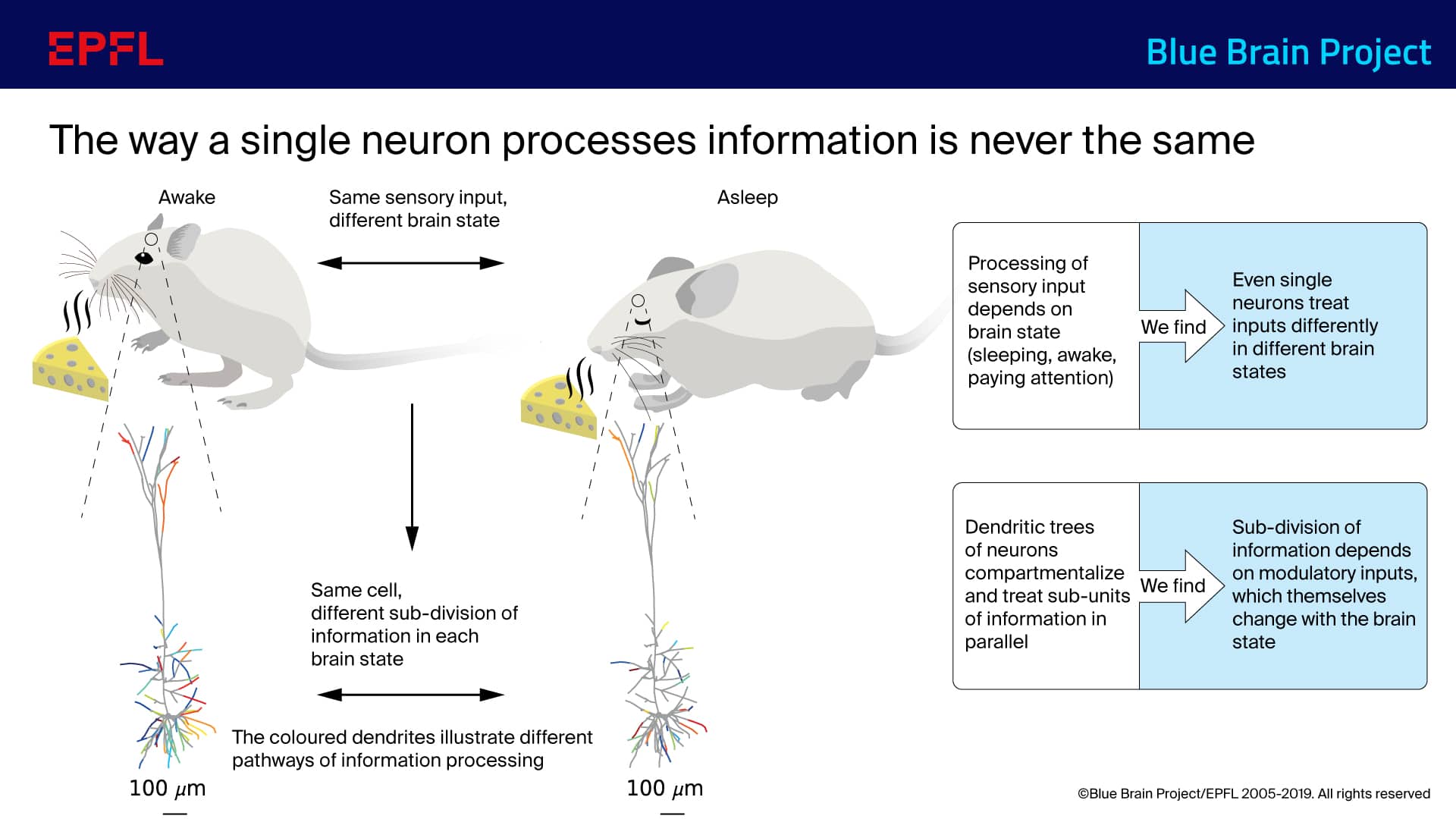

The dendritic tree of neurons plays an important role in information processing in the brain. Dendrites are thought to need independent subunits to perform most of their computations, but it is still not understood how they compartmentalize into functional subunits.

In a new study, scientists at EPFL’s Blue Brain Project, a Swiss brain research initiative, show how these subunits can be inferred from the properties of dendrites. They have developed a new framework to work out how a single neuron in the brain operates.

The study was performed using cells from Blue Brain’s virtual rodent cortex. The scientists expect other types of neurons, whether non-cortical or human, to work in a similar way.

Their results show that when a neuron receives input, the branches of the tree-like receptors extending from the neuron, known as dendrites, functionally cooperate in a way that adapts to the complexity of the input.

The strength of a synapse determines how strongly a neuron feels an electrical signal coming from other neurons, and learning changes this strength.

By analyzing the connectivity matrix that determines how these synapses communicate with one another, the algorithm establishes, from the structural and electrical properties of dendrites, when and where synapses group into independent learning units.
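To make the idea of synapses "grouping into independent units" more concrete, here is a minimal Python sketch. It is not the study's algorithm. It assumes a hypothetical symmetric coupling matrix whose entries (between 0 and 1) describe how strongly a voltage change at one synapse site is felt at another, and it places two sites in the same unit when their coupling reaches a chosen threshold, either directly or through a chain of such sites. The matrix values, the threshold, and the helper `scale_off_diagonal` (a crude, hypothetical stand-in for stronger background input weakening cross-site coupling) are all illustrative assumptions.

```python
from typing import Dict, List

Matrix = List[List[float]]

# Hypothetical coupling between four synapse sites (illustrative values only).
EXAMPLE_COUPLING: Matrix = [
    [1.0, 0.8, 0.2, 0.1],
    [0.8, 1.0, 0.3, 0.1],
    [0.2, 0.3, 1.0, 0.7],
    [0.1, 0.1, 0.7, 1.0],
]


def functional_units(coupling: Matrix, threshold: float) -> List[List[int]]:
    """Group sites i, j into one unit when coupling[i][j] >= threshold,
    directly or via a chain of strongly coupled sites (union-find)."""
    n = len(coupling)
    parent = list(range(n))

    def find(i: int) -> int:
        # Follow parent pointers to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if coupling[i][j] >= threshold:
                parent[find(i)] = find(j)  # merge the two units

    groups: Dict[int, List[int]] = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


def scale_off_diagonal(coupling: Matrix, factor: float) -> Matrix:
    """Hypothetical stand-in for background input: weaken all cross-site coupling."""
    return [
        [value if i == j else value * factor for j, value in enumerate(row)]
        for i, row in enumerate(coupling)
    ]


if __name__ == "__main__":
    print(functional_units(EXAMPLE_COUPLING, 0.5))
    print(functional_units(scale_off_diagonal(EXAMPLE_COUPLING, 0.5), 0.5))
```

With the example matrix and a threshold of 0.5, the sketch yields two units, `[0, 1]` and `[2, 3]`; halving the cross-site coupling leaves every site as its own unit, a toy version of the idea that the number of units can change with the neuron's input conditions. Union-find is used simply because it picks up chained groupings efficiently.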

The new algorithm determines how the dendrites of neurons functionally split into separate computing units, and the researchers found that these units work together dynamically, depending on the workload, to process information.

The scientists compare their results to the way computing technology already in use today works. This newly observed dendritic functionality acts like parallel computing units, meaning that a neuron can process different parts of its input in parallel, much like a supercomputer.

Each of the parallel computing units can independently learn to adjust its output, much like the nodes in the deep learning networks used in today's AI models. Similar to cloud computing, a neuron dynamically splits into as many separate computing units as the workload of the input demands.

Marc-Oliver Gewaltig, Section Manager in Blue Brain’s Simulation Neuroscience Division, said, “In the Blue Brain Project, this mathematical approach helps to ascertain functionally relevant clusters of neuronal input which are inputs that feed into the same parallel processing unit. This then enables us to determine the level of complexity at which to model cortical networks as we digitally reconstruct and simulate the brain.”

The study also reveals how these parallel processing units influence learning, i.e., the change in connection strength between different neurons. How a neuron learns depends on the number and location of its parallel processors, which in turn depend on the signals arriving from other neurons. For example, certain synapses that do not learn independently when the neuron’s input level is low begin to learn independently when input levels are higher.

Lead scientist and first author Willem Wybo said, “The method finds that in many brain states, neurons have far fewer parallel processors than expected from dendritic branch patterns. Thus, many synapses appear to be in ‘grey zones’ where they do not belong to any processing unit. However, in the brain, neurons receive varying levels of background input, and our results show that the number of parallel processors varies with the level of background input, indicating that the same neuron might have different computational roles in different brain states.”

“We are particularly excited about this observation since it sheds new light on the role of up/down states in the brain, and it also provides a reason as to why cortical inhibition is so location-specific. With the new insights, we can start looking for algorithms that exploit the rapid changes in pairing between processing units, offering us more insight into the fundamental question of how the brain computes.”

The study is published in the journal Cell Reports.