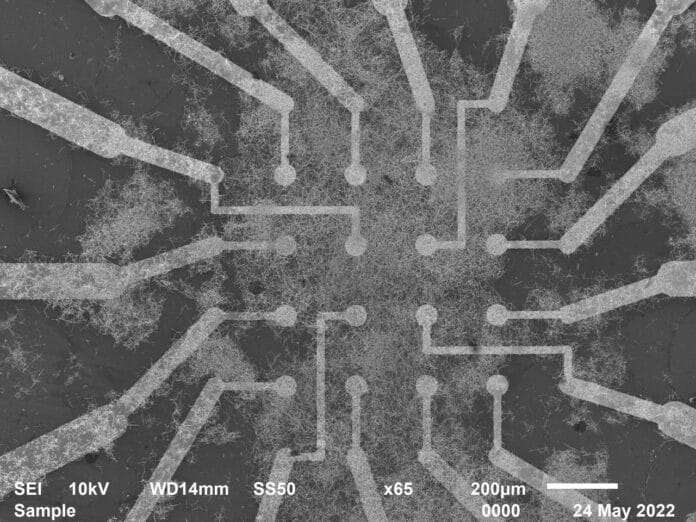

Nanowire networks are made of tiny wires only billionths of a meter in diameter. The wires arrange themselves into patterns reminiscent of the children’s game “Pick Up Sticks,” and the resulting networks resemble the neural networks in our brains. These networks can carry out specialized information-processing tasks.

To perform memory and learning tasks, simple algorithms respond to changes in electrical resistance at the junctions where nanowires cross. This property, called “resistive memory switching,” arises because electrical inputs change the conductivity of those junctions, similar to how synapses in the brain behave.
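To make the idea of resistive memory switching concrete, here is a toy model of a single junction whose conductance depends on the history of the voltage applied to it. This is a simplified illustration, not the researchers’ model: the state variable, the threshold `v_set`, the growth and decay rates, and the on/off conductances are all hypothetical values chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Junction:
    """Toy nanowire junction (illustrative assumptions, not the published model)."""
    state: float = 0.0   # hypothetical filament growth, kept in [0, 1]
    v_set: float = 0.5   # hypothetical threshold voltage (volts) for growth
    rate: float = 1.0    # hypothetical growth rate above threshold
    decay: float = 0.1   # hypothetical relaxation rate below threshold
    g_off: float = 1e-6  # hypothetical low conductance (siemens)
    g_on: float = 1e-3   # hypothetical high conductance (siemens)

    def step(self, voltage: float, dt: float) -> float:
        """Advance the junction by dt under an applied voltage; return its conductance."""
        drive = abs(voltage) - self.v_set
        if drive > 0:
            # Above threshold the filament grows, so the junction conducts better.
            self.state += self.rate * drive * dt
        else:
            # Below threshold the filament slowly relaxes, so the junction "forgets".
            self.state -= self.decay * self.state * dt
        self.state = min(max(self.state, 0.0), 1.0)
        return self.conductance()

    def conductance(self) -> float:
        # Interpolate linearly between the off and on conductances.
        return self.g_off + (self.g_on - self.g_off) * self.state


j = Junction()
for _ in range(10):
    g_after_pulses = j.step(1.0, 0.1)  # repeated 1 V pulses strengthen the junction
for _ in range(10):
    g_after_rest = j.step(0.0, 0.1)    # with no input the junction partly relaxes
```

The same input produces a different response depending on what the junction has already experienced, which is the “memory” that the learning algorithms draw on.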

For the first time, a physical neural network has successfully been shown to learn and remember ‘on the fly’ in a way inspired by and similar to how the brain’s neurons work. The research, by scientists at the University of Sydney and the University of California at Los Angeles, could lead to the development of efficient and low-energy machine intelligence for more complex, real-world learning and memory tasks.

The scientists tested the network’s ability to identify and recall sequences of electrical pulses corresponding to images, an approach inspired by how the human brain processes information.

Supervising researcher Professor Zdenka Kuncic said, “The memory task was similar to remembering a phone number. The network was also used to perform a benchmark image recognition task, accessing images in the MNIST database of handwritten digits, a collection of 70,000 small greyscale images used in machine learning.”

“Our previous research established the ability of nanowire networks to remember simple tasks. This work has extended these findings by showing tasks can be performed using dynamic data accessed online.”

This is a significant advance because online learning is difficult to achieve when handling large volumes of frequently changing data. The conventional approach is to store the data in memory and then train a machine-learning model on the stored data, but this consumes too much energy to be practical for widespread use.
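The difference between online learning and the store-then-train approach can be sketched in a few lines. In this hypothetical example, a linear model is updated after each incoming sample and the sample is then discarded, so only the weights are kept in memory. The update rule used here (least mean squares) and the synthetic data stream are illustrative choices; the article does not say which learning rule the researchers used.

```python
import random


def lms_update(weights, features, target, lr):
    """One online least-mean-squares step: predict, measure the error, nudge the weights."""
    prediction = sum(w * x for w, x in zip(weights, features))
    error = target - prediction
    new_weights = [w + lr * error * x for w, x in zip(weights, features)]
    return new_weights, error


def stream(n, true_weights, seed=0):
    """Hypothetical data stream yielding one (features, target) pair at a time."""
    rng = random.Random(seed)
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in true_weights]
        y = sum(w * xi for w, xi in zip(true_weights, x))
        yield x, y


true_w = [2.0, -1.0, 0.5]
w = [0.0, 0.0, 0.0]
for x, y in stream(5000, true_w):
    # Each sample is used once and never stored: memory use stays constant.
    w, _ = lms_update(w, x, y, lr=0.05)
```

A batch learner would instead need to hold all 5,000 samples before training; the online learner’s memory footprint does not grow with the length of the stream.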

The nanowire neural network correctly identified 93.4 percent of test images, a benchmark machine-learning result. The memory task required the network to recall sequences of up to eight digits. For both tasks, data were streamed into the network to demonstrate its capacity for online learning and to show how memory enhances that learning.

Journal Reference:

- Zhu, Lilak, Loeffler, et al. Online dynamical learning and sequence memory with neuromorphic nanowire networks. Nature Communications. DOI: 10.1038/s41467-023-42470-5