Science writers are sometimes known as scientific journalists. They report on scientific news for the media, sometimes taking on a more investigative, critical role. Before writing, they first have to figure out how to explain the content so that readers without a scientific background can understand it.

Now, scientists at MIT and elsewhere have developed a neural network that can help science writers, at least to a limited extent. The AI can read scientific papers and render a plain-English summary in a sentence or two.

Beyond summarization, the approach could also be useful in machine translation and speech recognition. The researchers were originally developing a way of using AI to tackle certain thorny problems in physics, but they later realized that the same approach could address other difficult computational problems, including natural language processing, in ways that might outperform existing neural network systems.

Marin Soljačić, a professor of physics at MIT, said, “We have been doing various kinds of work in AI for a few years now. We use AI to help with our research, basically to do physics better. And as we got to be more familiar with AI, we would notice that every once in a while there is an opportunity to add to the field of AI because of something that we know from physics: a certain mathematical construct or a certain law in physics. We noticed that, hey, if we use that, it could actually help with this or that particular AI algorithm.”

“This approach could be useful in a variety of specific kinds of tasks, but not all. We can’t say this is useful for all of AI, but there are instances where we can use insight from physics to improve on a given AI algorithm.”

Neural networks generally have difficulty correlating information across a long string of data, such as is required in interpreting a research paper. Various tricks have been used to improve this capability, including techniques known as long short-term memory (LSTM) and gated recurrent units (GRU), but these still fall well short of what's needed for real natural-language processing, the researchers say.

The team came up with an alternative system which, instead of being based on the multiplication of matrices as most conventional neural networks are, is based on vectors rotating in a multidimensional space. The key concept is something they call a rotational unit of memory (RUM).
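The difference between multiplying by a matrix and rotating can be seen in a toy example. A commonly cited motivation for rotation-based (orthogonal) updates in recurrent networks is that a rotation never changes a vector's length, whereas repeatedly multiplying by a generic matrix can shrink or inflate it over many steps, which is one reason information from early in a long sequence is hard to preserve. The sketch below is an illustration under stated assumptions, not the RUM model itself: the 2×2 matrices, the angle `theta`, and the helper names `matvec`, `norm`, and `repeat` are all hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def norm(v: Vector) -> float:
    """Euclidean length of a vector."""
    return math.sqrt(sum(a * a for a in v))

def repeat(m: Matrix, v: Vector, steps: int) -> Vector:
    """Apply the same matrix to v `steps` times, like one update per word."""
    for _ in range(steps):
        v = matvec(m, v)
    return v

theta = 0.3  # hypothetical rotation angle, in radians
rotation: Matrix = [[math.cos(theta), -math.sin(theta)],
                    [math.sin(theta),  math.cos(theta)]]

# Hypothetical generic weight matrix; its singular values are both below 1,
# so each multiplication can only shrink the vector.
shrinking: Matrix = [[0.9, 0.1],
                     [0.0, 0.8]]

start = [1.0, 0.0]
print(norm(repeat(rotation, start, 100)))   # stays at 1.0, up to rounding
print(norm(repeat(shrinking, start, 100)))  # decays toward 0
```

After 100 steps the rotated vector still has length 1 and has merely changed direction, while the vector multiplied by the shrinking matrix has all but vanished.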

Essentially, the system represents each word in the text by a vector in multidimensional space: a line of a certain length pointing in a particular direction. Each subsequent word swings this vector in some direction, represented in a theoretical space that can ultimately have thousands of dimensions. At the end of the process, the final vector or set of vectors is translated back into its corresponding string of words.
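The word-by-word process can be sketched in a few lines of Python. This is a toy illustration, not the published RUM update, which uses learned parameters and additional machinery: here each word has a hand-picked, hypothetical embedding, and each word swings a unit-length state vector part of the way toward that embedding by rotating it within the plane the two vectors span. The names `rotate_toward`, `encode`, `EMBEDDINGS`, the `fraction` parameter, and the four-dimensional space are all assumptions made for illustration.

```python
import math
from typing import Dict, List

Vector = List[float]

def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))

def norm(x: Vector) -> float:
    return math.sqrt(dot(x, x))

def rotate_toward(h: Vector, target: Vector, fraction: float) -> Vector:
    """Rotate h in the plane spanned by h and target by `fraction` of the
    angle between them. The length of h is preserved."""
    h_len = norm(h)
    u1 = [a / h_len for a in h]                     # unit vector along h
    proj = dot(u1, target)                          # component of target along h
    v = [t - proj * a for t, a in zip(target, u1)]  # Gram-Schmidt step
    v_len = norm(v)
    if v_len < 1e-12:
        # target is parallel to h, so no unique plane exists; leave h unchanged
        return list(h)
    u2 = [a / v_len for a in v]                     # in-plane unit vector orthogonal to h
    theta = fraction * math.atan2(v_len, proj)      # partial angle from h toward target
    return [h_len * (math.cos(theta) * a + math.sin(theta) * b)
            for a, b in zip(u1, u2)]

# Hypothetical 4-dimensional word embeddings, chosen by hand for illustration
EMBEDDINGS: Dict[str, Vector] = {
    "light":     [0.0, 1.0, 0.0, 0.0],
    "materials": [0.0, 0.0, 1.0, 0.0],
    "physics":   [0.0, 0.0, 0.0, 1.0],
}

def encode(words: List[str], embeddings: Dict[str, Vector],
           fraction: float = 0.5) -> Vector:
    """Start from a fixed unit vector and let each word swing it in turn."""
    dim = len(next(iter(embeddings.values())))
    h = [1.0] + [0.0] * (dim - 1)
    for word in words:
        h = rotate_toward(h, embeddings[word], fraction)
    return h

forward = encode(["light", "materials"], EMBEDDINGS)
backward = encode(["materials", "light"], EMBEDDINGS)
print(forward, norm(forward))
print(backward, norm(backward))
```

In this toy, swapping the order of the two words yields a different final vector (roughly [0.5, 0.5, 0.707, 0] versus [0.5, 0.707, 0.5, 0]) while its length stays at 1, so the direction of the state, rather than its size, records what was read and in what order.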

Preslav Nakov, a co-author of the paper, said, “RUM helps neural networks to do two things very well. It helps them to remember better, and it enables them to recall information more accurately.”

Soljačić explained, “After developing the RUM system to help with certain tough physics problems such as the behavior of light in complex engineered materials, we realized one of the places where we thought this approach could be useful would be natural language processing.”

“And so we tried a few natural language processing tasks on it. One that we tried was summarizing articles, and that seems to be working quite well.”

Co-author Tatalović noted that such a tool would be useful for his work as an editor trying to decide which papers to write about. At the time, Tatalović was exploring AI in science journalism as his Knight fellowship project.

The RUM-based system has since been expanded so it can “read” through entire research papers, not just their abstracts, to produce a summary of their contents. The researchers even tried the system on their own paper describing these findings, the very paper that this news story is attempting to summarize.

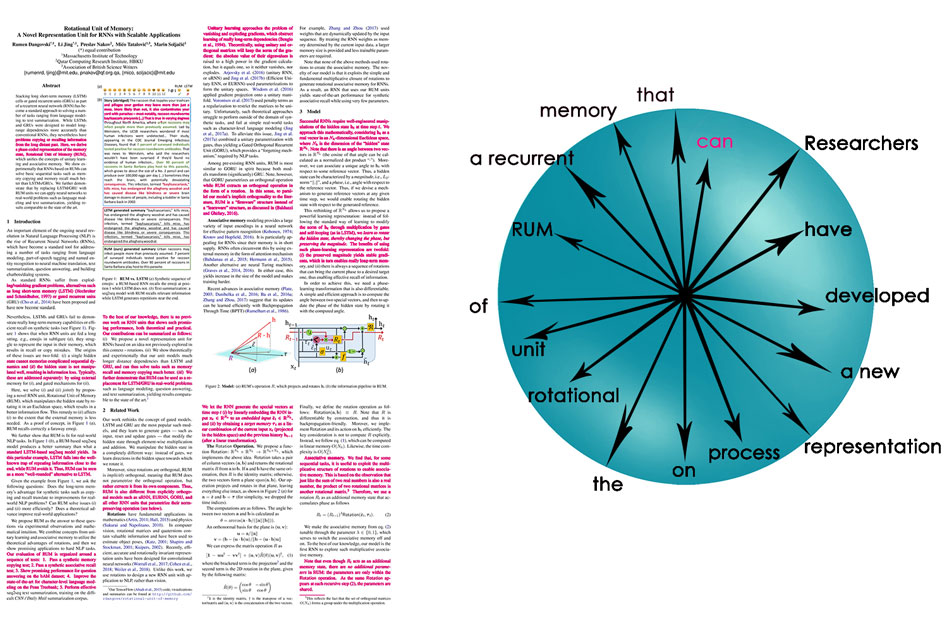

Here is the new neural network’s summary: “Researchers have developed a new representation process on the rotational unit of RUM, a recurrent memory that can be used to solve a broad spectrum of the neural revolution in natural language processing.”

It may not be elegant prose, but it does at least hit the key points of information.

Çağlar Gülçehre, a research scientist at the British AI company DeepMind Technologies who was not involved in this work, says this research tackles an important problem in neural networks: relating pieces of information that are widely separated in time or space.

He said, “This problem has been a very fundamental issue in AI due to the necessity to do reasoning over long time-delays in sequence-prediction tasks. Although I do not think this paper completely solves this problem, it shows promising results on the long-term dependency tasks such as question-answering, text summarization, and associative recall.”

Gülçehre adds, “Since the experiments conducted and the model proposed in this paper are released as open-source on GitHub, as a result, many researchers will be interested in trying it on their own tasks. … To be more specific, potentially the approach proposed in this paper can have a very high impact on the fields of natural language processing and reinforcement learning, where the long-term dependencies are very crucial.”

The research received support from the Army Research Office, the National Science Foundation, the MIT-SenseTime Alliance on Artificial Intelligence, and the Semiconductor Research Corporation. The team also had help from the ScienceDaily website, whose articles were used in training some of the AI models in this research.

The work is described in the journal Transactions of the Association for Computational Linguistics.