Understanding web traffic patterns at such a large scale helps inform internet policy, identify and prevent outages, guard against cyberattacks, and design more efficient computing infrastructure.

Using a supercomputing system, MIT scientists, in partnership with the Widely Integrated Distributed Environment (WIDE) project, founded by several Japanese universities, and the Center for Applied Internet Data Analysis (CAIDA) in California, have developed a model that captures what web traffic looks like around the world on a given day. They think the model can serve as a measurement tool for internet research and many other applications.

Given a massive network dataset, the model generates statistical measurements of how all connections in the network affect each other. These measurements can give scientists insight into peer-to-peer filesharing, nefarious IP addresses and spamming behavior, the distribution of attacks in critical sectors, and traffic bottlenecks, helping them better allocate computing resources and keep data flowing.

Jeremy Kepner, a researcher at the MIT Lincoln Laboratory Supercomputing Center, said, “We built an accurate model for measuring the background of the virtual universe of the Internet. If you want to detect any variance or anomalies, you have to have a good model of the background.”

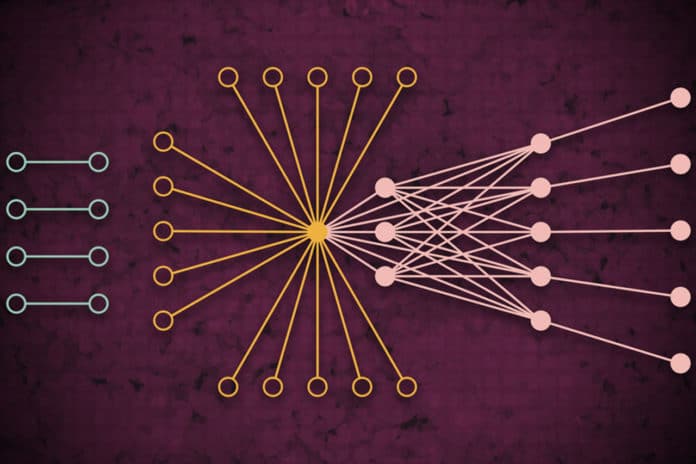

To develop the model, the scientists first gathered the largest publicly available internet traffic dataset, comprising 50 billion data packets exchanged at different locations across the globe over a period of several years. They then ran the data through a novel neural network pipeline operating across 10,000 processors of the MIT SuperCloud. That pipeline automatically trained a model that captures the relationships among all links in the dataset, from common pings to giants like Google and Facebook to rare links that only briefly connect yet seem to have some impact on web traffic.

Before training any model on the data, the scientists had to do extensive preprocessing. For this, they used software called Dynamic Distributed Dimensional Data Model (D4M), which uses averaging techniques to efficiently compute and sort “hypersparse data” that contains far more empty space than data points.

The data was then broken into units of 100,000 packets each, distributed across 10,000 MIT SuperCloud processors. This generated more compact matrices, with billions of rows and columns, of interactions between sources and destinations.
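To make the idea of windowed source-by-destination matrices concrete, here is a minimal sketch in plain Python. It is not the D4M software or the team's pipeline: the function names, the toy packets, and the window size of 3 are all hypothetical, and a dictionary of nonzero cells stands in for a real sparse-matrix library.

```python
from collections import Counter
from itertools import islice


def windows(packets, size):
    """Yield consecutive lists of `size` packets (the last may be shorter)."""
    it = iter(packets)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def traffic_matrix(window):
    """Sparse source-by-destination matrix: only nonzero cells are stored."""
    return Counter((src, dst) for src, dst in window)


def density(matrix):
    """Fraction of nonzero cells among the sources and destinations seen."""
    if not matrix:
        return 0.0
    sources = {s for s, _ in matrix}
    dests = {d for _, d in matrix}
    return len(matrix) / (len(sources) * len(dests))


# Hypothetical toy packets as (source, destination) pairs.
packets = [("a", "x"), ("a", "x"), ("b", "y"),
           ("c", "z"), ("a", "y"), ("d", "x")]

# One small matrix per window of 3 packets (the article describes 100,000).
matrices = [traffic_matrix(w) for w in windows(packets, 3)]
```

Storing only the nonzero cells is what keeps such matrices manageable: as more distinct sources and destinations appear, the number of possible cells grows much faster than the number of cells that actually hold traffic.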

However, the vast majority of cells in this hypersparse dataset were still empty. To process the matrices, the team ran a neural network on the same 10,000 cores. Behind the scenes, a trial-and-error technique started fitting models to the entirety of the data, creating a probability distribution of potentially accurate models.

The scientists then used a modified error-correction technique to further refine the parameters of each model, ensuring that it could capture a massive amount of the data.

Kepner said, “In the end, the neural network essentially generates a simple model, with only two parameters, that describes the internet traffic dataset, from really popular nodes to isolated nodes, and the complete spectrum of everything in between.”
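As an illustration of what fitting a two-parameter model by trial and error can look like, the sketch below runs a simple grid search over two parameters. The article does not give the model's functional form, so the power law used here, with probability proportional to 1/(d + delta)^alpha for a node with d connections, is an assumption chosen because it is a common two-parameter description of heavy-tailed network data. The grid search is a stand-in for the team's neural network and error-correction steps, not a reproduction of them, and the data is synthetic.

```python
def power_law(alpha, delta, d_max):
    """Normalized probabilities for degrees 1..d_max.

    Assumed form: p(d) proportional to 1 / (d + delta) ** alpha.
    """
    weights = [1.0 / (d + delta) ** alpha for d in range(1, d_max + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def fit(observed, alphas, deltas):
    """Try every (alpha, delta) pair and keep the one with the least squared error."""
    d_max = len(observed)
    best = None
    for alpha in alphas:
        for delta in deltas:
            predicted = power_law(alpha, delta, d_max)
            err = sum((o - p) ** 2 for o, p in zip(observed, predicted))
            if best is None or err < best[0]:
                best = (err, alpha, delta)
    return best[1], best[2]


# Synthetic "observed" degree distribution generated from known parameters.
observed = power_law(2.0, 0.5, 50)

# Hypothetical candidate grids: alpha in 1.0..3.0, delta in 0.0..2.0.
alphas = [1.0 + 0.25 * i for i in range(9)]
deltas = [0.25 * i for i in range(9)]

alpha, delta = fit(observed, alphas, deltas)
```

Because the synthetic data was generated from parameters that lie on the grid, the search recovers them exactly; with real traffic data the best pair would only approximate the observed distribution.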

A paper describing the approach was presented at the recent IEEE High-Performance Extreme Computing Conference.