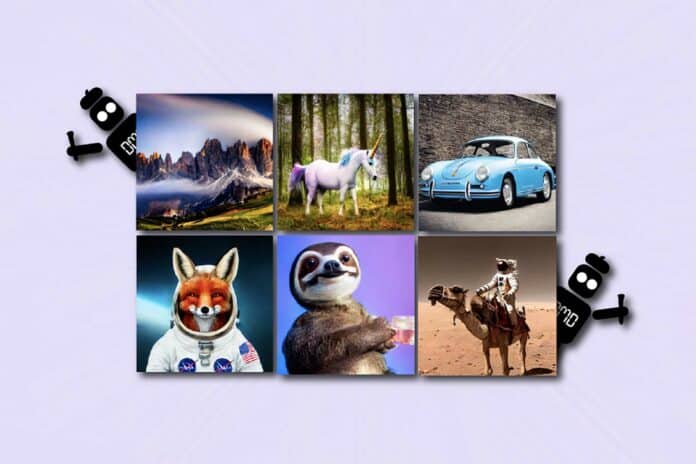

Diffusion models generate high-quality images but require dozens of forward passes. MIT scientists have created a one-step AI image generator that simplifies the multiple processes of traditional diffusion models into a single step.

Generating in a single step makes it 30 times faster. This is accomplished by training a new model (the student) to imitate the behavior of a more complex, original image-generating model (the teacher), an arrangement known as a teacher-student model. The method, called distribution matching distillation (DMD), produces images much more quickly while maintaining their quality.

This work presents a novel method that accelerates existing diffusion models such as Stable Diffusion and DALL-E 3 by a factor of 30. It reduces computing time while retaining, if not surpassing, the quality of the generated visual content.

The method combines ideas from diffusion models and generative adversarial networks (GANs) to generate visual content in a single step, as opposed to the hundred steps of iterative refinement that diffusion models currently require. It could become a new generative modeling technique that excels in both speed and quality.

The DMD method has two components:

- Regression loss: Anchors the mapping to ensure a coarse organization of the space of images, which makes training more stable.

- Distribution matching loss: Ensures that the probability of generating a given image with the student model corresponds to its real-world occurrence frequency.

To accomplish this, two diffusion models are used as guides. They enable the system to distinguish between generated and real images and to train the fast one-step generator.

The system achieves faster generation by training a new network to minimize the distribution divergence between its generated images and those from the training dataset used by traditional diffusion models.

Tianwei Yin, an MIT PhD student in electrical engineering and computer science, CSAIL affiliate, and the lead researcher on the DMD framework, said, “Our key insight is to approximate gradients that guide the improvement of the new model using two diffusion models. In this way, we distill the knowledge of the original, more complex model into the simpler, faster one while bypassing the notorious instability and mode collapse issues in GANs.”
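To make the idea of guiding the student with two models more concrete, here is a minimal toy sketch in Python. It is not the researchers' implementation. It assumes a one-dimensional "image" distribution, replaces the two diffusion models with closed-form Gaussian scores, and uses a squared error as the regression loss. The names `REAL_MEAN`, `real_score`, `fake_score`, `reg_weight`, and `train_one_step_generator` are all hypothetical.

```python
import numpy as np

REAL_MEAN = 3.0  # assumed mean of the toy "real image" distribution


def real_score(x):
    """Score (gradient of the log-density) of the target N(REAL_MEAN, 1)."""
    return -(x - REAL_MEAN)


def fake_score(x, gen_mean):
    """Score of the generator's current output distribution N(gen_mean, 1)."""
    return -(x - gen_mean)


def train_one_step_generator(steps=200, lr=0.1, reg_weight=0.25, batch=256, seed=0):
    """Fit the toy generator G(z) = gen_mean + z and return the learned gen_mean."""
    rng = np.random.default_rng(seed)
    gen_mean = -2.0  # single learnable parameter, started far from the target
    for _ in range(steps):
        z = rng.standard_normal(batch)
        x = gen_mean + z            # one-step generation: a single "forward pass"
        teacher_x = REAL_MEAN + z   # stand-in for teacher outputs from the same noise
        # Distribution matching term: difference of the two scores.
        # The generator's derivative with respect to gen_mean is 1 here.
        dm_grad = np.mean(fake_score(x, gen_mean) - real_score(x))
        # Regression term: gradient of mean((x - teacher_x)**2) w.r.t. gen_mean.
        reg_grad = np.mean(2.0 * (x - teacher_x))
        gen_mean -= lr * (dm_grad + reg_weight * reg_grad)
    return gen_mean


if __name__ == "__main__":
    print(train_one_step_generator())
```

In this toy setting the learned mean ends up very close to `REAL_MEAN`. In the actual method the two scores would come from diffusion networks evaluated on noised images rather than from formulas, so this only illustrates the direction of the update.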

Pre-trained networks were used as the starting point for the new student model, which simplified the entire process. The researchers copied and fine-tuned the parameters of the original models to achieve fast training convergence of the new model, which can produce high-quality images with the same architectural foundation.

DMD showed consistent performance when the researchers tested it against conventional methods.

DMD is the first one-step diffusion technique to produce images on par with those from the original, more complex models on the popular benchmark of class-conditional image generation on ImageNet. Its Fréchet inception distance (FID) score comes within just 0.3 of the original model's, which is noteworthy because FID evaluates both the quality and the diversity of generated images.
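For readers unfamiliar with the metric, the following Python sketch shows the Fréchet distance that FID is built on, under the usual assumption that each set of image features is summarized by a Gaussian (mean and covariance). A real FID evaluation extracts the features with a pre-trained Inception network; here the feature arrays are simply passed in, and the function name `frechet_distance` is a hypothetical choice for illustration. Lower values mean the two sets are more similar.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two feature sets.

    Each input has shape (num_samples, num_features), with num_features >= 2.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^(1/2)) equals the sum of square roots of the eigenvalues
    # of cov_a @ cov_b, which are real and non-negative for covariance matrices.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    sqrt_trace = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * sqrt_trace)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real_feats = rng.standard_normal((500, 4))
    print(frechet_distance(real_feats, real_feats))        # close to zero
    print(frechet_distance(real_feats, real_feats + 2.0))  # dominated by the mean shift
```

Shifting every feature by a constant leaves the covariance unchanged, so in the second call the distance is essentially the squared length of the shift.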

DMD also performs well at industrial-scale text-to-image generation, where it achieves state-of-the-art one-step generation performance.

Furthermore, the quality of DMD-generated images is inherently tied to the capabilities of the teacher model used during distillation. In its current form, which employs Stable Diffusion v1.5 as the teacher model, the student inherits limitations such as difficulty rendering small faces and detailed text, which suggests that more sophisticated teacher models could improve DMD-generated images even further.

Fredo Durand, MIT professor of electrical engineering and computer science and CSAIL principal investigator, said, “Decreasing the number of iterations has been the Holy Grail in diffusion models since their inception.”

“We are very excited to finally enable single-step image generation, which will dramatically reduce compute costs and accelerate the process.”

Alexei Efros, a professor of electrical engineering and computer science at the University of California at Berkeley who was not involved in this study, said, “Finally, a paper that successfully combines the versatility and high visual quality of diffusion models with the real-time performance of GANs. I expect this work to open up fantastic possibilities for high-quality real-time visual editing.”

Journal Reference:

- Tianwei Yin, Michaël Gharbi, Richard Zhang, Eli Shechtman, Fredo Durand, William T. Freeman, Taesung Park. One-step Diffusion with Distribution Matching Distillation. DOI: 10.48550/arXiv.2311.18828