Designing new molecules for pharmaceuticals is primarily a manual, time-consuming process that’s prone to error. Chemists often rely on expert knowledge and tweak molecules by hand, adding and subtracting functional groups (atoms and bonds responsible for particular chemical reactions) one by one.

Even when they use systems that predict optimal chemical properties, chemists still need to carry out each modification step themselves. Each iteration can take hours and may still not produce a valid drug candidate.

Now, MIT researchers have developed a model that better selects lead molecule candidates based on desired properties. It is a step toward fully automating the drug design process, which could drastically speed things up and produce better results.

The model also modifies the molecular structure needed to achieve higher potency, while ensuring the molecule is still chemically valid.

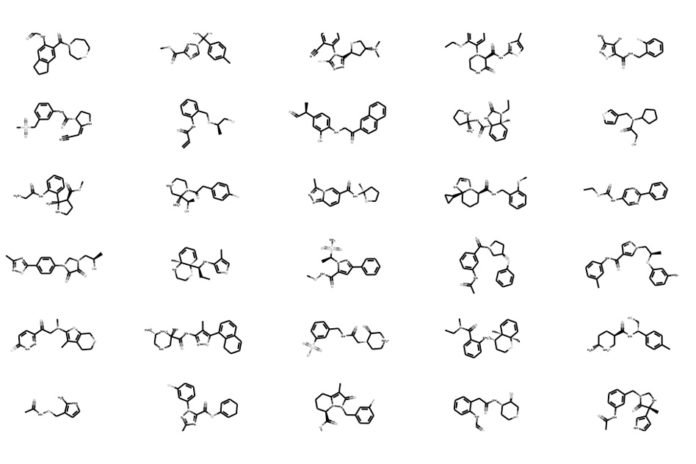

The model takes molecular structure data as input and converts it into molecular graphs: detailed representations of a molecular structure in which nodes represent atoms and edges represent bonds. It then breaks those graphs down into smaller clusters of valid functional groups, which it uses as “building blocks” that help it reconstruct and modify molecules more accurately.

Wengong Jin, a Ph.D. student in CSAIL, said, “The motivation behind this was to replace the inefficient human modification process of designing molecules with automated iteration and assure the validity of the molecules we generate.”

Regina Barzilay, the Delta Electronics Professor at CSAIL and EECS, said, “Today, it’s really a craft, which requires a lot of skilled chemists to succeed, and that’s what we want to improve. The next step is to take this technology from academia to use on real pharmaceutical design cases, and demonstrate that it can assist human chemists in doing their work, which can be challenging.”

Tommi S. Jaakkola, the Thomas Siebel Professor of Electrical Engineering and Computer Science in CSAIL, EECS, and at the Institute for Data, Systems, and Society, said, “Automating the process also presents new machine-learning challenges. Learning to relate, modify, and generate molecular graphs drives new technical ideas and methods.”

Systems that attempt to automate molecule design have cropped up in recent years, but their problem is validity. These systems often generate molecules that are invalid under chemical rules, and they fail to produce molecules with optimal properties. This essentially makes full automation of molecule design infeasible.

These systems run on linear notations of molecules, called “simplified molecular-input line-entry systems,” or SMILES, in which long strings of letters, numbers, and symbols represent individual atoms or bonds that can be interpreted by computer software.

As the system modifies a lead molecule, it expands the molecule’s string representation symbol by symbol (atom by atom, and bond by bond) until it generates a final SMILES string with a higher potency for a desired property. In the end, the system may produce a final SMILES string that seems valid under SMILES grammar but is actually chemically invalid.
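To make this failure mode concrete, here is a minimal sketch of a string that follows SMILES grammar yet describes an impossible molecule. It assumes a deliberately tiny subset of SMILES (uppercase C, N, and O atoms, the bond symbols `-`, `=`, and `#`, and parenthesized branches; no rings, charges, or aromatic atoms). The functions `parse_toy_smiles` and `check_valence` are hypothetical illustrations, not part of any cheminformatics library; real toolkits perform far more thorough chemical checks.

```python
# Maximum number of bonds each supported element can form (neutral atoms only).
MAX_VALENCE = {"C": 4, "N": 3, "O": 2}
# Bond order for each supported SMILES bond symbol.
BOND_ORDER = {"-": 1, "=": 2, "#": 3}


def parse_toy_smiles(smiles):
    """Parse a tiny SMILES subset into (atoms, bonds).

    Supported: single-letter atoms C, N, O; bonds '-', '=', '#';
    branches in parentheses. Rings, charges, brackets, and aromatic
    (lowercase) atoms are NOT supported.
    """
    atoms = []   # element symbol for each atom index
    bonds = []   # (atom_a, atom_b, bond_order)
    stack = []   # atoms at which a '(' branch was opened
    prev = None  # atom that the next atom will bond to
    order = 1    # order of the pending bond (single by default)
    for ch in smiles:
        if ch in MAX_VALENCE:
            atoms.append(ch)
            idx = len(atoms) - 1
            if prev is not None:
                bonds.append((prev, idx, order))
            prev, order = idx, 1
        elif ch in BOND_ORDER:
            order = BOND_ORDER[ch]
        elif ch == "(":
            stack.append(prev)
        elif ch == ")":
            if not stack:
                raise ValueError("unbalanced parentheses")
            prev = stack.pop()  # return to the atom the branch started from
        else:
            raise ValueError(f"unsupported SMILES character: {ch!r}")
    if stack:
        raise ValueError("unbalanced parentheses")
    return atoms, bonds


def check_valence(smiles):
    """Return (atom_index, element, bond_count) for every over-bonded atom."""
    atoms, bonds = parse_toy_smiles(smiles)
    used = [0] * len(atoms)
    for a, b, order in bonds:
        used[a] += order
        used[b] += order
    return [(i, el, used[i]) for i, el in enumerate(atoms) if used[i] > MAX_VALENCE[el]]
```

For example, `check_valence("CC(=O)O")` (acetic acid) returns an empty list, while `"C(C)(C)(C)(C)C"` parses cleanly but gives its first carbon five bonds, so `check_valence` reports `[(0, "C", 5)]`: grammatically acceptable, chemically invalid.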

The researchers solved this problem by building a model that runs on molecular graphs instead of SMILES strings. It works by encoding an input molecule into a vector, which is essentially a compact store of the molecule’s structural data, and then “decoding” that vector into a graph that matches the input molecule.

Jin explained, “In the encoding phase, the model breaks down each molecular graph into clusters, or ‘subgraphs,’ each of which represents a specific building block. Such clusters are automatically constructed by a common machine-learning concept called tree decomposition, where a complex graph is mapped into a tree structure of clusters, which gives a scaffold of the original graph.”
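The decomposition step can be illustrated with a simplified sketch; it is not the researchers’ implementation. Assumptions: the molecule is given as a plain list of bonds between atom indices; rings are found as fundamental cycles of a breadth-first spanning tree (adequate for isolated rings, not for fused ring systems); each bond outside a ring becomes its own two-atom cluster; and clusters that share atoms are linked by a maximum spanning tree weighted by the number of shared atoms. The function names are hypothetical, and refinements a full method would need (such as special handling of atoms shared by three or more clusters) are omitted.

```python
from collections import deque
from itertools import combinations


def fundamental_cycles(n_atoms, bonds):
    """Return rings as atom sets, one per bond left out of a BFS spanning tree."""
    adj = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    parent, depth = {}, {}
    for root in range(n_atoms):  # handle disconnected graphs too
        if root in parent:
            continue
        parent[root], depth[root] = None, 0
        queue = deque([root])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in parent:
                    parent[v], depth[v] = u, depth[u] + 1
                    queue.append(v)
    tree_edges = {frozenset((v, p)) for v, p in parent.items() if p is not None}
    rings = []
    for a, b in bonds:
        if frozenset((a, b)) in tree_edges:
            continue
        # Climb from both endpoints to their lowest common ancestor,
        # collecting every atom on the way: that path plus (a, b) is a ring.
        ring, u, v = {a, b}, a, b
        while u != v:
            if depth[u] >= depth[v]:
                u = parent[u]
            else:
                v = parent[v]
            ring.update((u, v))
        rings.append(ring)
    return rings


def find_clusters(n_atoms, bonds):
    """Clusters = rings plus every bond that does not lie inside a ring."""
    rings = fundamental_cycles(n_atoms, bonds)
    clusters = [frozenset(r) for r in rings]
    for a, b in bonds:
        if not any(a in r and b in r for r in rings):
            clusters.append(frozenset((a, b)))
    return clusters


def junction_tree(clusters):
    """Connect clusters sharing atoms with a maximum spanning tree (Kruskal)."""
    candidates = []
    for i, j in combinations(range(len(clusters)), 2):
        shared = len(clusters[i] & clusters[j])
        if shared:
            candidates.append((shared, i, j))
    candidates.sort(reverse=True)  # prefer pairs that share more atoms
    root = list(range(len(clusters)))  # union-find forest

    def find(x):
        while root[x] != x:
            root[x] = root[root[x]]  # path halving
            x = root[x]
        return x

    edges = []
    for _, i, j in candidates:
        ri, rj = find(i), find(j)
        if ri != rj:  # only add edges that keep the result a tree
            root[ri] = rj
            edges.append((i, j))
    return edges
```

For a six-membered ring (atoms 0 to 5) carrying a two-atom side chain (bonds 0–6 and 6–7), `find_clusters` yields three building blocks (the ring, the bond {0, 6}, and the bond {6, 7}), and `junction_tree` links them into the chain ring, {0, 6}, {6, 7}: a tree-shaped scaffold of the original graph.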

“In the decoding phase, the model reconstructs the molecular graph in a ‘coarse-to-fine’ manner, much like gradually increasing the resolution of a low-resolution image to create a more refined version. It first generates the tree-structured scaffold and then assembles the associated clusters (nodes in the tree) together into a coherent molecular graph. This ensures the reconstructed molecular graph is an exact replication of the original structure.”

For lead optimization, the model can then modify lead molecules based on a desired property. It does so with the help of a prediction algorithm that scores each molecule with a potency value for that property. In the paper, for example, the researchers sought molecules with a combination of two properties: high solubility and synthetic accessibility.

The model then optimizes a lead molecule by using the prediction algorithm to modify its vector, and therefore its structure, by editing the molecule’s functional groups to achieve a higher potency score. It repeats this step over multiple iterations until it finds the highest predicted potency score. Finally, the model decodes a new molecule from the updated vector, with a modified structure, by assembling all the corresponding clusters.
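The iterative step above can be sketched as gradient ascent on a property predictor in the vector (latent) space. This is a minimal sketch under stated assumptions: `predicted_score` is a hypothetical hand-written quadratic stand-in for a trained, differentiable predictor; the encoder and decoder are omitted entirely; and the step size, iteration cap, and stopping tolerance are illustrative choices, not values from the paper.

```python
# Hypothetical stand-in for a trained property predictor: a concave
# surrogate whose score peaks at TARGET. A real system would use a
# learned neural network that takes the molecule's latent vector.
TARGET = [1.0, -2.0, 0.5]


def predicted_score(z):
    """Higher is better; the maximum (0.0) is reached at z == TARGET."""
    return -sum((zi - ti) ** 2 for zi, ti in zip(z, TARGET))


def score_gradient(z):
    """Analytic gradient of predicted_score with respect to z."""
    return [-2.0 * (zi - ti) for zi, ti in zip(z, TARGET)]


def optimize_latent(z, step=0.1, iterations=100, tol=1e-9):
    """Nudge z uphill on the predicted score until it stops improving.

    Returns the best latent vector found and its predicted score.
    """
    best = predicted_score(z)
    for _ in range(iterations):
        grad = score_gradient(z)
        candidate = [zi + step * gi for zi, gi in zip(z, grad)]
        score = predicted_score(candidate)
        if score <= best + tol:
            break  # no meaningful improvement: treat as converged
        z, best = candidate, score
    return z, best
```

Starting from `optimize_latent([0.0, 0.0, 0.0])`, the predicted score climbs from -5.25 toward its maximum as the vector moves toward `TARGET`. In the full system, the optimized vector would then be handed to the decoder, which assembles the corresponding clusters into a new molecule; that step is not shown here.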

The researchers trained the model on 250,000 molecular graphs from the ZINC database and tested it on tasks to generate valid molecules, find the best lead molecules, and design novel molecules with increased potencies.

Next, the researchers aim to test the model on more properties beyond solubility, which are more therapeutically relevant. That, however, requires more data. Jin said, “Pharmaceutical companies are more interested in properties that fight against biological targets, but they have less data on those. A challenge is developing a model that can work with a limited amount of training data.”