Recently, methods have been proposed that use deep learning to infer holograms directly from three-dimensional (3D) data. This approach is expensive, however, because inference requires depth data captured with an RGB-D camera.

Researchers from Chiba University have developed a game-changing method that uses neural networks to convert standard two-dimensional color images into three-dimensional holograms, capitalizing on recent advances in deep learning.

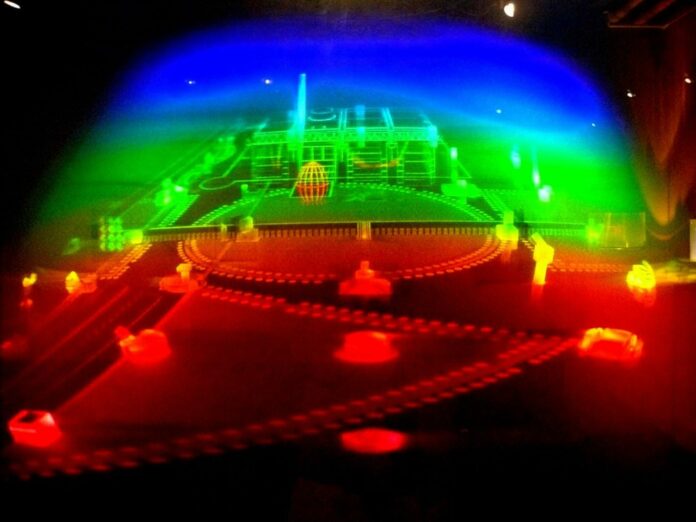

Holograms, which show objects in three dimensions (3D), offer a level of detail that conventional two-dimensional (2D) photographs cannot. Because they provide a realistic and immersive view of 3D objects, holograms hold immense promise for use in various industries, including medical imaging, manufacturing, and virtual reality.

Traditionally, holograms have been created by recording an object’s three-dimensional data and the interactions of light with the object. However, this method is computationally intensive, and it requires a special camera to capture the 3D images. Together, these demands make hologram generation difficult and restrict its wider application.
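To give a feel for where the computational cost comes from, here is a minimal sketch of one common textbook way to compute a hologram from 3D data, the point-source method. It is not necessarily the baseline the researchers compared against. The function name `point_source_hologram`, its parameters, and the example values are all illustrative assumptions.

```python
import cmath
import math


def point_source_hologram(points, width, height, pitch, wavelength):
    """Sum a spherical wave from every 3D point at every hologram pixel.

    points: list of (x, y, z, amplitude); x, y, z in metres, with z > 0
            the distance from the point to the hologram plane.
    Returns a height x width nested list of complex field values.
    """
    k = 2.0 * math.pi / wavelength  # wavenumber
    field = [[0j] * width for _ in range(height)]
    for row in range(height):
        y = (row - height / 2) * pitch
        for col in range(width):
            x = (col - width / 2) * pitch
            total = 0j
            # Inner loop over all object points: this is the expensive part.
            for px, py, pz, amp in points:
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                total += amp / r * cmath.exp(1j * k * r)
            field[row][col] = total
    return field


# Hypothetical example: two points, a tiny 8 x 8 hologram, green laser light.
hologram = point_source_hologram(
    points=[(0.0, 0.0, 0.1, 1.0), (1e-4, -1e-4, 0.12, 0.5)],
    width=8, height=8, pitch=8e-6, wavelength=532e-9,
)
```

The work grows with the number of pixels multiplied by the number of object points, so a megapixel hologram of a scene with tens of thousands of points already needs tens of billions of wave evaluations.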

Now, a team of researchers led by Professor Tomoyoshi Shimobaba of the Graduate School of Engineering, Chiba University, proposes a novel deep-learning approach that further streamlines hologram generation by producing 3D images directly from regular 2D color images captured with ordinary cameras.

Prof. Shimobaba said, “There are several problems in realizing holographic displays, including the acquisition of 3D data, the computational cost of holograms, and the transformation of hologram images to match the characteristics of a holographic display device. We undertook this study because we believe that deep learning has developed rapidly in recent years and can potentially solve these problems.”

The proposed method employs three deep neural networks (DNNs) to convert a standard 2D color image into data that can be used to display a 3D scene or object as a hologram. The first DNN takes a color image captured with a standard camera as its input and predicts the corresponding depth map, which describes the image’s 3D structure.

The second DNN then uses the depth map from the first DNN, together with the original RGB image, to produce a hologram. Finally, the third DNN refines that hologram so that it is suitable for display on different devices.
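The three-stage data flow described above can be sketched as follows. This is only an illustration of how the stages chain together, not the authors' implementation: the three `toy_*` functions are hypothetical stand-ins for trained networks, and the phase-only step in the third stage is an assumed example of a display constraint rather than something the article specifies.

```python
import cmath
import math
from typing import List, Tuple

RGBImage = List[List[Tuple[float, float, float]]]  # H x W pixels, values in [0, 1]
DepthMap = List[List[float]]                       # H x W depth values
Hologram = List[List[complex]]                     # H x W complex field


def toy_depth_net(rgb: RGBImage) -> DepthMap:
    # Stand-in for DNN 1 (assumption): pretend brightness equals depth.
    return [[sum(px) / 3.0 for px in row] for row in rgb]


def toy_hologram_net(rgb: RGBImage, depth: DepthMap) -> Hologram:
    # Stand-in for DNN 2 (assumption): amplitude from brightness, phase from depth.
    return [
        [cmath.rect(sum(px) / 3.0, 2.0 * math.pi * d)
         for px, d in zip(rgb_row, depth_row)]
        for rgb_row, depth_row in zip(rgb, depth)
    ]


def toy_refine_net(hologram: Hologram) -> Hologram:
    # Stand-in for DNN 3 (assumption): keep only the phase, with unit amplitude.
    return [[cmath.rect(1.0, cmath.phase(v)) for v in row] for row in hologram]


def generate_hologram(rgb: RGBImage,
                      depth_net=toy_depth_net,
                      hologram_net=toy_hologram_net,
                      refine_net=toy_refine_net) -> Hologram:
    depth = depth_net(rgb)          # stage 1: 2D color image -> depth map
    raw = hologram_net(rgb, depth)  # stage 2: image + depth map -> hologram
    return refine_net(raw)          # stage 3: adapt hologram for the display


# Hypothetical 2 x 2 input image.
image = [[(0.2, 0.4, 0.6), (1.0, 1.0, 1.0)],
         [(0.0, 0.0, 0.0), (0.5, 0.5, 0.5)]]
result = generate_hologram(image)
```

Note that the only input to `generate_hologram` is the 2D color image. The depth map is produced inside the pipeline, which is why no depth camera is needed at inference time.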

The researchers found that the proposed approach processed the data and generated a hologram faster than hologram calculation on a state-of-the-art graphics processing unit.

Prof. Shimobaba, while discussing the results, further said, “Another noteworthy benefit of our approach is that the reproduced image of the final hologram can represent a natural 3D reproduced image. Moreover, since depth information is not used during hologram generation, this approach is inexpensive and does not require 3D imaging devices such as RGB-D cameras after training.”

In the near future, this method could be used to create high-fidelity 3D displays for head-up and head-mounted displays. It could also transform the development of in-car holographic head-up displays, which would present passengers with the necessary information about people, roads, and signs in 3D. The proposed method is thus expected to open up new opportunities for accelerating the development of pervasive holographic technology.

Journal Reference:

- Yoshiyuki Ishii, Fan Wang, Harutaka Shiomi et al. Multi-depth hologram generation from two-dimensional images by deep learning. Optics and Lasers in Engineering. DOI: 10.1016/j.optlaseng.2023.107758