In-person gatherings can be difficult to record due to the lack of control over the surrounding sounds. Unlike virtual meetings, where participants can easily mute themselves, there is no such option in a bustling cafe or a crowded room. Researchers have been grappling with the challenge of locating and controlling sound – isolating one person talking from a specific location in a crowded room, for instance – especially without the support of visual cues from cameras.

Now, a team of researchers at the University of Washington has developed a shape-changing smart speaker, which uses self-deploying microphones to divide rooms into speech zones and track the positions of individual speakers.

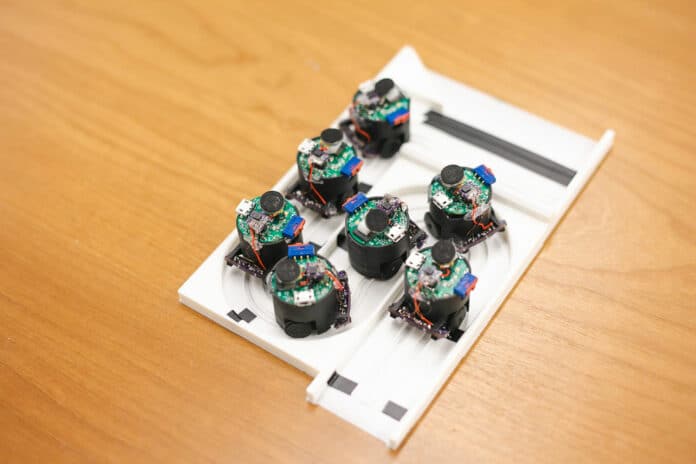

Thanks to the team’s intelligent deep-learning algorithms, the system enables users to mute certain areas or separate simultaneous conversations, even if two adjacent people have similar voices. The concept uses a swarm of seven little mic-bots on wheels, each about an inch in diameter. These microphones automatically deploy themselves from a charging station and create a self-optimizing array in the space available.

Because the microphones deploy themselves, the system can be moved between environments and set up without manual placement. For instance, it could replace a central microphone in a conference room, giving better control over in-room audio.

The team’s prototype consists of seven small robots that spread themselves across tables of various sizes. They emit a high-frequency sound as they leave their charging station. Using this sound together with other sensors, the robots avoid obstacles and move around without falling off the table.

Automatic deployment lets the robots position themselves for maximum accuracy, giving greater sound control than a layout set up by hand. The robots disperse as far from each other as possible, since greater distances make it easier to differentiate and locate the people speaking.
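The idea of spreading out as far as possible can be illustrated with a greedy farthest-point selection. This is only a sketch of the general principle, not the researchers' deployment algorithm: the table size, grid spacing, dock position, and the function name `spread_positions` are all assumptions made for illustration.

```python
import math

def spread_positions(candidates, start, count):
    """Greedy farthest-point selection (illustrative, not the paper's method).

    Starting from `start`, repeatedly pick the candidate whose distance to
    its nearest already-chosen point is largest.
    """
    chosen = [start]
    while len(chosen) < count:
        best = max(
            (p for p in candidates if p not in chosen),
            key=lambda p: min(math.dist(p, q) for q in chosen),
        )
        chosen.append(best)
    return chosen

# Hypothetical 120 cm x 80 cm table sampled on a 20 cm grid.
grid = [(x * 20, y * 20) for x in range(7) for y in range(5)]
dock = (0, 0)  # assumed position of the charging station

# Seven robots, as in the prototype described in the article.
layout = spread_positions(grid, dock, 7)
print(layout)
```

Each new position is chosen to maximize its distance from the nearest robot already placed, so the array tends to fill the corners and edges of the table before the interior.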

The team tested the robots in offices, living rooms, and kitchens, with groups of three to five people speaking. The system could distinguish between voices located within 1.6 feet (50 centimeters) of each other 90% of the time, even without prior knowledge of the number of speakers. On average, it processed three seconds of audio in 1.82 seconds, which is fast enough for live streaming but a bit too slow for real-time communications such as video calls.
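The timing figures can be checked with a little arithmetic. The sketch below uses only the two numbers reported above. The delay estimate rests on an assumption that is not stated in the article: that each three-second chunk must be fully captured before processing starts.

```python
audio_seconds = 3.0        # length of audio processed at a time (from the article)
processing_seconds = 1.82  # average processing time (from the article)

# A ratio below 1 means processing finishes before the next chunk
# has been captured, so the system keeps up with a continuous stream.
real_time_factor = processing_seconds / audio_seconds

# Assumption: a chunk is processed only after it is fully captured, so the
# earliest sound in a chunk waits for the whole chunk plus the processing time.
worst_case_delay = audio_seconds + processing_seconds

print(f"real-time factor: {real_time_factor:.2f}")
print(f"worst-case delay: {worst_case_delay:.2f} s")
```

Under that assumption, the system keeps pace with incoming audio, which suits live streaming, but the delay of several seconds is far more than a two-way conversation can tolerate.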

As the technology progresses, acoustic swarms might soon be used in smart homes to tell apart the people talking to smart speakers. This could allow only the people sitting in a particular area to control a TV or other devices with voice commands, making the experience more intuitive and personalized.

The researchers acknowledge the potential for misuse: such technology could be used as a surveillance tool, especially in situations where background noise would otherwise mask private conversations. They have therefore built in safeguards. The robots navigate using microphones rather than an onboard camera, and they are visibly active, with blinking lights. Unlike most smart speakers, the acoustic swarm also processes all audio locally to protect privacy.

“It has the potential to actually benefit privacy beyond what current smart speakers allow,” said co-lead author Malek Itani in the press release. “I can say, ‘Don’t record anything around my desk,’ and our system will create a bubble 3 feet around me. Nothing in this bubble would be recorded. Or if two groups are speaking beside each other and one group is having a private conversation while the other group is recording, one conversation can be in a mute zone, and it will remain private.”
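The mute-zone idea in the quote can be sketched as a simple distance check, assuming the system has already estimated each speaker's position. The data layout, the coordinates, and the function name `outside_mute_zone` are hypothetical and are not taken from the researchers' software.

```python
import math

FEET_TO_METERS = 0.3048

def outside_mute_zone(sources, center, radius_ft=3.0):
    """Keep only the sources located outside a circular mute zone.

    `sources` is a list of dicts with a "pos" entry in meters (a
    hypothetical format); `center` is the zone's center in meters.
    """
    radius_m = radius_ft * FEET_TO_METERS
    return [s for s in sources if math.dist(s["pos"], center) > radius_m]

# Hypothetical localized speakers, positions in meters.
speakers = [
    {"name": "A", "pos": (0.2, 0.1)},
    {"name": "B", "pos": (0.8, 0.3)},
    {"name": "C", "pos": (2.0, 1.5)},
]
desk = (0.0, 0.0)  # assumed location of the person requesting privacy

kept = outside_mute_zone(speakers, desk)
print([s["name"] for s in kept])
```

In this example, speakers A and B fall inside the 3-foot bubble around the desk and are dropped, while speaker C, about 2.5 meters away, is kept.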

Journal reference:

- Malek Itani, Tuochao Chen, Takuya Yoshioka, and Shyamnath Gollakota. Creating speech zones with self-distributing acoustic swarms. Nature Communications, 2023; DOI: 10.1038/s41467-023-40869-8