Human faces are mechanical systems that use movements of pliable facial tissue to convey linguistic and emotional content. Because each facial action creates a unique stress-strain field that defines the resulting facial appearance, a thorough examination of the strain distribution associated with each action will benefit both the analysis and the synthesis of human faces.

A multi-institutional research team led by Osaka University has been studying the mechanical details of real human facial expressions to help make lifelike artificial faces a reality.

The researchers thoroughly examined 44 unique facial motions, such as blinking or lifting the corner of the mouth, using 125 tracking markers attached to the participant’s face.

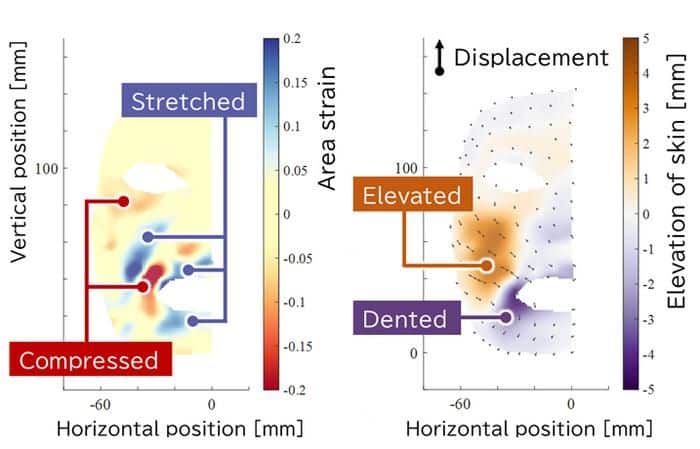

They used the area strains produced by each facial action to determine how much the skin surface is stretched or compressed in each facial region. They then assessed the complexity of the facial deformations by visualizing the strain distributions and surface undulations.
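To make the idea of area strain concrete, here is a minimal sketch that assumes the skin surface is approximated by triangles spanned by neighboring markers, and that area strain is the relative change in triangle area between a neutral and a deformed pose. The article does not describe the study's actual computation, so the function names and marker coordinates below are hypothetical illustrations only.

```python
import math


def triangle_area(p, q, r):
    """Area of a triangle in 3D given its three vertex positions."""
    ux, uy, uz = (q[i] - p[i] for i in range(3))
    vx, vy, vz = (r[i] - p[i] for i in range(3))
    # Half the magnitude of the cross product of two edge vectors.
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def area_strain(neutral, deformed):
    """Relative area change: positive means stretch, negative means compression."""
    a0 = triangle_area(*neutral)
    a1 = triangle_area(*deformed)
    return (a1 - a0) / a0


# Hypothetical marker coordinates in millimeters (not data from the study).
neutral = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
stretched = [(0.0, 0.0, 0.0), (11.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
compressed = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 9.0, 0.0)]

print(area_strain(neutral, stretched))   # positive: the patch is stretched
print(area_strain(neutral, compressed))  # negative: the patch is compressed
```

Evaluating this quantity for every marker triangle and coloring each triangle by its sign and magnitude would give one simple way to visualize a strain distribution over the face.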

The findings show that positive and negative surface strains are mixed across the face even during basic facial movements. This may reflect the intricate structure beneath the skin, which consists of heterogeneously distributed tissues with differing material properties, such as retaining ligaments and adipose tissue.

From an engineering perspective, our faces are amazing information display devices. By looking at people’s facial expressions, we can tell when a smile hides sadness or whether someone feels tired or nervous.

The data from this study can help researchers working with artificial faces, both those rendered digitally on screens and, ultimately, the physical faces of android robots. Precise measurements of human faces, which reveal the tensions and compressions in the facial structure, can help make artificial expressions accurate and natural-looking.

Akihiro Nakatani, senior author, said, “The facial structure beneath our skin is complex. The deformation analysis in this study could explain how sophisticated expressions, which comprise both stretched and compressed skin, can result from deceivingly simple facial actions.”

Although this study has so far examined only one person’s face, the researchers intend to use their findings as a starting point for a more comprehensive understanding of how people move their faces. The work could help avoid the dreaded “uncanny valley” effect by improving facial motion in computer graphics, such as those used in movies and video games, and could help robots recognize and convey emotion.

Journal Reference:

- Takeru MISU, Hisashi ISHIHARA, So NAGASHIMA, Yusuke DOI, Akihiro NAKATANI. Visualization and analysis of skin strain distribution in various human facial actions. Mechanical Engineering Journal. DOI: 10.1299/mej.23-00189