MIT scientists have developed a new module that helps artificial intelligence systems called convolutional neural networks, or CNNs, fill in the gaps between video frames, greatly improving the networks’ activity recognition. The scientists dubbed the module the Temporal Relation Network (TRN); it learns how objects in a video change over time.

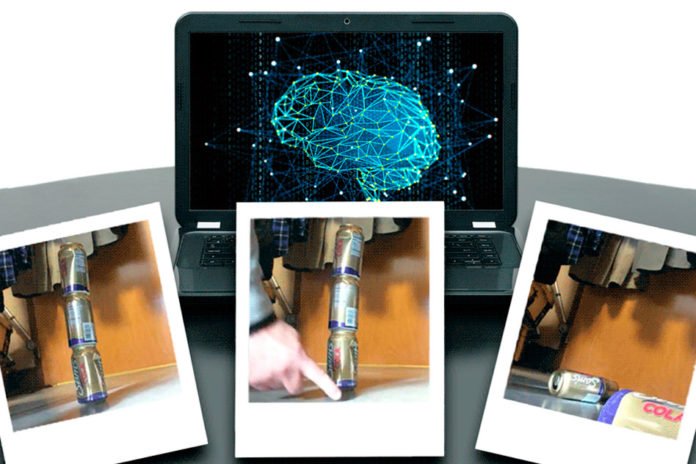

The module does this by analyzing a few keyframes that depict an activity at different stages of a video, for example, stacked objects that are then knocked down. Using the same process, it can then recognize the same type of activity in a new video.

In experiments, the module outperformed other models at recognizing hundreds of basic activities, such as poking objects to make them fall, tossing something in the air, and giving a thumbs-up. Given only a small number of early frames, it also accurately predicted what would happen next in a video, for example, one showing two hands making a small tear in a sheet of paper.

Bolei Zhou, a former Ph.D. student in the Computer Science and Artificial Intelligence Laboratory (CSAIL), said: “We built an artificial intelligence system to recognize the transformation of objects, rather than the appearance of objects. The system doesn’t go through all the frames; it picks up keyframes and, using the temporal relation of frames, recognizes what’s going on. That improves the efficiency of the system and makes it run accurately in real time.”

The researchers trained and tested their module on three crowdsourced datasets of short videos of people performing various activities. The first dataset, called Something-Something, built by the company TwentyBN, has more than 200,000 videos in 174 action categories, such as poking an object so it falls over or lifting an object.

The second dataset, Jester, contains nearly 150,000 videos of 27 different hand gestures, such as giving a thumbs-up or swiping left. The third, Charades, built by Carnegie Mellon University researchers, has nearly 10,000 videos spanning 157 activity categories, such as carrying a bike or playing basketball.

When given a video file, the researchers’ module simultaneously processes ordered groups of two, three, and four frames spaced some time apart. It then quickly assigns a probability that the object’s transformation across those frames matches a specific activity class.
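To make the multi-scale idea concrete, here is a minimal Python sketch. It is not the researchers’ code. It assumes each frame has already been reduced to a feature vector (in the real system a CNN would produce these; here they are random numbers). The sketch samples a few temporally ordered groups of 2, 3, and 4 frames, scores each group with a per-scale relation function, sums the scores across groups and scales, and converts the totals into class probabilities. The learned networks in the actual TRN are replaced by untrained linear maps with random weights, so the printed probabilities carry no meaning. All names and parameters (`sample_ordered_tuples`, `multiscale_relation_probs`, `tuples_per_scale`) are hypothetical.

```python
import itertools
import math
import random
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def sample_ordered_tuples(num_frames: int, scale: int, k: int,
                          rng: random.Random) -> List[Tuple[int, ...]]:
    """Pick up to k distinct groups of `scale` frame indices, each in temporal order."""
    # combinations() over range(...) yields indices in increasing (temporal) order.
    candidates = list(itertools.combinations(range(num_frames), scale))
    rng.shuffle(candidates)
    return candidates[:k]


def linear(weights: List[Vector], x: Vector) -> Vector:
    """Matrix-vector product; a hypothetical stand-in for a learned relation network."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def softmax(scores: Vector) -> Vector:
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multiscale_relation_probs(frames: Sequence[Vector],
                              weights: Dict[int, List[Vector]],
                              num_classes: int,
                              tuples_per_scale: int = 3,
                              seed: int = 0) -> Vector:
    """Sum relation scores over ordered frame groups at every scale, then normalize."""
    rng = random.Random(seed)
    logits = [0.0] * num_classes
    for scale, w in weights.items():
        for group in sample_ordered_tuples(len(frames), scale, tuples_per_scale, rng):
            # Concatenate the per-frame features of this ordered group.
            joint = [v for idx in group for v in frames[idx]]
            for c, s in enumerate(linear(w, joint)):
                logits[c] += s
    return softmax(logits)


# Hypothetical setup: random frame features and random (untrained) weights.
rng = random.Random(42)
FEAT_DIM, NUM_CLASSES, NUM_FRAMES = 4, 3, 8
frames = [[rng.gauss(0, 1) for _ in range(FEAT_DIM)] for _ in range(NUM_FRAMES)]
weights = {scale: [[rng.gauss(0, 0.1) for _ in range(scale * FEAT_DIM)]
                   for _ in range(NUM_CLASSES)]
           for scale in (2, 3, 4)}
probs = multiscale_relation_probs(frames, weights, NUM_CLASSES)
print([round(p, 3) for p in probs])
```

Summing over several scales lets short-range (two-frame) and longer-range (four-frame) relations contribute to the same decision. This mirrors the article's description of processing groups of two, three, and four frames at once.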

For instance, if it processes two frames where the earlier frame shows an object at the top of the screen and the later one shows it at the bottom, it will assign a high probability to the activity class “moving object down.”

If a third frame shows the object in the middle of the screen, that probability increases further, and so on. In this way, the module learns which object-transformation features across frames best represent each class of activity.
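The two-frame versus three-frame example can be mimicked with a hand-written toy score. This is an illustration only. The real module learns such transformation features from training data rather than applying a fixed rule, and `moving_down_probability` and its `sharpness` parameter are hypothetical. Positions run from 0.0 (top of the screen) to 1.0 (bottom). Each ordered pair of sampled frames in which the object sits lower in the later frame adds one unit of evidence for “moving object down,” and each pair where it sits higher subtracts one.

```python
import itertools
import math
from typing import Sequence


def moving_down_probability(y_positions: Sequence[float], sharpness: float = 2.0) -> float:
    """Hypothetical toy score for the class "moving object down".

    y_positions holds the object's vertical position in each sampled frame,
    in temporal order (0.0 = top of the screen, 1.0 = bottom).
    """
    evidence = 0
    # combinations() keeps the input order, so each pair is (earlier, later).
    for earlier, later in itertools.combinations(y_positions, 2):
        evidence += (later > earlier) - (later < earlier)  # +1 down, -1 up, 0 tie
    # Squash the accumulated evidence into a probability with a logistic function.
    return 1.0 / (1.0 + math.exp(-sharpness * evidence))


two_frames = moving_down_probability([0.1, 0.9])         # top, then bottom
three_frames = moving_down_probability([0.1, 0.5, 0.9])  # top, middle, bottom
print(f"{two_frames:.3f} {three_frames:.3f}")  # prints 0.881 0.998
```

Adding the middle frame raises the number of consistent pairs from one to three, so the probability climbs, as the article describes.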

The module also outperformed other models at forecasting an activity from a limited number of frames. After processing the first 25 percent of frames, the module achieved accuracy several percentage points higher than a baseline model.

Zhou said, “That’s important for robotics applications. You want [a robot] to anticipate and forecast what will happen early on when you do a specific action.”

Co-authors on the paper are CSAIL principal investigator Antonio Torralba, who is also a professor in the Department of Electrical Engineering and Computer Science; CSAIL Principal Research Scientist Aude Oliva; and CSAIL Research Assistant Alex Andonian.