The second law of thermodynamics establishes the concept of entropy. It states that the total entropy of an isolated system can increase or stay constant, but never decrease.

Physicists have studied the relationship between entropy and information since at least the nineteenth century. One modern line of that research examines the energy cost of writing or erasing bits in computers.

To better understand the connection between thermodynamics and information processing in computation, physicist David Wolpert, a resident faculty member at the Santa Fe Institute (SFI), and physicist Artemy Kolchinsky, a former SFI postdoctoral fellow, conducted a new study that applies these ideas to a wide range of classical and quantum settings, including quantum thermodynamics.

Wolpert said, “Computing systems are designed specifically to lose information about their past as they evolve.”

Consider a calculator that adds 2 and 2 and outputs 4. In producing that output, the machine loses information about its input, because the same output could have come from 3 + 1. From the answer alone, the machine cannot report which pair of numbers it was given.
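The many-to-one nature of addition can be made concrete with a short sketch. It assumes a hypothetical calculator that adds two single digits (0 to 9), with every input pair equally likely; the function names and the uniform-input assumption are illustrative and not taken from the study. The code lists every input pair that produces a given sum and computes, in bits, how much information about the input cannot be recovered from the output on average.

```python
from collections import defaultdict
from math import log2


def preimages_of_sum(max_digit=9):
    """Map each possible sum to the list of (a, b) input pairs that produce it."""
    table = defaultdict(list)
    for a in range(max_digit + 1):
        for b in range(max_digit + 1):
            table[a + b].append((a, b))
    return dict(table)


def bits_lost(max_digit=9):
    """Conditional entropy H(input | output) in bits, assuming uniformly random input pairs."""
    table = preimages_of_sum(max_digit)
    n_inputs = (max_digit + 1) ** 2
    # For a uniform input, H(X | Y) = sum over outputs y of P(y) * log2(number of inputs mapping to y).
    return sum(len(pairs) / n_inputs * log2(len(pairs)) for pairs in table.values())


table = preimages_of_sum()
print(table[4])  # every input pair that yields 4, including (2, 2) and (3, 1)
print(f"{bits_lost():.3f} bits of input information lost per addition, on average")
```

Only the sums 0 and 18 identify their inputs uniquely; every other output is ambiguous, which is what makes the operation logically irreversible.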

In 1961, IBM physicist Rolf Landauer showed that when information is erased, as happens during such a calculation, the calculator's entropy decreases (because it loses information), so the entropy of its environment must increase.

As a result, erasing even a single bit of information requires releasing a small amount of heat into the environment.

Scientists have since sought to determine the energy cost of erasing information in a given system.

Landauer derived an equation for the minimum amount of heat that must be dissipated during erasure. In this study, the SFI duo found that most systems dissipate more.
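Landauer's bound is commonly written as Q ≥ k_B T ln 2 for each erased bit, where k_B is Boltzmann's constant and T is the temperature of the environment. The minimal sketch below evaluates that minimum; the 300 K room temperature and the one-gigabyte example (8 × 10⁹ bits) are illustrative choices, not figures from the study.

```python
from math import log

BOLTZMANN_K = 1.380649e-23  # J/K, exact value fixed by the 2019 SI redefinition


def landauer_limit_joules(n_bits, temperature_kelvin=300.0):
    """Minimum heat in joules dissipated when erasing n_bits: n * k_B * T * ln 2."""
    if n_bits < 0 or temperature_kelvin <= 0:
        raise ValueError("n_bits must be non-negative and temperature must be positive")
    return n_bits * BOLTZMANN_K * temperature_kelvin * log(2)


per_bit = landauer_limit_joules(1)
print(f"{per_bit:.3e} J per erased bit at 300 K")
print(f"{landauer_limit_joules(8e9):.3e} J to erase one gigabyte at 300 K")
```

The bound scales linearly with both the number of bits and the temperature. Real hardware dissipates far more than this minimum, which is the gap the study examines.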

Kolchinsky said, “The only way to achieve Landauer’s minimum loss of energy is to design a computer with a certain task in mind. If the computer carries out some other calculation, then it will generate additional entropy.” Kolchinsky and Wolpert have demonstrated, for example, that two computers might carry out the same calculation but differ in entropy production because of their expectations for inputs. The researchers call this a “mismatch cost,” or the cost of being wrong.

“It’s the cost between what the machine is built for and what you use it for.”
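The idea of a mismatch cost can be illustrated numerically. This sketch assumes the expression commonly used for it in this line of research: the drop in Kullback-Leibler divergence, D(p‖q) − D(Gp‖Gq), where p is the input distribution a device actually receives, q is the distribution it was optimized for, and G is the computation. We assume that form applies here; the function names and the example distributions are hypothetical.

```python
from math import log


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in nats; terms where p_i == 0 contribute nothing."""
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i > 0:
            if q_i == 0:
                return float("inf")
            total += p_i * log(p_i / q_i)
    return total


def apply_channel(matrix, dist):
    """Push an input distribution through a stochastic map: out[y] = sum_x matrix[y][x] * dist[x]."""
    return [sum(row[x] * dist[x] for x in range(len(dist))) for row in matrix]


def mismatch_cost(matrix, actual, designed_for):
    """Extra entropy production (in units of k_B) from running a process tuned for `designed_for` on `actual`."""
    before = kl_divergence(actual, designed_for)
    after = kl_divergence(apply_channel(matrix, actual), apply_channel(matrix, designed_for))
    return before - after


# Bit erasure: both input states 0 and 1 are sent to output state 0.
ERASE = [[1.0, 1.0],
         [0.0, 0.0]]

designed_for = [0.9, 0.1]  # input statistics the hypothetical device was built to expect
actual = [0.5, 0.5]        # input statistics it actually receives

print(mismatch_cost(ERASE, actual, designed_for))        # positive: the device pays for being wrong
print(mismatch_cost(ERASE, designed_for, designed_for))  # zero: no mismatch, no extra cost
```

For erasure, both outputs collapse to the same state, so the second divergence vanishes and the whole mismatch survives as extra entropy. For a logically reversible map such as the identity, the two divergences are equal and the mismatch cost is zero, which links the cost of being wrong to the loss of information about the initial state.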

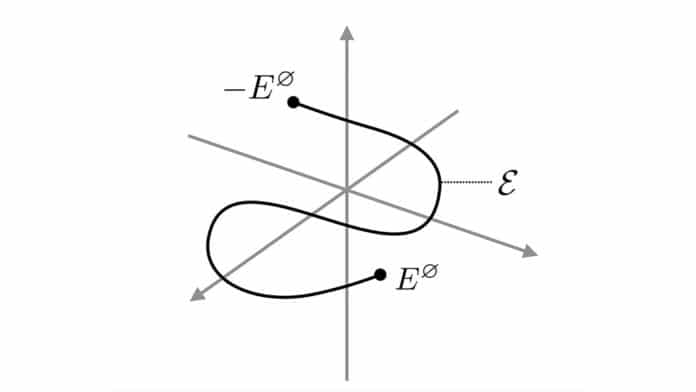

In previous studies, researchers showed that this mismatch cost arises in many kinds of systems, not just in information theory. They uncovered a fundamental relationship between thermodynamic irreversibility (the case in which entropy increases) and logical irreversibility (the case in computation in which the initial state is lost).

In this way, they were able to strengthen the second law of thermodynamics.

They also discovered that this fundamental relationship extends even more broadly than previously believed.

Kolchinsky said, “A better understanding of mismatch cost could lead to a better understanding of how to predict and correct those errors.”

“There’s this deep relationship between physics and information theory.”

Journal Reference:

- Artemy Kolchinsky and David H. Wolpert. Entropy production given constraints on the energy functions. *Physical Review E* 104, 034129. DOI: 10.1103/PhysRevE.104.034129