Humans learn to recognize the objects around them from an early age. Interacting with objects in our environment plays a vital role in the emergence of object perception and manipulation capabilities.

This interaction also teaches us through self-supervision: we choose an action and learn from its result. That comes naturally to humans, whereas in robotics it is an active research area, because it enables robots to learn without the need for large amounts of training data or manual supervision.

Building on the concept of object permanence, Google researchers have proposed a new system called Grasp2Vec, an algorithm for learning object representations.

Grasp2Vec is based on the intuition that an attempt to pick up anything provides several pieces of information — if a robot grasps an object and holds it up, the object had to be in the scene before the grasp.

Eric Jang, a software engineer in robotics at Google, and Coline Devin, a Berkeley Ph.D. student and former research intern, wrote, “In robotics, this type of … learning is actively researched because it enables robotic systems to learn without the need for large amounts of training data or manual supervision. By using this form of self-supervision, machines like robots can learn to recognize … objects by … visual change[s] in the scene.”

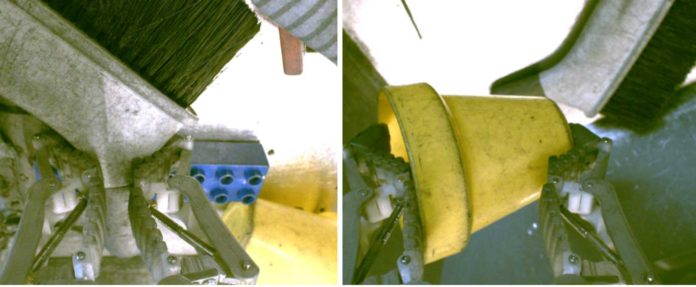

Collaborating with X Robotics, the researchers had a robotic arm grasp objects “unintentionally,” and that experience enabled it to learn a rich representation of objects. Those representations eventually led to “intentional grasping” of tools and toys chosen by the researchers.

The researchers then used reinforcement learning to encourage the arm to grasp objects, inspect them with its camera, and answer basic object recognition questions. They also implemented a perception system that extracts meaningful information about the items by analyzing a set of three images: an image before grasping, an image after grasping, and an isolated view of the grasped object.
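One way to read the three-image setup is as simple arithmetic on representations: whatever distinguishes the scene before the grasp from the scene after it should correspond to the grasped object. The toy sketch below illustrates that idea only. It is not the researchers' implementation; the hand-made vectors, the sum-of-objects scene embedding, and the names `OBJECT_VECTORS`, `embed_scene`, and `infer_grasped` are all assumptions made for illustration, whereas a real system would learn its representations from camera images.

```python
import math

# Hypothetical, hand-made object embeddings (assumption for illustration).
# A real system would learn these from images rather than hard-code them.
OBJECT_VECTORS = {
    "cup": [0.9, 0.1, 0.0],
    "brush": [0.1, 0.8, 0.3],
    "block": [0.0, 0.2, 0.9],
}


def embed_scene(objects):
    """Toy scene embedding: the sum of the vectors of the objects present."""
    total = [0.0, 0.0, 0.0]
    for name in objects:
        total = [t + v for t, v in zip(total, OBJECT_VECTORS[name])]
    return total


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def infer_grasped(pre_objects, post_objects):
    """Guess which object was removed by comparing pre- and post-grasp scenes."""
    pre = embed_scene(pre_objects)
    post = embed_scene(post_objects)
    # The difference between the two scene embeddings stands in for the
    # object that left the scene.
    diff = [p - q for p, q in zip(pre, post)]
    # Pick the known object whose vector points most nearly the same way.
    return max(OBJECT_VECTORS, key=lambda name: cosine(diff, OBJECT_VECTORS[name]))


grasped = infer_grasped(["cup", "brush", "block"], ["cup", "block"])
print(grasped)
```

In this toy version the isolated view of the grasped object plays the role of the entries in `OBJECT_VECTORS`: it gives the system something to compare the scene difference against, without any human-provided label.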

In tests, Grasp2Vec and the researchers’ novel policies achieved a success rate of 80 percent on objects seen during data collection and 59 percent on novel objects the robot had not encountered before.

Scientists noted, “We show how robotic grasping skills can generate the data used for learning object-centric representations. We then can use representation learning to ‘bootstrap’ more complex skills like instance grasping, all while retaining the self-supervised learning properties of our autonomous grasping system.”

“Going forward, we are excited not only for what machine learning can bring to robotics by way of better perception and control, but also what robotics can bring to machine learning in new paradigms of self-supervision.”