Individuals with debilitating neurodegenerative disease frequently experience communication loss, which has a negative impact on their quality of life. Decoding brain signals directly to allow neural voice prosthesis is one way to let communication return. Unfortunately, coarse neural recordings that fall short of capturing the complex spatiotemporal structure of human brain signals have limited decoding efforts.

To resolve this limitation, a collaborative team of Duke neuroscientists, neurosurgeons, and engineers have developed a speech prosthetic that can translate a person’s brain signals into what they’re trying to say. This technology might one day help people unable to talk due to neurological disorders regain the ability to communicate through a brain-computer interface.

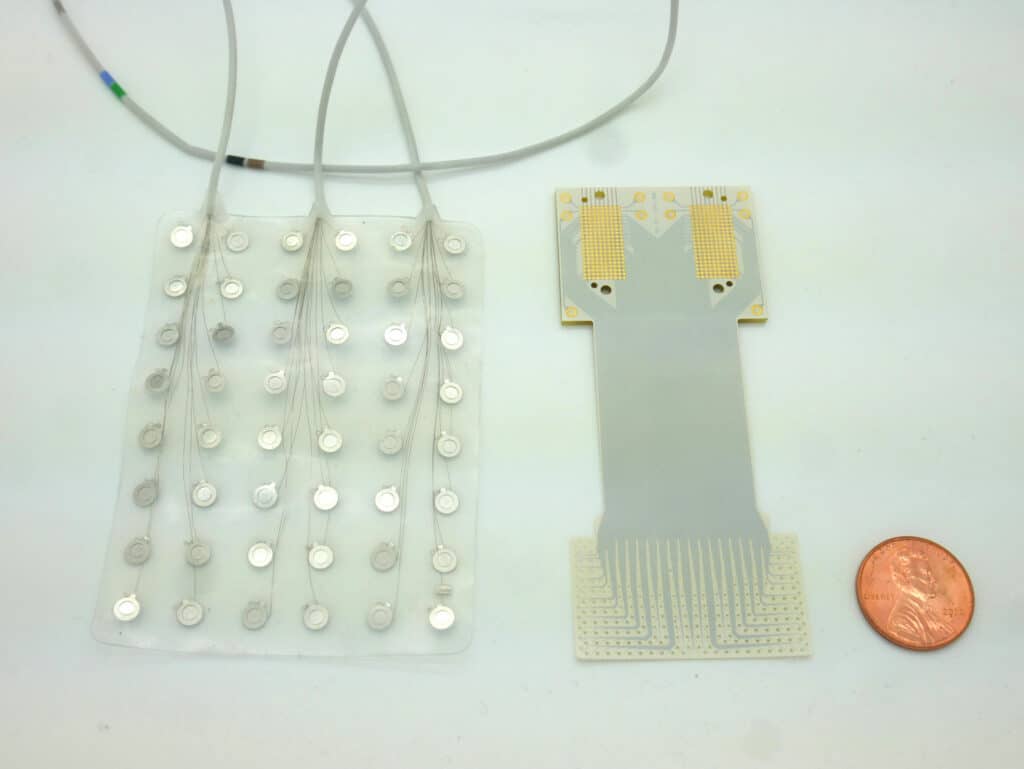

A remarkable 256 minuscule brain sensors were crammed onto a flexible, medical-grade plastic piece the size of a postage stamp by scientists. To make accurate predictions about intended speech, it is vital to separate signals from neighboring brain cells, as neurons that are only a few sand grains apart can have dramatically diverse activity patterns when coordinating speech.

After fabricating the new implant, scientists temporarily placed the device in patients who were undergoing brain surgery for some other condition, such as treating Parkinson’s disease or having a tumor removed.

Gregory Cogan, Ph.D., a professor of neurology at Duke University’s School of Medicine, said, “I like to compare it to a NASCAR pit crew. We didn’t want to add extra time to the operating procedure, so we had to be in and out within 15 minutes. As soon as the surgeon and the medical team said ‘Go!’ we rushed into action, and the patient performed the task.”

It was an easy listen-and-repeat exercise. After hearing a string of absurd phrases like “ava,” “kug,” or “vip,” participants had to say each one out loud. As the device coordinated the movement of around 100 muscles that move the lips, tongue, jaw, and larynx, it recorded activity from the speech motor cortex of each patient.

Next, using simply the recordings of brain activity, scientists loaded a machine learning system with the neural and speech data from the operating room to test how well it could identify the sound being created.

When a sound was the first of three sounds that made up a given nonsense word, such as the sound /g/ in the word “gak,” the decoder correctly identified it 84% of the time.

However, as the decoder separated sounds that came either before or after a nonsense word, accuracy decreased. It also has trouble matching similar sounds, such as /p/ and /b/.

In general, 40% of the time, the decoder was accurate. Even though that test result might not seem remarkable, it was pretty impressive considering that comparable brain-to-speech technical achievements need hours or even days of data to process.

Cogan said, “We’re now developing the same recording devices without wires. You’d be able to move around, and you wouldn’t have to be tied to an electrical outlet, which is exciting.”

Journal Reference:

- Duraivel, S., Rahimpour, S., Chiang, CH. et al. High-resolution neural recordings improve the accuracy of speech decoding. Nat Commun 14, 6938 (2023). DOI: 10.1038/s41467-023-42555-1