Google has recently launched the What-If Tool, a new feature of the open-source TensorBoard web application that lets users analyze a machine learning model without writing code. Given pointers to a TensorFlow model and a dataset, the What-If Tool offers an interactive visual interface for exploring model results.

Features of the What-If Tool include automatic visualization of your dataset using Facets, the ability to manually edit examples from your dataset and see the effect of those changes, and automatic generation of partial dependence plots, which show how the model’s predictions change as any single feature is changed.
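To make the idea of a partial dependence plot concrete, here is a minimal sketch in plain Python. It is not the tool's own implementation: `partial_dependence`, `toy_predict`, and the `age` and `hours` features are hypothetical names chosen for illustration. The sketch overwrites one feature with each value on a grid, leaves every other feature untouched, and averages the model's predictions.

```python
from statistics import mean


def partial_dependence(predict, rows, feature, grid):
    """Average prediction over `rows` when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        # Copy every row with the chosen feature overwritten; other features stay as-is.
        modified = [{**row, feature: value} for row in rows]
        curve.append((value, mean(predict(r) for r in modified)))
    return curve


# Hypothetical toy model: the score rises with age, plus a bonus for long hours.
def toy_predict(row):
    return 0.01 * row["age"] + (0.2 if row["hours"] > 40 else 0.0)


rows = [{"age": 30, "hours": 35}, {"age": 50, "hours": 45}]
pd_curve = partial_dependence(toy_predict, rows, "age", [20, 40, 60])

for value, avg in pd_curve:
    print(f"age={value}: average prediction {avg:.2f}")
```

Plotting the resulting pairs gives the kind of curve the tool draws for each feature.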

With the click of a button, you can compare a data point to the most similar point for which the model predicts a different outcome. Such points are called counterfactuals, and they can shed light on the decision boundaries of your model. Users can also edit a data point by hand and explore how the model’s prediction changes.

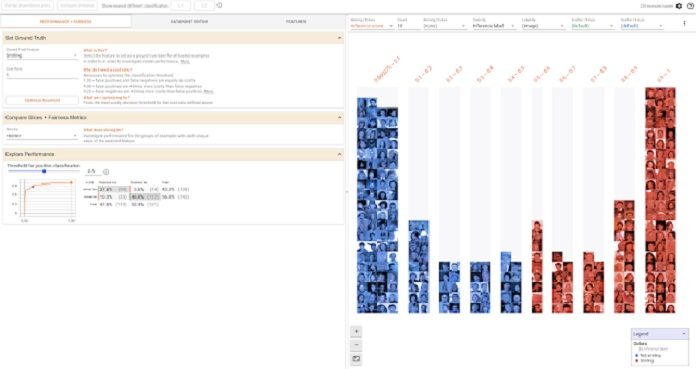
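The counterfactual lookup described above can be sketched as a nearest-neighbor search restricted to points with a different predicted label. This is an illustrative sketch only: it assumes numeric features and L1 distance, and `nearest_counterfactual`, `toy_classifier`, and the `income` and `debt` features are hypothetical.

```python
def nearest_counterfactual(predict, rows, index):
    """Return the index of the row closest to rows[index] whose predicted label differs."""
    target = rows[index]
    target_label = predict(target)

    def l1_distance(a, b):
        # Sum of absolute differences over all features (assumes numeric values).
        return sum(abs(a[k] - b[k]) for k in a)

    candidates = [
        (l1_distance(target, row), i)
        for i, row in enumerate(rows)
        if i != index and predict(row) != target_label
    ]
    # No counterfactual exists if every other point gets the same label.
    return min(candidates)[1] if candidates else None


# Hypothetical toy classifier used only for this example.
def toy_classifier(row):
    return "approve" if row["income"] - row["debt"] >= 20 else "deny"


rows = [
    {"income": 50, "debt": 10},
    {"income": 48, "debt": 30},
    {"income": 20, "debt": 15},
    {"income": 52, "debt": 12},
]
cf_index = nearest_counterfactual(toy_classifier, rows, 0)
print(rows[0], "->", rows[cf_index])
```

In practice, features on very different scales would need normalizing before distances are compared; the sketch skips that step for brevity.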

Moreover, the tool can be used to explore the effects of different classification thresholds, taking into account constraints such as different numerical fairness criteria.
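As a rough illustration of how a threshold interacts with a fairness criterion, the sketch below picks a separate threshold for each group so that every group ends up with roughly the same share of positive predictions. That criterion (demographic parity) is one example chosen here, not necessarily what the tool applies, and the function names, group names, and scores are all made up.

```python
def positive_rate(scores, threshold):
    """Fraction of scores classified as positive at the given threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


def parity_thresholds(scores_by_group, target_rate, candidates):
    """For each group, pick the candidate threshold whose positive rate is closest to target_rate."""
    return {
        group: min(candidates, key=lambda t: abs(positive_rate(scores, t) - target_rate))
        for group, scores in scores_by_group.items()
    }


# Hypothetical model scores for two subgroups.
scores_by_group = {
    "group_a": [0.2, 0.4, 0.6, 0.8],
    "group_b": [0.1, 0.2, 0.3, 0.5],
}
candidates = [0.25, 0.45, 0.65]

thresholds = parity_thresholds(scores_by_group, target_rate=0.5, candidates=candidates)
for group, t in thresholds.items():
    print(group, "threshold", t, "positive rate", positive_rate(scores_by_group[group], t))
```

With a single shared threshold of 0.45, the two groups in this toy data would receive positive predictions at different rates, which is the kind of trade-off a threshold explorer makes visible.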

The capabilities of the tool can be illustrated with a few example models. The first is a multiclass classification model, which predicts plant type from four measurements of a flower from the plant. Here the tool is helpful in showing the decision boundary of the model and what causes misclassifications.

The second is a model used for assessing algorithmic fairness across different subgroups. It was purposefully trained without any examples from a specific subset of the population, in order to show how the tool can help uncover such biases in models.

The third is a regression model that predicts a subject’s age from census information. Here the tool is helpful in showing the relative performance of the model across subgroups and how the different features individually affect the prediction.
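Comparing a regression model's performance across subgroups amounts to computing an error metric per group. The sketch below uses mean absolute error; the age model, the `occupation` and `years_worked` fields, and the data are hypothetical and stand in for real census features.

```python
from collections import defaultdict


def mae_by_subgroup(examples, predict, group_key, label_key):
    """Mean absolute error of `predict`, computed separately for each subgroup."""
    errors = defaultdict(list)
    for ex in examples:
        errors[ex[group_key]].append(abs(predict(ex) - ex[label_key]))
    return {group: sum(errs) / len(errs) for group, errs in errors.items()}


# Hypothetical toy regression model that guesses age from years worked.
def toy_age_model(ex):
    return 20 + 2 * ex["years_worked"]


examples = [
    {"occupation": "tech", "years_worked": 5, "age": 30},
    {"occupation": "tech", "years_worked": 10, "age": 42},
    {"occupation": "sales", "years_worked": 5, "age": 40},
    {"occupation": "sales", "years_worked": 15, "age": 44},
]

subgroup_mae = mae_by_subgroup(examples, toy_age_model, "occupation", "age")
for group, mae in subgroup_mae.items():
    print(f"{group}: mean absolute error {mae:.1f} years")
```

A large gap between groups, as in this toy data, is the signal that a model serves one subgroup worse than another.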

The tool was tested inside Google, where teams saw its immediate value. One team quickly found that their model was incorrectly ignoring an entire feature of their dataset, leading them to fix a previously undiscovered code bug. Another team used it to visually organize their examples from best to worst performance, leading them to discover patterns about the types of examples their model was underperforming on.