Our environment is filled with rich and dynamic acoustic information. While recent advances in learned implicit functions have led to increasingly high-quality representations of the visual world, there have not been commensurate advances in learning spatial auditory representations.

Recently, scientists at MIT and the MIT-IBM Watson AI Lab have developed a machine-learning model that can capture how any sound in a room will propagate through space. This enables the model to simulate what a listener would hear at different locations.

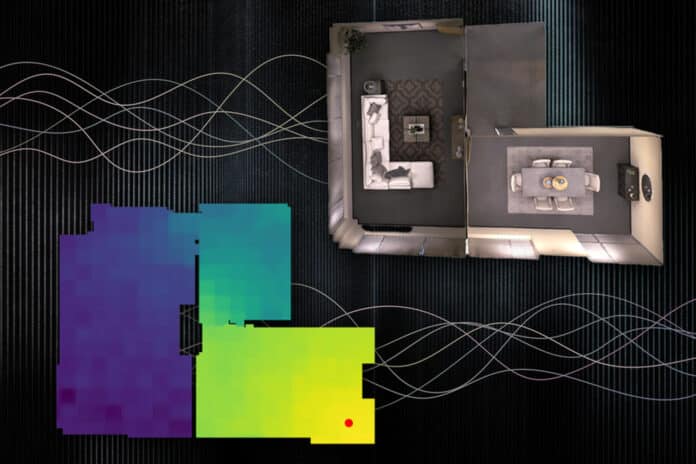

The model accurately captures the acoustics of a scene and can infer the underlying 3D geometry of a room from sound recordings. Just as humans use sound to infer the characteristics of their physical environment, the scientists can use the acoustic information their system captures to build accurate visual representations of a space.

Yilun Du, a grad student in the Department of Electrical Engineering and Computer Science (EECS) and co-author of a paper describing the model, said, “In addition to its potential applications in virtual and augmented reality, this technique could help artificial intelligence agents develop better understandings of the world around them. For instance, by modeling the acoustic properties of the sound in its environment, an underwater exploration robot could sense things farther away than it could with vision alone.”

“Most researchers have only focused on modeling vision so far. But as humans, we have multimodal perception. Not only is vision important, but the sound is also important. This work opens up an exciting research direction on better-utilizing sound to model the world.”

Modeling how sound travels through a scene is harder than modeling vision. Vision models benefit from a property known as photometric consistency, which does not apply to sound: the same object looks roughly the same when viewed from two different locations. With sound, however, changing location can produce an entirely different result because of obstructions, distance, and other factors. As a result, audio prediction is quite challenging.

The scientists overcame this problem by incorporating two properties of acoustics into their model: the reciprocal nature of sound (swapping the positions of the emitter and the listener leaves what is heard unchanged) and the influence of local geometric features.
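Reciprocity can be illustrated with a small sketch. The code below is not the authors' implementation; it assumes a hypothetical, deliberately asymmetric function `raw_response` standing in for a learned model, and shows one simple way to make such a function symmetric in the emitter and listener positions by averaging both orderings.

```python
import numpy as np

def raw_response(emitter, listener):
    """Hypothetical, deliberately asymmetric stand-in for a learned model."""
    emitter = np.asarray(emitter, dtype=float)
    listener = np.asarray(listener, dtype=float)
    return 2.0 * emitter.sum() + 0.5 * listener.sum()

def reciprocal_response(emitter, listener):
    """Average both orderings so swapping emitter and listener changes nothing."""
    return 0.5 * (raw_response(emitter, listener) + raw_response(listener, emitter))

a, b = (1.0, 2.0), (4.0, 0.5)
# The raw function depends on the ordering; the wrapped one does not.
print(raw_response(a, b) == raw_response(b, a))
print(reciprocal_response(a, b) == reciprocal_response(b, a))
```

Averaging is only one option; a model could also be encouraged to respect reciprocity during training, for example by presenting each pair of positions in both orders.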

To incorporate these two factors into their model, known as a neural acoustic field (NAF), they augment the neural network with a grid that captures objects and architectural features in the scene, such as doorways or walls. The model randomly samples points on that grid to learn the features at specific locations.
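The idea of a grid of local features can be sketched as follows. This is a minimal illustration under assumptions, not the NAF code: the grid size, the feature width, and the `local_feature` helper are hypothetical, and bilinear interpolation is used here simply as one common way to read a feature at a continuous position from a discrete grid.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 8x8 grid over a unit-square room, 4 feature values per grid point.
# In a trained model these values would be learned; here they are random.
grid = rng.normal(size=(8, 8, 4))

def local_feature(grid, x, y):
    """Bilinearly interpolate grid features at a position (x, y) in [0, 1]^2."""
    h, w, _ = grid.shape
    gx, gy = x * (w - 1), y * (h - 1)          # continuous grid coordinates
    x0, y0 = int(np.floor(gx)), int(np.floor(gy))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = gx - x0, gy - y0                  # fractional offsets inside the cell
    top = (1 - tx) * grid[y0, x0] + tx * grid[y0, x1]
    bottom = (1 - tx) * grid[y1, x0] + tx * grid[y1, x1]
    return (1 - ty) * top + ty * bottom

# Local features at an emitter and a listener position, which a network
# could receive as extra input alongside the positions themselves.
emitter_feat = local_feature(grid, 0.2, 0.7)
listener_feat = local_feature(grid, 0.9, 0.1)
network_input = np.concatenate([emitter_feat, listener_feat])
print(network_input.shape)
```

Because each lookup depends only on the four nearest grid points, the features at a position reflect nearby structure rather than distant parts of the room.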

Andrew Luo, the paper's first author, said, “If you imagine standing near a doorway, what most strongly affects what you hear is the presence of that doorway, not necessarily geometric features far away from you on the other side of the room. We found this information enables better generalization than a simple fully connected network.”

Scientists can provide the NAF with visual information about a scene and a few spectrograms that show what a piece of audio would sound like when the emitter and listener are located at specific positions around the room. The model then predicts what that audio would sound like if the listener moved to any point in the scene.

The NAF outputs an impulse response that captures how a sound should change as it propagates through the scene. The scientists then apply this impulse response to different sounds to hear how those sounds should change as a person moves through a room.
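Applying an impulse response to a sound is a convolution. The sketch below illustrates that step with NumPy; the impulse response here is hand-made (a direct path plus a single echo) rather than produced by a NAF, and the sample rate, echo delay, and test tone are all assumed values chosen for illustration.

```python
import numpy as np

sample_rate = 16_000  # assumed sample rate in Hz

# Hypothetical impulse response: direct sound plus one quieter echo 50 ms later.
impulse_response = np.zeros(sample_rate // 10)
impulse_response[0] = 1.0
echo_delay = int(0.05 * sample_rate)
impulse_response[echo_delay] = 0.4

# Dry source signal: a 0.25-second 440 Hz tone.
t = np.arange(int(0.25 * sample_rate)) / sample_rate
dry = np.sin(2 * np.pi * 440.0 * t)

# Convolving the dry signal with the impulse response gives the sound
# as it would be heard at the listener position.
wet = np.convolve(dry, impulse_response)
print(len(dry), len(impulse_response), len(wet))
```

The same impulse response can be convolved with any other dry recording, which is why predicting one impulse response per emitter and listener pair is enough to render arbitrary sounds at that pair of positions.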

The scientists found that their approach consistently produced more accurate sound models than other techniques that model acoustic data. Additionally, because it learned local geometric information, their model generalized to new locations in a scene better than other approaches.

Moreover, they found that applying the acoustic information their model learns to a computer vision model can lead to a better visual reconstruction of the scene.

Du says, “When you only have a sparse set of views, using these acoustic features enables you to capture boundaries more sharply, for instance. And maybe this is because to render the acoustics of a scene accurately, you have to capture the underlying 3D geometry of that scene.”

The researchers plan to further improve the model so that it can generalize to new scenes. They also aim to apply the method to more complex impulse responses and larger scenes, such as entire buildings or even a whole town or city.

Chuang Gan, a co-author of the paper, said, “This new technique might open up new opportunities to create an immersive multimodal experience in the metaverse application.”

Dinesh Manocha, the Paul Chrisman Iribe Professor of Computer Science and Electrical and Computer Engineering at the University of Maryland, said, “My group has done a lot of work on using machine-learning methods to accelerate acoustic simulation or model the acoustics of real-world scenes. This paper by Chuang Gan and his co-authors is a major step forward in this direction. In particular, this paper introduces a nice implicit representation that can capture how sound can propagate in real-world scenes by modeling it using a linear time-invariant system. This work can have many applications in AR/VR and real-world scene understanding.”

Journal Reference:

- Andrew Luo et al. Learning Neural Acoustic Fields. arXiv: 2204.00628