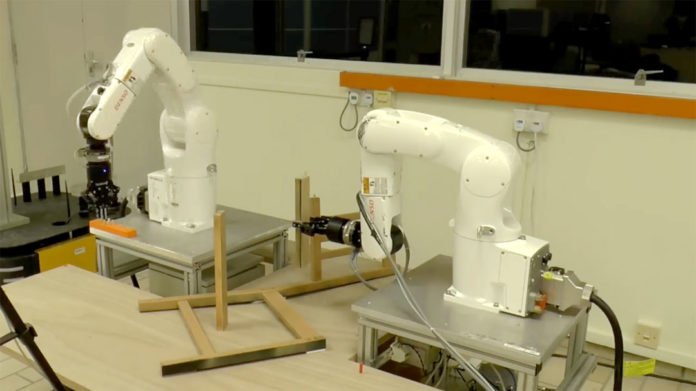

Scientists at the Nanyang Technological University, Singapore (NTU Singapore) have developed a robot that can autonomously assemble an IKEA chair without interruption. It consists of a 3D camera and two robotic arms equipped with grippers to pick up objects.

The robot relies on three algorithms, built on three different open-source libraries, to put the IKEA chair together.

Assistant Professor Pham Quang Cuong said, “For a robot, putting together an IKEA chair with such precision is more complex than it looks. The job of assembly, which may come naturally to humans, has to be broken down into different steps, such as identifying where the different chair parts are, the force required to grip the parts, and making sure the robotic arms move without colliding into each other. Through considerable engineering effort, we developed algorithms that will enable the robot to take the necessary steps to assemble the chair on its own.”

The robot assembled IKEA’s Stefan chair in 8 minutes and 55 seconds. Before the assembly, it took 11 minutes and 21 seconds to independently plan the motion pathways and 3 seconds to locate the parts.
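Adding the three reported phases gives the end-to-end time for one chair. The short Python sketch below simply sums the figures quoted above; the variable names are illustrative and not taken from the researchers' software.

```python
from datetime import timedelta

# Durations exactly as reported in the article.
planning = timedelta(minutes=11, seconds=21)  # planning the motion pathways
locating = timedelta(seconds=3)               # locating the parts
assembly = timedelta(minutes=8, seconds=55)   # physically assembling the chair

total = planning + locating + assembly
minutes, seconds = divmod(int(total.total_seconds()), 60)
print(f"Total: {minutes} min {seconds} s")    # Total: 20 min 19 s
```

Planning accounts for more than half of the total, which is worth keeping in mind when reading the team's stated goal of making the robot more autonomous.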

“We are looking to integrate more artificial intelligence into this approach to make the robot more autonomous so it can learn the different steps of assembling a chair through human demonstration or by reading the instruction manual, or even from an image of the assembled product.”

The robot is designed to mimic the generality of the human “hardware” used to assemble objects: the “eyes” are a 3D camera, and the “arms” are industrial robotic arms capable of six-axis motion. Each arm is equipped with parallel grippers to pick up objects. Force sensors mounted on the wrists determine how strongly the “fingers” are gripping and how powerfully they push objects into contact with each other.

The robot begins the assembly process by taking 3D photographs of the parts laid out on the floor to create a map of the estimated positions of the different parts. This is meant to replicate, as far as possible, the cluttered environment that results when people unbox the parts and prepare to put together a build-it-yourself chair. The challenge here is to localize the parts precisely enough in a cluttered environment, and to do so quickly and reliably.

Next, using algorithms developed by the team, the robot plans a two-handed motion that is fast and collision-free. This motion pathway has to be integrated with visual and tactile perception, grasping, and execution.

To ensure that the robotic arms can grip the pieces firmly and perform tasks such as inserting wooden plugs, the amount of force applied must be regulated. This is challenging because industrial robots, designed to be precise at positioning, are bad at regulating forces, Asst Prof Pham explained.
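To give a feel for what regulating force with a position-controlled robot involves, here is a minimal Python sketch of a proportional force loop. It is not the NTU team's controller: the function name, the linear-spring contact model, and every numeric value are assumptions chosen purely for illustration.

```python
def regulate_grip_force(target_force, stiffness=2000.0, gain=0.0002, steps=50):
    """Squeeze a simulated part until the sensed force approaches target_force.

    Hypothetical model: the part behaves like a linear spring, so
    force (N) = stiffness (N/m) * squeeze (m). The robot can only command
    position, so each step nudges the position in proportion to the force error.
    """
    squeeze = 0.0  # metres of compression into the part
    for _ in range(steps):
        measured = stiffness * max(squeeze, 0.0)  # simulated force-sensor reading
        error = target_force - measured           # how far off the grip force is
        squeeze += gain * error                   # proportional position correction
    return stiffness * max(squeeze, 0.0)


final_force = regulate_grip_force(10.0)
print(f"Grip force after regulation: {final_force:.3f} N")
```

With these assumed values the force error shrinks by a constant factor on every step, so the grip settles close to the 10 N target. A real robot also has to cope with sensor noise and parts of unknown stiffness, which is part of why the task is difficult.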

Currently, the robot is being used to explore dexterous manipulation, an area of robotics that requires precise control of forces and motions with fingers or specialized robotic hands. As a result, the robot is more human-like in the way it manipulates objects.

Pham explained, “One reason could be that complex manipulation tasks in human environments require many different skills. This includes being able to map the exact locations of the items, plan a collision-free motion path, and control the amount of force required. On top of these skills, you have to be able to manage the complex interactions between the robot and the environment.”

“The way we have built our robot, from the parallel grippers to the force sensors on the wrists, all work towards manipulating objects in a way humans would.”

The scientists are exploring whether the robot could be deployed for glass bonding, which could be useful in the automotive industry, and for drilling holes in metal components for the aircraft manufacturing industry.

The results are published in the journal Science Robotics.