For decades, robots in controlled environments like assembly lines have been able to pick up the same object over and over again. More recently, breakthroughs in computer vision have enabled robots to make basic distinctions between objects. Even then, though, the systems don’t truly understand objects’ shapes, so there is little the robots can do after a quick pick-up.

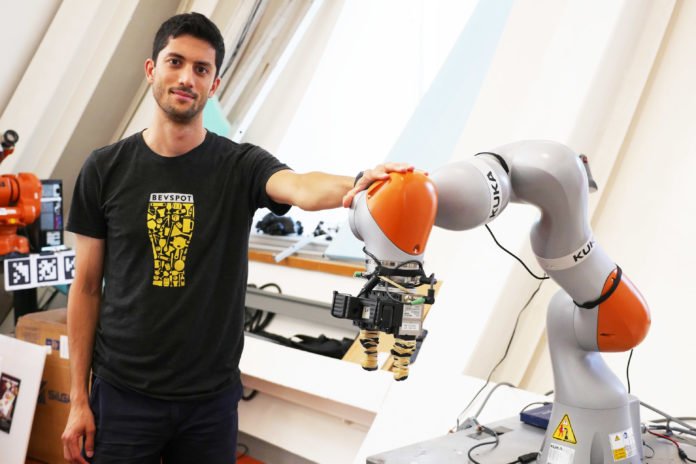

In a new study, MIT scientists have developed a system that lets robots inspect random objects and visually understand them well enough to accomplish specific tasks without ever having seen them before. The system, dubbed Dense Object Nets (DON), looks at objects as collections of points that serve as a kind of visual roadmap.

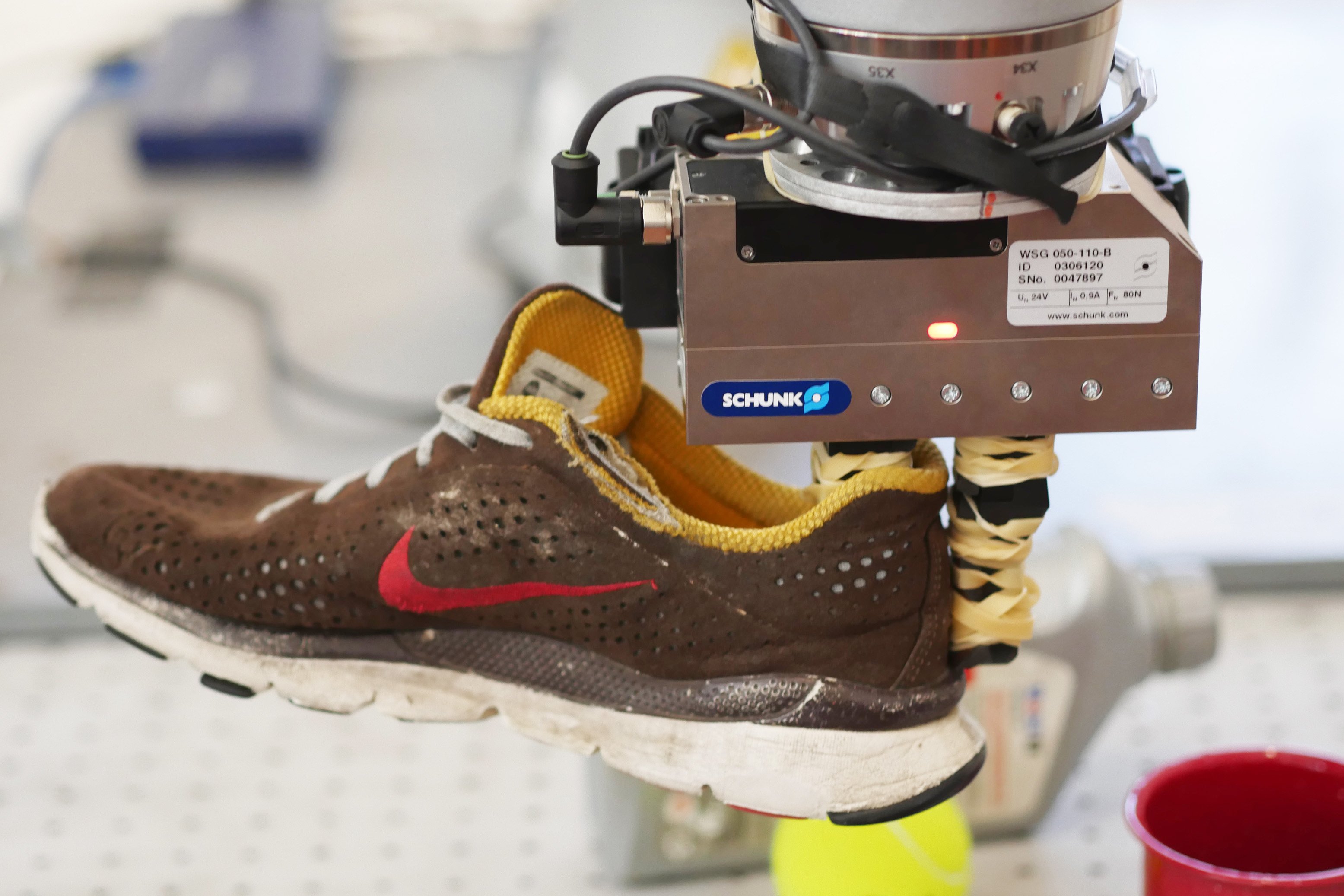

This approach lets robots better understand and manipulate items and, most importantly, allows them to pick up a specific object among a clutter of similar ones, a valuable skill for the kinds of machines that companies like Amazon and Walmart use in their warehouses.

PhD student Lucas Manuelli said, “Many approaches to manipulation can’t identify specific parts of an object across the many orientations that object may encounter. For example, existing algorithms would be unable to grasp a mug by its handle, especially if the mug could be in multiple orientations, like upright, or on its side.”

What’s also noteworthy is that none of the data was actually labeled by humans. Instead, the system is what the team calls “self-supervised,” not requiring any human annotations.

The system essentially creates a series of coordinates on a given object, which serve as a kind of visual roadmap, to give the robot a better understanding of what it needs to grasp, and where.

The team trained the system to look at objects as a series of points that make up a larger coordinate system. It can then map different points together to visualize an object’s 3-D shape, similar to how panoramic photos are stitched together from multiple photos. After training, if a person specifies a point on an object, the robot can take a photo of that object, and identify and match points to be able to then pick up the object at that specified point.
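The point-matching step described above can be illustrated with a toy sketch. The article does not give implementation details, so this assumes that every pixel in an image has already been assigned a small numeric vector (a "descriptor") that stays roughly the same for the same spot on the object across views. The function name `match_point` and the tiny hand-written images are hypothetical and are not taken from the DON system itself.

```python
import math

def match_point(ref_descriptor, descriptor_image):
    """Find the pixel in descriptor_image whose descriptor is nearest
    (Euclidean distance) to ref_descriptor. Returns ((row, col), distance)."""
    best_pixel, best_dist = None, math.inf
    for r, row in enumerate(descriptor_image):
        for c, desc in enumerate(row):
            dist = math.dist(ref_descriptor, desc)
            if dist < best_dist:
                best_pixel, best_dist = (r, c), dist
    return best_pixel, best_dist

# Assumed toy data: a 2x3 "image" where each pixel holds a 2-D descriptor.
reference_image = [
    [(0.0, 0.0), (0.1, 0.9), (0.5, 0.5)],
    [(0.9, 0.1), (1.0, 1.0), (0.2, 0.3)],
]
# A new view of the same object: similar descriptors, different pixel positions.
new_image = [
    [(0.52, 0.48), (0.0, 0.05), (0.88, 0.12)],
    [(0.21, 0.33), (0.1, 0.88), (0.97, 1.0)],
]

# A person specifies a point in the reference view (row 0, column 1) ...
ref_desc = reference_image[0][1]
# ... and the same point is located in the new view by descriptor matching.
pixel, dist = match_point(ref_desc, new_image)
print(pixel, round(dist, 3))
```

In a real system the descriptors would come from a trained network and the search would run over full-resolution images, but the lookup idea is the same: the specified point is found again by comparing per-pixel vectors rather than raw colors.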

When testing on a bin of different baseball hats, DON could pick out a specific target hat despite all of the hats having very similar designs, and despite never having seen pictures of the hats in its training data.