Researchers from Brown University and MIT have developed a method for helping robots plan multi-step tasks by constructing abstract representations of the world around them. Their study, published in the Journal of Artificial Intelligence Research, is a step toward building robots that can think and act more like people.

Planning is a monumentally difficult thing for robots, largely because of how they perceive and interact with the world. A robot’s perception of the world consists of nothing more than the vast array of pixels collected by its cameras, and its ability to act is limited to setting the positions of the individual motors that control its joints and grippers. It lacks an innate understanding of how those pixels relate to what we might consider meaningful concepts in the world.

“That low-level interface with the world makes it really hard to decide what to do,” said George Konidaris, an assistant professor of computer science at Brown and the lead author of the new study.

“Imagine how hard it would be to plan something as simple as a trip to the grocery store if you had to think about each and every muscle you’d flex to get there, and imagine in advance and in detail the terabytes of visual data that would pass through your retinas along the way. You’d immediately get bogged down in the detail. People, of course, don’t plan that way. We’re able to introduce abstract concepts that throw away that huge mass of irrelevant detail and focus only on what is important.”

Even state-of-the-art robots aren’t capable of that kind of abstraction. When we see demonstrations of robots planning for and performing multistep tasks, “it’s almost always the case that a programmer has explicitly told the robot how to think about the world in order for it to make a plan,” Konidaris said.

“But if we want robots that can act more autonomously, they’re going to need the ability to learn abstractions on their own.”

In computer science terms, these kinds of abstractions fall into two categories: “procedural abstractions” and “perceptual abstractions.” Procedural abstractions are programs made out of low-level movements composed into higher-level skills. An example would be bundling all the little movements needed to open a door (all the motor movements involved in reaching for the handle, turning it and pulling the door open) into a single “open the door” skill.

Once such a skill is built, you don’t need to worry about how it works. All you need to know is when to run it. Roboticists, including Konidaris himself, have been studying how to make robots learn procedural abstractions for years, he says.
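The idea of a procedural abstraction can be illustrated with a short Python sketch. It is only an illustration, not the researchers' code: the `Skill` class, the three step functions and the dictionary standing in for the robot are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in for a robot: a dictionary of simple state flags.
Robot = Dict[str, object]


@dataclass
class Skill:
    """A named bundle of low-level movements run in order."""
    name: str
    steps: List[Callable[[Robot], None]]

    def run(self, robot: Robot) -> None:
        for step in self.steps:
            step(robot)


# Low-level movements (assumed for illustration only).
def reach_for_handle(robot: Robot) -> None:
    robot["gripper_at"] = "handle"


def turn_handle(robot: Robot) -> None:
    robot["handle_turned"] = True


def pull_door(robot: Robot) -> None:
    robot["door_open"] = True


# The caller only needs the skill's name, not its internals.
open_door = Skill("open the door", [reach_for_handle, turn_handle, pull_door])

robot: Robot = {"gripper_at": "rest", "handle_turned": False, "door_open": False}
open_door.run(robot)
print(robot["door_open"])
```

Once `open_door` exists, code that uses it never touches the individual movements, which is the point of the abstraction.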

However, according to Konidaris, there has been less progress in perceptual abstraction, which has to do with helping a robot make sense of its pixelated surroundings. That is the focus of this new research.

“Our work shows that once a robot has high-level motor skills, it can automatically construct a compatible high-level symbolic representation of the world, one that is provably suitable for planning using those skills,” Konidaris said.

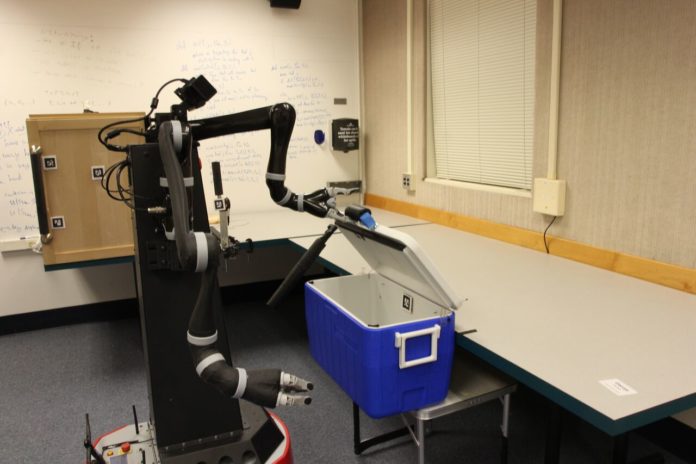

For the study, the researchers introduced a robot named Anathema Device (or Ana, for short) to a room containing a cupboard, a cooler, a switch that controls a light inside the cupboard, and a bottle that could be left in either the cooler or the cupboard. They gave Ana a set of high-level motor skills for manipulating the objects in the room: opening and closing both the cooler and the cupboard, flipping the switch and picking up a bottle.

Then they turned Ana loose to try out her motor skills in the room, recording the sensory data from her cameras and actuators before and after each skill execution. That data was fed into the machine-learning algorithm developed by the team.

The researchers showed that Ana was able to learn a very abstract description of the environment that contained only what was necessary for her to be able to perform a particular skill. For example, she learned that in order to open the cooler, she needed to be standing in front of it and not be holding anything (because she needed both hands to open the lid). She also learned the proper configuration of pixels in her visual field associated with the cooler lid being closed, which is the only configuration in which it’s possible to open it.

She learned similar abstractions associated with her other skills. She learned, for example, that the light inside the cupboard was so bright that it whited out her sensors. So in order to manipulate the bottle inside the cupboard, the light had to be off. She also learned that in order to turn the light off, the cupboard door needed to be closed, because the open door blocked her access to the switch. The resulting abstract representation distilled all that knowledge down from high-definition images to a text file just 126 lines long.
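One way to picture what such a representation encodes is as a table of preconditions per skill. The Python sketch below is a hand-written illustration under stated assumptions: the article does not describe the format of the 126-line file, the symbol and skill names here are invented, and in the study the symbols were learned from sensor data rather than typed in by a programmer.

```python
from typing import Dict

# A symbolic state maps (hypothetical) symbol names to truth values.
State = Dict[str, bool]

# Preconditions paraphrased from the article; all names are assumptions.
PRECONDITIONS: Dict[str, State] = {
    "open_cooler": {
        "in_front_of_cooler": True,
        "holding_something": False,  # both hands are needed for the lid
        "cooler_lid_closed": True,
    },
    "turn_light_off": {
        "cupboard_door_closed": True,  # an open door blocks the switch
    },
    "manipulate_bottle_in_cupboard": {
        "cupboard_light_on": False,  # the light whites out the sensors
    },
}


def can_run(skill: str, state: State) -> bool:
    """True if every precondition of the skill holds in the state.

    Symbols missing from the state are treated as unsatisfied.
    """
    return all(state.get(symbol) == wanted
               for symbol, wanted in PRECONDITIONS[skill].items())


state: State = {
    "in_front_of_cooler": True,
    "holding_something": False,
    "cooler_lid_closed": True,
    "cupboard_door_closed": True,
    "cupboard_light_on": True,
}

print(can_run("open_cooler", state))
print(can_run("manipulate_bottle_in_cupboard", state))
```

With the light on, the check for manipulating the bottle in the cupboard fails, mirroring the constraint Ana discovered by trial.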

Konidaris said, “These were all the important abstract concepts about her surroundings. Doors need to be closed before they can be opened. You can’t get the bottle out of the cupboard unless it’s open, and so on. And she was able to learn them just by executing her skills and seeing what happens.”

Once Ana was armed with her learned abstract representation, the researchers asked her to do something that required some planning: take the bottle from the cooler and put it in the cupboard.

As they hoped she would, Ana navigated to the cooler and opened it to reveal the bottle. But she didn’t pick it up. Instead, she planned ahead. She realized that if she had the bottle in her gripper, she wouldn’t be able to open the cupboard, because doing so requires both hands. So after she opened the cooler, she navigated to the cupboard.

There she saw that the light switch was in the “on” position, and she realized that opening the cupboard would block the switch. So she turned the switch off before opening the cupboard, returning to the cooler and retrieving the bottle, and finally placing it in the cupboard. In short, she planned ahead, identifying problems and fixing them before they could occur.
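The kind of reasoning described above can be reproduced with a very small planner once the world is expressed symbolically. The sketch below is an assumption-laden toy, not the study's planner: the state fields, action names and breadth-first search are choices made for this example, and the preconditions are hand-coded from the article's description rather than learned.

```python
from collections import deque
from typing import Callable, Dict, List, NamedTuple, Optional, Tuple


class State(NamedTuple):
    at: str              # "cooler" or "cupboard"
    cooler_open: bool
    cupboard_open: bool
    light_on: bool
    bottle: str          # "cooler", "gripper" or "cupboard"


# Each action is a (precondition, effect) pair over symbolic states.
Action = Tuple[Callable[[State], bool], Callable[[State], State]]

ACTIONS: Dict[str, Action] = {
    "go_to_cooler": (lambda s: s.at != "cooler",
                     lambda s: s._replace(at="cooler")),
    "go_to_cupboard": (lambda s: s.at != "cupboard",
                       lambda s: s._replace(at="cupboard")),
    # Opening either container needs both hands free.
    "open_cooler": (
        lambda s: s.at == "cooler" and not s.cooler_open and s.bottle != "gripper",
        lambda s: s._replace(cooler_open=True)),
    "open_cupboard": (
        lambda s: s.at == "cupboard" and not s.cupboard_open and s.bottle != "gripper",
        lambda s: s._replace(cupboard_open=True)),
    # An open cupboard door blocks access to the switch.
    "turn_light_off": (
        lambda s: s.at == "cupboard" and s.light_on and not s.cupboard_open,
        lambda s: s._replace(light_on=False)),
    "pick_up_bottle": (
        lambda s: s.at == "cooler" and s.cooler_open and s.bottle == "cooler",
        lambda s: s._replace(bottle="gripper")),
    # The cupboard light must be off to work inside the cupboard.
    "put_bottle_in_cupboard": (
        lambda s: (s.at == "cupboard" and s.cupboard_open
                   and not s.light_on and s.bottle == "gripper"),
        lambda s: s._replace(bottle="cupboard")),
}


def plan(start: State, goal: Callable[[State], bool]) -> Optional[List[str]]:
    """Breadth-first search; returns a shortest list of action names."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if goal(state):
            return path
        for name, (precondition, effect) in ACTIONS.items():
            if precondition(state):
                nxt = effect(state)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [name]))
    return None


start = State(at="cooler", cooler_open=False, cupboard_open=False,
              light_on=True, bottle="cooler")
steps = plan(start, lambda s: s.bottle == "cupboard")
print(steps)
```

In this toy model, any plan the search returns has to turn the light off before opening the cupboard and open the cupboard before picking up the bottle, because the preconditions leave no other order. The exact sequence may differ from the one Ana executed, since more than one shortest plan exists.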

Konidaris said, “We didn’t provide Ana with any of the abstract representations she needed to plan for the task. She learned those abstractions on her own, and once she had them, planning was easy. She found that plan in only about four milliseconds.”