Researchers have developed an unbiased network model to assess the health of populations in some U.S. cities, based only on the significant variables expressed in available data. Their unbiased, network-based probabilistic approach to mining big data could be used to assess other complex systems, such as ranking universities or evaluating ocean sustainability.

Societies today are data-rich, which can both empower and overwhelm. Sifting through this data to decide which variables to use when assessing something like the health of a city’s population is challenging. Researchers often choose these variables based on their own experience. They might decide that adult obesity rates, mortality rates, and life expectancy are important variables for calculating a generalized metric of residents’ overall health. But are these the best variables to use? Are there other, more important ones to consider?

Matteo Convertino of Hokkaido University in Japan and Joseph Servadio of the University of Minnesota in the U.S. have introduced a novel probabilistic method that allows the relationships between variables in big data for complex systems to be visualized. The approach is based on “maximum transfer entropy”, which probabilistically measures the strength of relationships between multiple variables over time.
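The entropy-based measure described above is usually formalized as transfer entropy: the extra information that the past of one variable provides about the next value of another, beyond what that second variable's own past already provides. The block below is a minimal sketch of plain lag-1 transfer entropy for discrete series. It is not the authors' maximum transfer entropy procedure, and the function name `transfer_entropy` and the synthetic series are assumptions made purely for illustration.

```python
import random
from collections import Counter
from math import log2


def transfer_entropy(source, target):
    """Lag-1 transfer entropy (in bits) from `source` to `target`.

    Both inputs are equal-length sequences of discrete symbols.
    Illustrative estimator only; not the method used in the study.
    """
    if len(source) != len(target):
        raise ValueError("series must have equal length")
    # Each triple is (target next, target now, source now).
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(triples)
    if n == 0:
        return 0.0
    c_full = Counter(triples)
    c_now = Counter((t_now, s_now) for _, t_now, s_now in triples)
    c_self = Counter((t_next, t_now) for t_next, t_now, _ in triples)
    c_target = Counter(t_now for _, t_now, _ in triples)
    te = 0.0
    for (t_next, t_now, s_now), count in c_full.items():
        p_joint = count / n
        # p(target next | target now, source now)
        p_with_source = count / c_now[(t_now, s_now)]
        # p(target next | target now)
        p_without_source = c_self[(t_next, t_now)] / c_target[t_now]
        te += p_joint * log2(p_with_source / p_without_source)
    return te


# Synthetic demo (made-up data): y copies x with a one-step delay.
rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(2000)]
y = [0] + x[:-1]
te_forward = transfer_entropy(x, y)   # x -> y, expected to be large
te_backward = transfer_entropy(y, x)  # y -> x, expected to be near zero
print(f"x -> y: {te_forward:.3f} bits, y -> x: {te_backward:.3f} bits")
```

The measure is asymmetric: because `y` is driven by `x` here, information flows from `x` to `y` but not the other way, which is what distinguishes it from a plain correlation.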

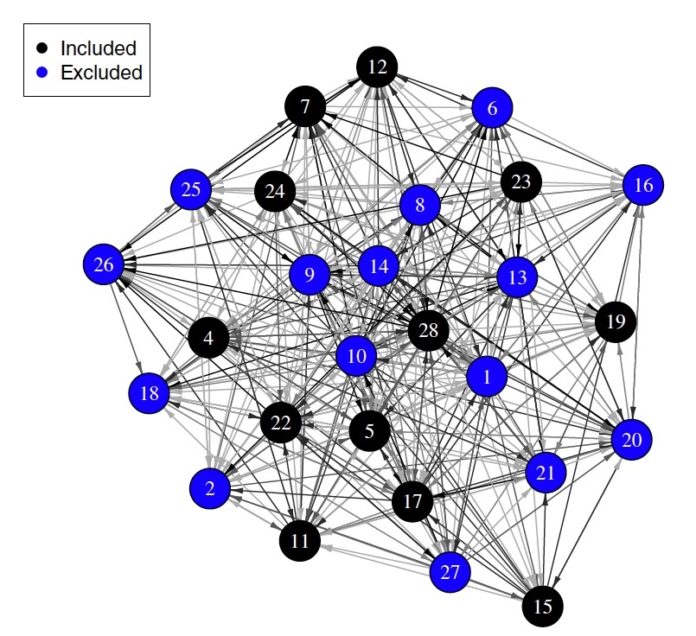

Using this method, Convertino and Servadio mined a large amount of U.S. health data to build a “maximum entropy network” (MENet): a model composed of nodes representing health-related variables and lines connecting those variables. The darker the line, the stronger the interdependence between two variables. This allowed the researchers to build an “Optimal Information Network” (OIN) by choosing the variables that had the most practical relevance for assessing the health status of populations in 26 U.S. cities from 2011 to 2014. By combining the data from each selected variable, the researchers were able to compute an “integrated health value” for each city. The higher the number, the less healthy a city’s population.
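The network-building and scoring steps described above can be illustrated with a toy pipeline. The article does not say how the researchers thresholded the network, ranked variables, or combined them, so every choice below is an assumption: the function names, the fixed threshold, ranking by total outgoing strength, and averaging min-max scaled values. The variable names, cities, and numbers are all made up.

```python
def build_network(strength, threshold):
    """Keep directed edges whose strength is at least `threshold` (assumed rule)."""
    return {(a, b): w for (a, b), w in strength.items()
            if a != b and w >= threshold}


def select_variables(edges, top_k):
    """Rank variables by total outgoing edge strength and keep the top_k (assumed rule)."""
    totals = {}
    for (src, _), w in edges.items():
        totals[src] = totals.get(src, 0.0) + w
    return sorted(totals, key=lambda v: (-totals[v], v))[:top_k]


def integrated_value(city_data, variables):
    """Mean of min-max scaled values over the selected variables.

    Assumes every variable is oriented so that higher means less healthy.
    """
    scores = {city: 0.0 for city in city_data}
    for var in variables:
        values = [row[var] for row in city_data.values()]
        lo, hi = min(values), max(values)
        span = hi - lo
        for city, row in city_data.items():
            scaled = (row[var] - lo) / span if span else 0.0
            scores[city] += scaled / len(variables)
    return scores


# Made-up pairwise strengths between three hypothetical variables.
strength = {("a", "b"): 0.5, ("a", "c"): 0.3, ("b", "c"): 0.05,
            ("c", "a"): 0.2, ("b", "a"): 0.1}
edges = build_network(strength, threshold=0.1)
selected = select_variables(edges, top_k=2)

# Made-up observations for three hypothetical cities.
city_data = {
    "city_1": {"a": 10, "b": 5, "c": 1},
    "city_2": {"a": 20, "b": 7, "c": 2},
    "city_3": {"a": 30, "b": 2, "c": 3},
}
scores = integrated_value(city_data, selected)
print(selected, scores)
```

As in the article, a higher score in this sketch corresponds to a less healthy population, but only because the toy variables are assumed to be oriented that way.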

They found that some cities, such as Detroit, had poor overall health during that time frame. Others, such as San Francisco, had low values, indicating more favorable health outcomes. Some cities, such as Philadelphia, showed high variability over the four-year period. Cross-sectional comparisons showed a tendency for California cities to score better than other parts of the country. Midwestern cities, including Denver, Minneapolis, and Chicago, appeared to perform poorly compared with other regions, contrary to national city rankings.

Convertino believes that methods like this, fed by large data sets and analyzed via automated stochastic computer models, could be used to optimize research and practice, for example by guiding optimal decisions about health. “These tools can be used by any country, at any administrative level, to process data in real time and help personalize medical efforts,” says Convertino.

The approach is not limited to health, however. “The model can be applied to any complex system to determine their Optimal Information Network, in fields from ecology and biology to finance and technology. Untangling their complexities and developing unbiased systemic indicators can help improve decision-making processes,” Convertino added.

The study is published online in Science Advances.