MIT scientists at the code.

After testing it on a set of benchmark algorithms that are standard in the field, scientists found that the system could allow taking benefit of all practical parallelism. The system enabled more than 10-fold speedup over existing systems that adopt the same practical parallelism strategy, with a maximum of 88-fold.

After decades of research, the best parallel implementation of one common max-flow algorithm achieves only an eightfold speed up when it’s run on 256 parallel processors. With the researchers’ new system, the improvement is 322-fold. And the most fascinating is, the program required only one-third as much code.

Daniel Sanchez, an assistant professor of electrical engineering and computer science at MIT said, “In a conventional parallel program, you need to divide your work into tasks. But because these tasks are operating on shared data, you need to introduce some synchronization to ensure that the data dependencies that these tasks have been respected. From the mid-90s to the late 2000s, there were multiple waves of research in what we call speculative architectures. What these systems do is execute these different chunks in parallel, and if they detect a conflict, they abort and roll back one of them.”

Although, the system uses a parallelism strategy known as speculative execution. In reality, scientists did few modifications in the system of a circuit already found in Swarm, the researchers’ earlier speculative-execution system. Every task executed in Swarm receives a time stamp, and if two tasks attempt to access the same memory location, the one with the later timestamp is aborted and re-executed.

Atomic tasks are often fairly substantial. But with speculative execution, it also introduces inefficiencies. Fractal has solved that problems too. With Fractal, a programmer can add a line of code to each subroutine within an atomic task that can be executed in parallel.

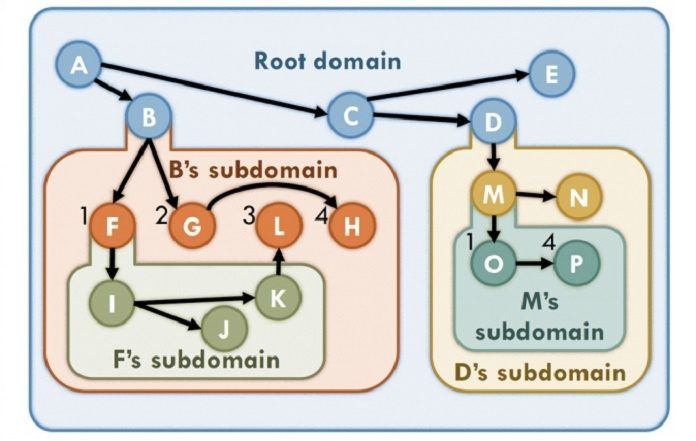

Fractal assigns each task in its own time stamp. But if an atomic task has a parallelizable subroutine, the subroutine’s time stamp includes that of the task that spawned it. And if the subroutine, in turn, has a parallelizable subroutine, the second subroutine’s time stamp includes that of the first, and so on. In this way, the ordering of the subroutines preserves the ordering of the atomic tasks.

Although the Fractal simply moves the front of the time-stamp train into storage. Meanwhile, the Fractal is always working only on the lowest-level and avoids the problem of aborting large, high-level atomic tasks.