Machine intelligence has become a driving factor in modern society. However, demand for it outpaces the underlying electronic technology because of fundamental physical limitations, such as the capacitive charging of wires, and because of system architectures that separate the storing and handling of data. Both factors are driving recent trends toward processor heterogeneity.

Optical alternatives to electronic hardware could help speed up machine learning by processing information in a simpler, non-iterative way. However, photonics-based machine learning is typically limited by the number of components that can be placed on photonic integrated circuits, which limits interconnectivity. Free-space spatial light modulators, meanwhile, are restricted to slow programming speeds.

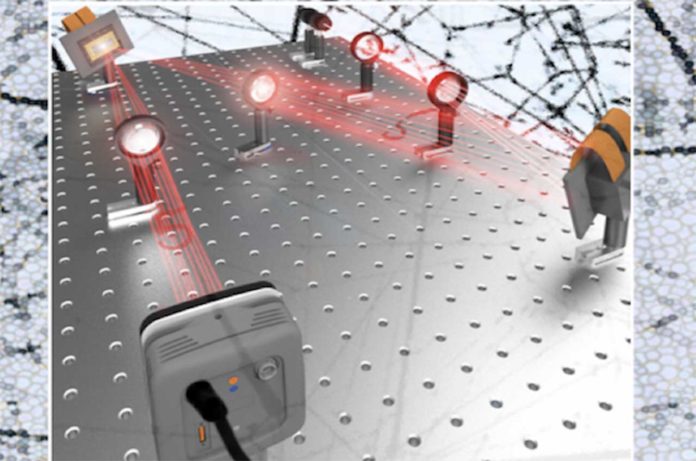

To achieve a breakthrough, scientists at the George Washington University, in collaboration with the University of California, Los Angeles, and the deep-tech venture startup Optelligence LLC, have developed an optical convolutional neural network accelerator capable of processing large amounts of information, on the order of petabytes per second. The accelerator harnesses the massive parallelism of light, taking a step toward a new era of optical signal processing for machine learning.

Scientists developed this system by using digital mirror-based technology instead of spatial light modulators to make the system 100 times faster.

The non-iterative timing of this processor, in combination with rapid programmability and massive parallelization, enables this optical machine learning system to outperform even best-in-class graphics processing units by over an order of magnitude, with room for further optimization beyond the initial prototype.

Unlike the current paradigm in electronic machine learning hardware, which processes information sequentially, this processor uses Fourier optics, a concept of frequency filtering that allows the neural network’s required convolutions to be performed as much simpler element-wise multiplications using the digital mirror technology.
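The idea that a convolution becomes an element-wise multiplication in the Fourier domain can be illustrated digitally. The following is a minimal sketch in plain Python, not the researchers' optical setup: the function names and the sample values are assumptions made for illustration, the transform is a naive one-dimensional DFT, and the code uses full complex values, so it does not model the amplitude-only constraint of the optical processor.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform of a sequence (O(n^2))."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]

def circular_convolve_direct(signal, kernel):
    """Circular convolution computed term by term in the signal domain."""
    n = len(signal)
    return [
        sum(signal[m] * kernel[(t - m) % n] for m in range(n))
        for t in range(n)
    ]

def circular_convolve_fourier(signal, kernel):
    """Same convolution via the Fourier domain: transform, multiply, invert."""
    signal_spectrum = dft(signal)
    kernel_spectrum = dft(kernel)
    # The convolution reduces to one element-wise product per frequency.
    product = [s * k for s, k in zip(signal_spectrum, kernel_spectrum)]
    # Inputs are real, so the imaginary parts are only rounding noise.
    return [value.real for value in idft(product)]

# Hypothetical sample data; the kernel is zero-padded to the signal length.
signal = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, 0.25, 0.0, 0.25]

direct = circular_convolve_direct(signal, kernel)
fourier = circular_convolve_fourier(signal, kernel)
```

Both routes give the same result up to floating-point rounding. In a digital computer the transforms themselves cost extra work; the appeal of the optical approach described here is that the transform and the multiplication are carried out by the optics on the whole input at once.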

Volker Sorger, associate professor of electrical and computer engineering at the George Washington University, said, “This massively parallel amplitude-only Fourier optical processor is heralding a new era for information processing and machine learning. We show that training this neural network can account for the lack of phase information.”

Puneet Gupta, professor & vice chair of computer engineering at UCLA, said, “Optics allows for processing large-scale matrices in a single time-step, which allows for new scaling vectors of performing convolutions optically. This can have significant potential for machine learning applications, as demonstrated here.”

Hamed Dalir, Co-founder, Optelligence LLC, said, “This prototype demonstration shows a commercial path for optical accelerators ready for several applications like network-edge processing, data-centers, and high-performance compute systems.”

Journal Reference:

- Mario Miscuglio, Zibo Hu, Shurui Li, Jonathan K. George, Roberto Capanna, Hamed Dalir, Philippe M. Bardet, Puneet Gupta, and Volker J. Sorger, “Massively parallel amplitude-only Fourier neural network,” Optica 7, 1812-1819 (2020). DOI: 10.1364/OPTICA.408659