MIT engineers have developed a new virtual-reality training system for drones, called “Flight Goggles,” that enables a vehicle to “see” a rich, virtual environment while flying in an empty physical space. The system acts as a virtual testbed for any number of environments and conditions.

Training drones to fly fast is a crash-prone exercise that can have engineers repairing or replacing vehicles with frustrating regularity. The system could significantly reduce the number of crashes that drones experience in actual training sessions.

Sertac Karaman and his colleagues will present details of their virtual training system at the IEEE International Conference on Robotics and Automation next week. Co-authors include Thomas Sayre-McCord, Winter Guerra, Amado Antonini, Jasper Arneberg, Austin Brown, Guilherme Cavalheiro, Dave McCoy, Sebastian Quilter, Fabian Riether, Ezra Tal, Yunus Terzioglu, and Luca Carlone of MIT’s Laboratory for Information and Decision Systems, along with Yajun Fang of MIT’s Computer Science and Artificial Intelligence Laboratory, and Alex Gorodetsky of Sandia National Laboratories.

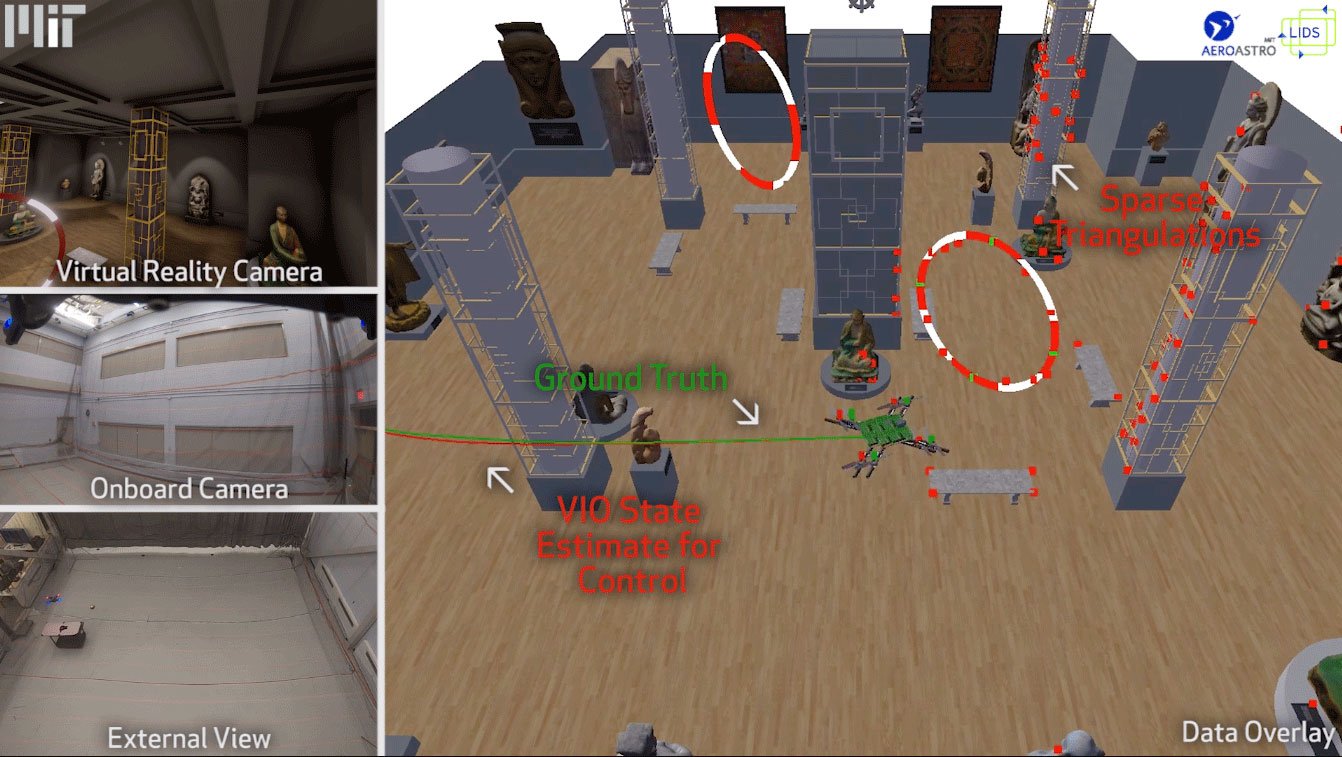

The system consists of a motion capture system, an image rendering program, and electronics that enable the team to quickly process images and transmit them to the drone.

Using the image rendering program, researchers can draw up photorealistic scenes, such as a loft apartment or a living room, and beam these virtual images to the drone as it flies through the empty facility.
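
The flow described here (track the drone, render the scene from its viewpoint, send the image back) can be sketched roughly as follows. This is only an illustrative outline, not the team's software: the `Pose` type, `render_view`, and `run_loop` are hypothetical names, and the "rendered frame" is a plain string standing in for a photorealistic image.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    """Drone position (meters) and heading (radians), as a motion capture system might report it."""
    x: float
    y: float
    z: float
    yaw: float


def render_view(pose: Pose) -> str:
    """Hypothetical renderer: returns a label standing in for an image of the virtual scene."""
    return f"frame@({pose.x:.2f},{pose.y:.2f},{pose.z:.2f},yaw={pose.yaw:.2f})"


def run_loop(poses):
    """For each tracked pose, render the virtual view and 'transmit' it to the drone."""
    sent = []
    for pose in poses:
        frame = render_view(pose)  # image from the drone's point of view
        sent.append(frame)         # placeholder for the link to the drone
    return sent


# Two made-up poses a few centimeters apart, as if sampled on consecutive frames.
tracked = [Pose(0.0, 0.0, 1.0, 0.0), Pose(0.03, 0.0, 1.0, 0.0)]
frames = run_loop(tracked)
print(len(frames))  # 2
```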

The drone can process the virtual images at a rate of about 90 frames per second, around three times as fast as the human eye can see and process images. To enable this, the team custom-built circuit boards that integrate a powerful embedded supercomputer, along with an inertial measurement unit and a camera. They fit this hardware into a small, 3-D-printed nylon and carbon-fiber-reinforced drone frame.
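
To give a sense of what 90 frames per second means in practice, the short calculation below works out the time available per frame and how far the drone travels between frames. It is a back-of-the-envelope sketch: the 2.3 meters per second speed is the figure the article reports for the window experiment, and the function names are illustrative.

```python
FRAME_RATE_HZ = 90.0   # image rate reported in the article
SPEED_M_PER_S = 2.3    # flight speed reported for the window experiment


def frame_budget_ms(rate_hz: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / rate_hz


def travel_per_frame_cm(speed_m_per_s: float, rate_hz: float) -> float:
    """Distance the drone covers between consecutive frames, in centimeters."""
    return speed_m_per_s / rate_hz * 100.0


budget = frame_budget_ms(FRAME_RATE_HZ)
travel = travel_per_frame_cm(SPEED_M_PER_S, FRAME_RATE_HZ)
print(f"{budget:.1f} ms per frame")        # 11.1 ms per frame
print(f"{travel:.1f} cm between frames")   # 2.6 cm between frames
```

Each image therefore has to be rendered, transmitted, and processed in roughly 11 milliseconds, during which the drone moves only a few centimeters.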

The researchers carried out a set of experiments, including one in which the drone learned to fly through a virtual window about twice its size. The window was set within a virtual living room.

As the drone flew in the actual, empty test facility, the researchers beamed images of the living room scene, from the drone’s perspective, back to the vehicle. As the drone flew through this virtual room, the researchers tuned a navigation algorithm, enabling the drone to learn on the fly.

Over 10 flights, the drone, flying at around 2.3 meters per second (5 miles per hour), successfully flew through the virtual window 361 times, only “crashing” into the window three times.

In a final test, the team set up an actual window in the test facility and turned on the drone’s onboard camera to enable it to see and process its actual surroundings. Using the navigation algorithm that the researchers tuned in the virtual system, the drone, over eight flights, was able to fly through the real window 119 times, only crashing or requiring human intervention six times.

In other words, the drone does the same thing in reality that it learned to do in the virtual environment.
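
The reported counts can be turned into success rates for a quick comparison of the virtual and real trials. The sketch below assumes that the crashes (and, for the real window, the human interventions) are counted separately from the successful passes, so the total number of attempts is their sum. The article does not state this explicitly.

```python
def success_rate(successes: int, failures: int) -> float:
    """Fraction of attempts that succeeded, assuming attempts = successes + failures."""
    return successes / (successes + failures)


virtual = success_rate(361, 3)  # virtual window: 361 passes, 3 "crashes"
real = success_rate(119, 6)     # real window: 119 passes, 6 crashes or interventions

print(f"virtual window: {virtual:.1%}")  # virtual window: 99.2%
print(f"real window:    {real:.1%}")     # real window:    95.2%
```

Under that assumption, the drone succeeded on about 99 percent of virtual attempts and about 95 percent of real ones.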

Karaman said, “We think this is a game-changer in the development of drone technology, for drones that go fast. If anything, the system can make autonomous vehicles more responsive, faster, and more efficient.”

The system is highly malleable. For instance, researchers can pipe in their own scenes or layouts in which to train drones, including detailed, drone-mapped replicas of actual buildings, something the team is considering doing with MIT’s Stata Center. The training system may also be used to test out new sensors, or specifications for existing sensors, to see how they might handle on a fast-flying drone.