Machine that interacts with real-world objects should infer properties about a 3D scene from observations of the 2D images it’s trained on. Scientists used neural networks for the same. However, these machine learning methods aren’t fast enough to make them feasible for many real-world applications.

Scientists at MIT and elsewhere have come up with a solution. They have created a new technique to represent 3D scenes from images about 15,000 times faster than some existing models.

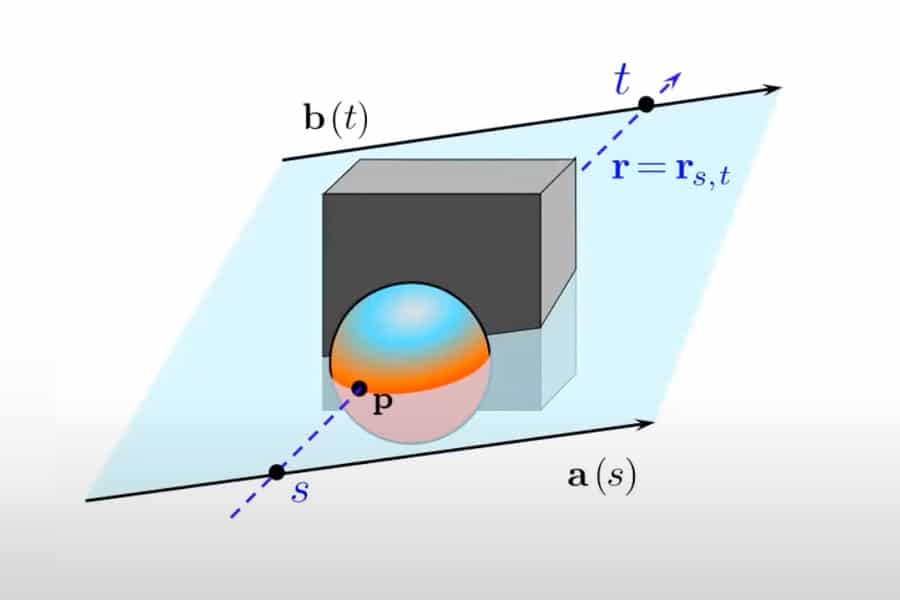

The new light-field networks (LFNs) represent a scene as a 360-degree light field. The light field function describes all the light rays in a 3D space, flowing through every point and in every direction.

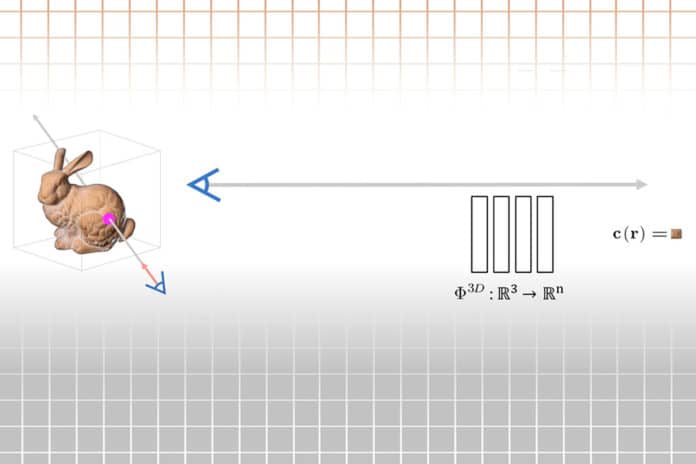

The technique reconstructs the light field after observing an image for once. Later, scientists can render 3D scenes at real-time frame rates. Encoding the light field into a neural network-enabled faster rendering of the underlying 3D scene from an image.

Vincent Sitzmann, a postdoc in the Computer Science and Artificial Intelligence Laboratory (CSAIL), said, “The big promise of these neural scene representations is to use them in vision tasks. I give you an image, and from that image, you create a representation of the scene, and then everything you want to reason about you do in the space of that 3D scene.”

The technique represents the light field of a 3D scene. It then directly maps each camera ray in the light field to the color observed by that ray. By implementing unique properties of light fields, the LFN renders a ray after only a single evaluation.

Sitzmann said, “With other methods when you do this rendering, you have to follow the ray until you find the surface. You have to do thousands of samples because that is what it means to find a surface. And you’re not even done yet because there may be complex things like transparency or reflections. With a light field, once you have reconstructed the light field, which is a complicated problem, rendering a single ray takes a single sample of the representation because the representation directly maps a ray to its color.”

The LFN arranges every camera ray utilizing its “Plücker facilitates.” The Plücker facilitates addressing a line in 3D space depending on its direction and distance from its starting place. The framework processes the Plücker coordinates of every camera ray where it hits a pixel to render an image.

The LFN computes the scene’s geometry by mapping each ray using Plücker coordinates. Parallax is the difference in the apparent position of an object when viewed from two different lines of sight. The LFN can tell the depth of objects in a scene due to parallax and uses this information to encode a scene’s geometry and appearance.

Reconstructing light fields requires neural networks to learn about the structures of light fields. Accordingly, scientists trained their models with several images of simple scenes of cars and chairs.

Co-lead author Semon Rezchikov, a postdoc at Harvard University, said, “There is an intrinsic geometry of light fields, which is what our model is trying to learn. You might worry that light fields of cars and chairs are so different that you can’t learn some commonality between them. But it turns out, if you add more kinds of objects, as long as there is some homogeneity, you get a better and better sense of how light fields of general objects look so that you can generalize about classes.”

During experiments, the LFNs could render scenes at more than 500 frames per second, about three orders of magnitude faster than other methods. As the technique is less memory-intensive, it requires only about 1.6 megabytes of storage instead of 146 megabytes for a popular baseline method.

Sitzmann said, “Light fields were proposed before, but back then, they were intractable. Now, with these techniques that we used in this paper, for the first time, you can both represent these light fields and work with these light fields. It is an interesting convergence of the mathematical models and the neural network models that we have developed coming together in this application of representing scenes so machines can reason about them.”

Scientists are now working to make their model more robust.

Journal Reference:

- Vincent Sitzmann, Semon Rezchikov, William T. Freeman, Joshua B. Tenenbaum, Fredo Durand et al. Light Field Networks: Neural Scene Representations with Single-Evaluation Rendering. arXiv:2106.02634