Long-distance photography on Earth is hard. Capturing enough light from a distant subject is difficult, atmospheric distortions can ruin the image, and air pollution adds another serious obstacle, especially in cities. Together, these factors make it very difficult to capture a usable image of anything more than a few kilometers away.

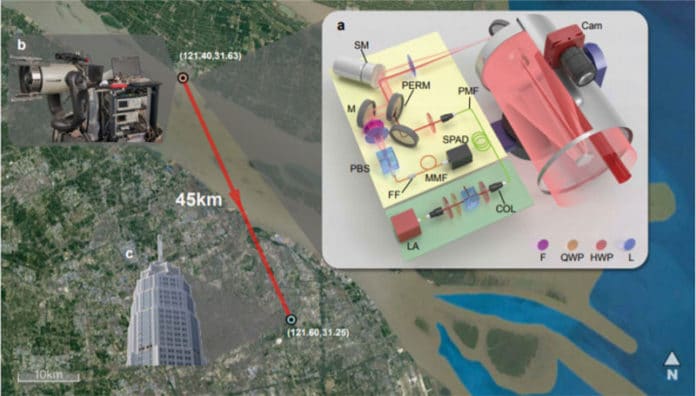

Researchers from the University of Science and Technology of China in Shanghai have now developed a lidar-based system that cuts through city smog to resolve human-sized features at vast distances. The system can image a subject 45 kilometers (28 miles) away, even through thick pollution.

What is this new system?

The camera combines detectors sensitive enough to register a single photon with an algorithm that ‘knits’ sparse data points together into a high-resolution image. Like conventional lidar, it illuminates the subject with laser light and builds an image from the light reflected back. Because the system supplies its own illumination and needs only a handful of returning photons, it can record images even through the smog that plagues densely populated cities.
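The paper's reconstruction algorithm is considerably more sophisticated, but the basic idea of combining sparse photons across neighboring pixels can be sketched in a few lines. The Python below is a hypothetical illustration, not the authors' method: it assumes photon arrival times have already been sorted into integer time bins, and both the input format and the `pooled_depth_bins` name are invented for this example. Each pixel's depth bin is taken as the most common bin among the photons in its neighborhood, so a pixel that caught only a background photon inherits the signal bin shared by its neighbors.

```python
from collections import Counter


def pooled_depth_bins(photons, width, height, radius=1):
    """Estimate a time bin per pixel by pooling photons from the
    (2*radius+1) x (2*radius+1) neighborhood and taking the most common bin.

    photons: dict mapping (x, y) -> list of integer time-bin indices
             (hypothetical format; a missing pixel means no detected photons).
    Returns a dict mapping (x, y) -> estimated bin, or None if no photons nearby.
    """
    estimates = {}
    for y in range(height):
        for x in range(width):
            pooled = Counter()
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    pooled.update(photons.get((x + dx, y + dy), []))
            estimates[(x, y)] = pooled.most_common(1)[0][0] if pooled else None
    return estimates


# Toy 5x5 scene: a flat target in time bin 40. Half the pixels caught one
# signal photon; the other half caught one background photon in another bin.
WIDTH, HEIGHT = 5, 5
scene = {}
for y in range(HEIGHT):
    for x in range(WIDTH):
        if (x + y) % 2 == 0:
            scene[(x, y)] = [40]                       # signal photon
        else:
            scene[(x, y)] = [(7 * x + 13 * y) % 100]   # background photon

pixelwise_est = pooled_depth_bins(scene, WIDTH, HEIGHT, radius=0)
pooled_est = pooled_depth_bins(scene, WIDTH, HEIGHT, radius=1)
print(pixelwise_est[(1, 0)], pooled_est[(1, 0)])  # 7 40
```

With `radius=0` the estimator only looks at each pixel's own photons, so pixel (1, 0) reports its background bin; with `radius=1` every pixel recovers bin 40.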

To make the system work better in urban areas, the team used an infrared laser with a wavelength of 1550 nanometers, a repetition rate of 100 kilohertz, and a modest average power of 120 milliwatts. At this wavelength, the team can filter out solar photons, and the system is also eye-safe.
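The laser figures above translate into a per-pulse budget with two lines of arithmetic: energy per pulse is average power divided by repetition rate, and the photon count is that energy divided by the energy of one 1550 nm photon, E = hc/λ. A short Python sketch, using only the numbers quoted in the article and exact SI constants:

```python
# Physical constants (exact SI values)
H = 6.62607015e-34     # Planck constant, J*s
C = 299_792_458.0      # speed of light, m/s

wavelength = 1550e-9   # m
rep_rate = 100e3       # pulses per second
avg_power = 120e-3     # W, average optical power

pulse_energy = avg_power / rep_rate          # J per pulse
photon_energy = H * C / wavelength           # J per photon, E = h*c/lambda
photons_per_pulse = pulse_energy / photon_energy

print(f"pulse energy: {pulse_energy * 1e6:.2f} uJ")
print(f"photon energy: {photon_energy:.3e} J")
print(f"photons per pulse: {photons_per_pulse:.2e}")
```

Each pulse carries about 1.2 µJ, or roughly 9×10^12 photons. Only a tiny fraction of those return from a target 45 km away, which is why single-photon detection matters here.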

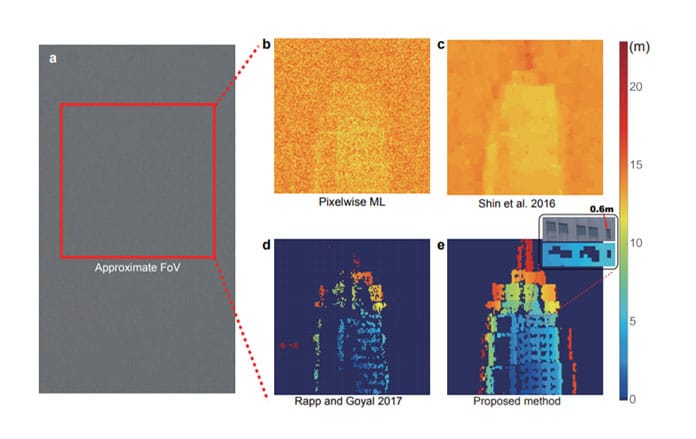

a, Photograph of the target taken with a standard astronomical camera. This photograph is substantially blurred due to the inadequate spatial resolution and the air turbulence in the urban environment. The red rectangle indicates the approximate LiDAR field of view (FoV). b–e, The reconstruction results obtained by using the pixelwise maximum likelihood (ML) method, the photon-efficient algorithm by Shin et al. [23], the unmixing algorithm by Rapp and Goyal [26], and the proposed algorithm, respectively. The single-photon LiDAR recorded an average PPP (photons per pixel) of ∼2.59, and the SNR (signal-to-noise ratio) was ∼0.03. The calculated relative depth for each individual pixel is given by the false color (see color scale on right). The proposed algorithm performs much better than the other state-of-the-art photon-efficient computational algorithms and provides super-resolution sufficient to clearly resolve the 0.6-m-wide windows (see expanded view in inset of panel e).
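The caption's baseline, pixelwise maximum likelihood, estimates each pixel's depth from that pixel's photons alone. Below is a minimal Python sketch of such an estimator, written under assumptions the article does not state: a Gaussian pulse with known timing jitter, a uniform background across the detection gate, known expected signal and background counts, and a plain grid search over delay. The simulator and all function names are hypothetical; this is not the code used in the paper.

```python
import math
import random

C = 299_792_458.0  # speed of light, m/s


def simulate_pixel(rng, depth, n_signal, n_background, gate, sigma):
    """Hypothetical simulator: photon arrival times (s) within a detection gate
    [0, gate] for one pixel, with Gaussian timing jitter sigma on the signal."""
    tau = 2.0 * depth / C  # round-trip delay measured from the gate start
    signal = [rng.gauss(tau, sigma) for _ in range(n_signal)]
    background = [rng.uniform(0.0, gate) for _ in range(n_background)]
    return signal + background


def log_likelihood(times, tau, sigma, n_signal, n_background, gate):
    """Poisson-process log-likelihood of the arrival times, dropping terms that
    do not depend on tau (valid when the pulse lies well inside the gate)."""
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    background_rate = n_background / gate  # background photons per second
    total = 0.0
    for t in times:
        pulse = norm * math.exp(-0.5 * ((t - tau) / sigma) ** 2)
        total += math.log(n_signal * pulse + background_rate)
    return total


def ml_depth(times, sigma, n_signal, n_background, gate, steps=2000):
    """Grid-search maximum-likelihood estimate of depth for a single pixel."""
    best_tau, best_ll = 0.0, -math.inf
    for k in range(steps + 1):
        tau = gate * k / steps
        ll = log_likelihood(times, tau, sigma, n_signal, n_background, gate)
        if ll > best_ll:
            best_tau, best_ll = tau, ll
    return C * best_tau / 2.0


rng = random.Random(0)
gate, sigma = 1e-6, 1e-9  # 1 us gate (~150 m of depth), 1 ns timing jitter
times = simulate_pixel(rng, depth=75.0, n_signal=20, n_background=20,
                       gate=gate, sigma=sigma)
est = ml_depth(times, sigma, 20, 20, gate)
print(f"estimated depth: {est:.2f} m")
```

With 20 signal photons the pixelwise estimate lands within a few centimeters of the true 75 m. In the 45 km test, by contrast, each pixel averaged only about 2.6 photons at an SNR of about 0.03; if SNR is read as the ratio of signal to background photons, that is well under 0.1 signal photons per pixel, so most pixels hold no signal at all. This is why the better-performing algorithms in the figure generally draw on information from neighboring pixels rather than treating each pixel in isolation.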

The system sends and receives photons through the same optical apparatus, and the reflected photons are detected by a commercial single-photon detector. To create a 2D image, the researchers scan the field of view with a piezo-controlled mirror that tilts up, down, and side to side. By also changing the detector's gate timing, the system can build a 3D image. This kind of computational imaging lets the researchers create images from relatively small sets of data.
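Gate timing matters at this range because of simple arithmetic. At a 100 kHz repetition rate, pulses leave every 10 µs, so the unambiguous range is only about 1.5 km, while a photon's round trip to a target 45 km away takes about 300 µs. The Python sketch below assumes the detector gate is referenced to the most recently emitted pulse; the helper names are hypothetical, and the article does not describe the paper's actual gating scheme.

```python
C = 299_792_458.0  # speed of light, m/s


def gate_timing(distance_m, rep_rate_hz):
    """Return (pulses_elapsed, delay_s): how many laser periods pass during the
    round trip, and the leftover delay after the most recent pulse."""
    period = 1.0 / rep_rate_hz
    round_trip = 2.0 * distance_m / C
    pulses_elapsed = int(round_trip // period)
    delay = round_trip - pulses_elapsed * period
    return pulses_elapsed, delay


def distance_from_timing(pulses_elapsed, delay_s, rep_rate_hz):
    """Invert gate_timing: recover distance from the pulse count and delay."""
    round_trip = pulses_elapsed / rep_rate_hz + delay_s
    return C * round_trip / 2.0


n, delay = gate_timing(45_000.0, 100e3)
print(n, f"{delay * 1e9:.1f} ns")
print(f"unambiguous range: {C / (2 * 100e3) / 1e3:.2f} km")
```

Roughly 30 further pulses leave the laser before a photon from the first one returns, so the gate must be set by a delay of about 208 ns relative to the most recent pulse, and the distance is recovered only once the pulse count is known.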

To test the system, the team set up the camera on the 20th floor of a building on Chongming Island in Shanghai and pointed it at the Pudong Civil Aviation Building across the river, some 45 km away.
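To put "human-sized features at 45 km" in perspective, a small-angle calculation shows how tiny the 0.6-m-wide windows mentioned in the figure caption appear from the test site. This Python sketch uses only the distance and window width given in the article:

```python
import math

distance = 45_000.0  # m, range to the Pudong Civil Aviation Building
feature = 0.6        # m, width of the windows resolved in the reconstruction

theta = feature / distance                # small-angle approximation, radians
arcsec = math.degrees(theta) * 3600.0     # same angle in arcseconds

print(f"angular size: {theta * 1e6:.1f} urad ({arcsec:.2f} arcsec)")
```

Each window subtends only about 13 microradians, or under 3 arcseconds.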

Where can we use this system?

This lidar-based approach could be very important for autonomous cars, which depend on cameras to identify vehicles and pedestrians and predict their movement, but those cameras can be rendered useless by severe pollution. Heavily polluted cities are also exactly the places where vehicle automation could help improve air quality.

In addition, many automakers around the world are planning fleets of autonomous taxis, which would reduce the need for privately owned cars and therefore cut pollution on city streets.

Journal Reference

- Z.-P. Li et al., "Single-photon computational 3D imaging at 45 km," Photonics Research (2020). DOI: 10.1364/PRJ.390091