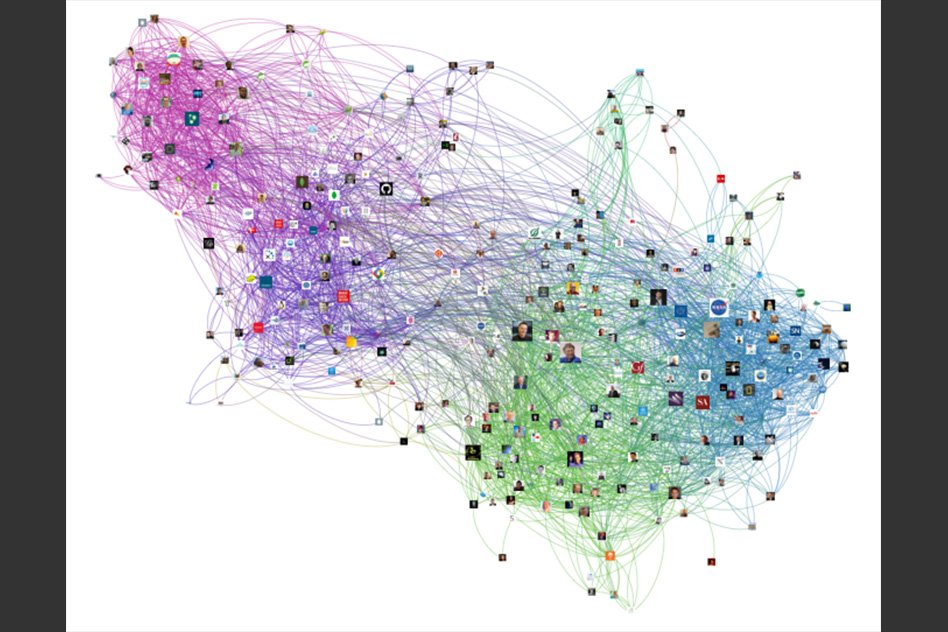

In the data structure, graphs are structures of nodes and connecting lines that shows map scores of complex data relationships. Studying graphs is essential for applications including ranking web pages, analyzing social networks for political insights, or plotting neuron structures in the brain.

Sometimes graph can reach terabytes in size. In such cases, they need to be processed in expensive dynamic random access memory (DRAM) across multiple power-hungry servers.

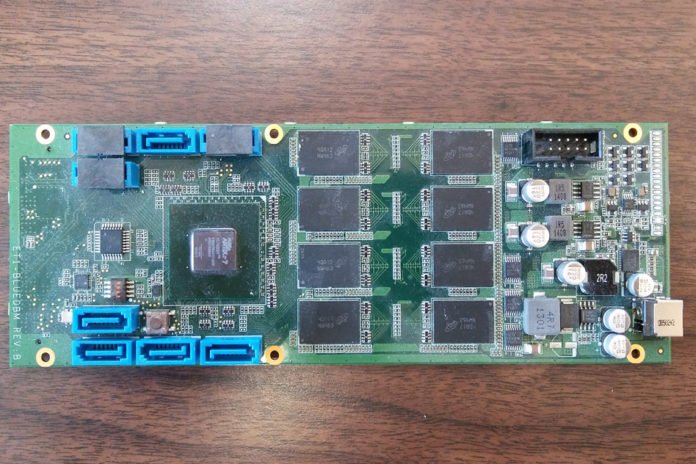

Now, MIT scientists have developed a device that uses cheap flash storage to process massive graphs using only a single personal computer. The device consists of a flash chip array and computation “accelerator,” that helps flash achieves DRAM-like performance.

The algorithm used in the device sorts all access requests for graph data into a sequential order that flash can access quickly and easily. It then combines few requests to lessen the overhead — the consolidated processing time, memory, bandwidth, and other computing assets — of sorting.

Scientists tested their device by adding the massive Web Data Commons Hyperlink Graph, which has 3.5 billion nodes and 128 billion connecting lines. Using two of their devices, they found that they could process massive graphs — up to 4 billion nodes and 128 billion connecting lines.

Sang-Woo Jun, a CSAIL graduate student and first author on a paper describing the device said, “The bottom line is that we can maintain performance with much smaller, fewer, and cooler — as in temperature and power consumption — machines.”

Co-author Arvind, the Johnson Professor in Computer Science Engineering said, “Graph processing is such a general idea. What does page ranking have in common with gene detection? For us, it’s the same computation problem — just different graphs with different meanings. The type of application someone develops will determine the impact it has on society.”

The device basically works on a “sort-reduce” algorithm which uses flash as the primary storage source: waste. The algorithm takes all direct access requests and sorts them in sequentially by identifiers, which demonstrate the goal of the request- for example, grouping together all updates for node A, all for node B, and so on. Flash would then be able to access kilobyte-sized chunks of thousands of requests, making it undeniably productive.

In order to save processing power and bandwidth, the algorithm characterizes the data into different small groups. Whenever the algorithm notes matching identifiers, it sums those into a single data packet — such as A1 and A2 becoming A3. It continues doing so, creating increasingly smaller packets of data with matching identifiers, until it produces the smallest possible packet to sort. This drastically reduces the number of duplicate requests to access.

The device is also composed of an accelerator that acts as a midway point between the host and flash chips, executing all computation for the algorithm.

Arvind said, “Accelerators are supposed to help the host computer, but we’ve come so far [with the computations] that the host becomes unimportant.”

Keshav Pingali, a professor of computer science at the University of Texas at Austin said, “The MIT work shows a new way to perform analytics on very large graphs: Their work exploits flash memory to store the graphs and exploits ‘field-programmable gate arrays’ [custom integrated circuits] in an ingenious way to perform both the analytics and the data processing required to use flash memory effectively.”

The paper is being presented at the International Symposium on Computer Architecture (ISCA).