Imagine a robot leaving you a handwritten note at work, or drawing a diagram to explain a concept. That scenario has now come a step closer to reality.

A team of computer scientists at Brown University has created an algorithm that enables a robot to copy what we write and draw. The development could open new lines of communication between robots and their human colleagues and collaborators.

The algorithm lets the robot put pen to paper, writing words with stroke patterns similar to those of human handwriting.

How does the algorithm work?

The algorithm uses deep learning networks to analyze a handwritten word or sketch and infer the pen strokes that were probably used to create it. It then reproduces those strokes to imitate the word or sketch.

The algorithm uses two different models of the image it is trying to replicate. The first is a global model that considers the image as a whole. Using this model, the algorithm identifies a likely starting point for the first stroke. Once the stroke has begun, the algorithm switches to the second model, which examines the image pixel by pixel to determine where the stroke should go and how long it should be. When the stroke is complete, the algorithm returns to the global model to find the next starting point, and the process repeats until the image is finished.
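The alternation between the two models can be illustrated with a toy sketch. This is not the team's implementation: their models are learned networks, while the version below swaps in hand-written rules purely to show the control flow. The names `pick_start`, `next_step`, and `trace_strokes` are hypothetical, and the image is assumed to be a small grid of text in which `#` marks ink.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]  # (row, column)


def pick_start(ink: List[List[bool]]) -> Optional[Point]:
    """Stand-in for the global model: scan the whole image and
    return the first pixel that still has ink, or None if none is left."""
    for r, row in enumerate(ink):
        for c, has_ink in enumerate(row):
            if has_ink:
                return (r, c)
    return None


def next_step(ink: List[List[bool]], pos: Point) -> Optional[Point]:
    """Stand-in for the pixel-by-pixel model: look only at the eight
    neighbours of the current pen position and move to one with ink."""
    r, c = pos
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            nr, nc = r + dr, c + dc
            if 0 <= nr < len(ink) and 0 <= nc < len(ink[nr]) and ink[nr][nc]:
                return (nr, nc)
    return None  # no ink nearby, so the stroke ends here


def trace_strokes(image: List[str]) -> List[List[Point]]:
    """Alternate between the two stand-in models until no ink remains."""
    ink = [[ch == "#" for ch in row] for row in image]
    strokes: List[List[Point]] = []
    while True:
        start = pick_start(ink)      # global view: where to begin
        if start is None:
            break                    # image fully reproduced
        ink[start[0]][start[1]] = False
        stroke = [start]
        pos = start
        while True:
            step = next_step(ink, pos)   # local view: where to go next
            if step is None:
                break
            ink[step[0]][step[1]] = False  # mark the pixel as drawn
            stroke.append(step)
            pos = step
        strokes.append(stroke)
    return strokes


if __name__ == "__main__":
    demo = [
        "###..",
        ".....",
        "..#..",
        "..#..",
    ]
    for stroke in trace_strokes(demo):
        print(stroke)
```

Each inner list is one continuous stroke, which mirrors the article's description of the pen moving until a stroke ends and the global view choosing where to start again. A learned model would pick more natural starting points and stroke directions than these fixed scanning rules do.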

“Just by looking at a target image of a word or sketch, the robot can reproduce each stroke as one continuous action,” said Atsunobu Kotani, an undergraduate student at Brown who led the algorithm’s development. “That makes it hard for people to distinguish if it was written by the robot or actually written by a human.”

Algorithm Testing:

To demonstrate the algorithm, the team had a robot write the word “hello” in 10 different languages that use different character sets, copying examples handwritten by several people. The languages included Greek, Hindi, Urdu, Chinese, and Yiddish. The robot’s success was a little surprising, because the algorithm had initially been trained only on Japanese characters.

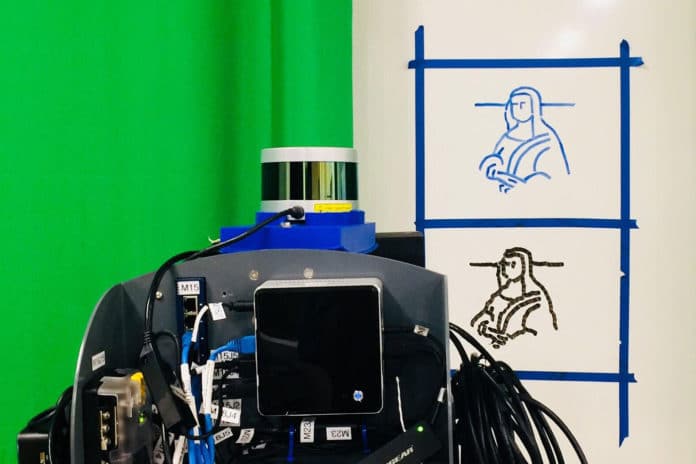

In other words, pen in hand, the robot was able to reproduce words it had never seen before. It also reproduced some rough line sketches, including one of the Mona Lisa.

“I want a robot to be able to do everything a person can do,” said Stefanie Tellex, an assistant professor of computer science at Brown. “I’m particularly interested in a robot that can use language. Writing is a way that people use language, so we thought we should try this.”

“A lot of the existing work in this area requires the robot to have information about the stroke order in advance,” Tellex said. “If you wanted the robot to write something, somebody would have to program the stroke orders each time. With what Atsu has done, you can draw whatever you want and the robot can reproduce it. It doesn’t always do the perfect stroke order, but it gets pretty close.”

Journal Reference

- Atsunobu Kotani, Stefanie Tellex; Teaching Robots To Draw. IEEE DOI: 10.1109/ICRA.2019.8793484