Considered a subset of AI, machine learning and neural networks are at the cutting edge. Rather than programming a computer to complete each task it needs to do, the idea behind machine learning is to equip ground- or space-based computer processors with algorithms that can learn from data, finding and recognizing patterns and trends faster, more accurately, and without bias.

Specialists at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, think scientists and engineers could benefit from this technology, often referred to as machine learning or neural networks.

With funding from several NASA research programs, including the Earth Science Technology Office, or ESTO, Goddard engineers and scientists are researching some of those applications individually or in partnerships with academia and private industry. Their projects run the gamut, everything from how machine learning could help in making real-time crop forecasts or locating wildfires and floods to identifying instrument anomalies and even suitable landing sites for a robotic craft.

Since joining Goddard a couple of years ago, James MacKinnon, a Goddard computer engineer, has emerged as one of the technology’s most fervent champions. One of the main projects he took on involved teaching algorithms how to identify wildfires using remote-sensing images collected by the Terra spacecraft’s Moderate Resolution Imaging Spectroradiometer instrument.

His neural network accurately detected fires 99 percent of the time. He has since expanded the research to include data gathered by the Joint Polar Satellite System’s Visible Infrared Imaging Radiometer Suite.

MacKinnon also hopes to ultimately deploy a constellation of CubeSats, all equipped with machine-learning algorithms embedded within sensors. With such a capability, the sensors could identify wildfires and send the data back to Earth in real time, giving firefighters and others up-to-date information that could significantly improve firefighting efforts.

He is also developing machine-learning techniques to identify single-event upsets in spaceborne electronic devices, which can result in data anomalies, and compiling a library of machine-learning computer models, dataset-generation tools, and visualization aids to make it easier for others to use machine-learning techniques for their missions.

MacKinnon said, “A huge chunk of my time has been spent convincing scientists that these are valid methods for analyzing the massive amounts of data we generate.”

Goddard researcher Matt McGill doesn’t need convincing. An expert in lidar techniques for measuring clouds and the tiny particles that make up haze, dust, air pollutants, and smoke, McGill is partnering with Slingshot Aerospace. This California-based company is developing platforms that draw data from many types of sensors and use machine-learning algorithms to extract information.

Under the ESTO-funded effort, McGill is providing Slingshot with data he gathered with the Cloud-Aerosol Transport System, or CATS, instrument, which retired late last year after spending 33 months aboard the International Space Station. There, CATS measured the vertical structure of clouds and aerosols, which occur naturally during volcanic eruptions and dust storms or anthropogenically through the burning of oil, coal, and wood.

A Slingshot-developed machine-learning algorithm is ingesting that data so that it can learn and ultimately begin to recognize patterns, trends, and occurrences that are difficult to capture with standardized processing algorithms.

McGill said, “The idea is that algorithms, once trained, can recognize signals in hours rather than days.”

“While CATS was roughly the size of a refrigerator, future systems must be much smaller, capable of flying on a constellation of SmallSats to collect simultaneous, multipoint measurements. However, as instruments get smaller, the data can potentially be noisier due to smaller collection apertures. We have to get smarter in how we analyze our data and we need to develop the capability to generate true real-time data products.”

Getting smarter in data analysis is also driving Goddard heliophysicist Antti Pulkkinen and engineer Ron Zellar. Pulkkinen, for example, began investigating whether solar storms were causing otherwise healthy whales, dolphins, and porpoises (collectively known as cetaceans) to strand along coastal areas worldwide. The investigation found no correlation with solar storms, but it did find a link between stranding events in Cape Cod, Massachusetts, and wind strength.

Zellar said, “We can’t assume a causal relationship. That’s what we’re trying to find.”

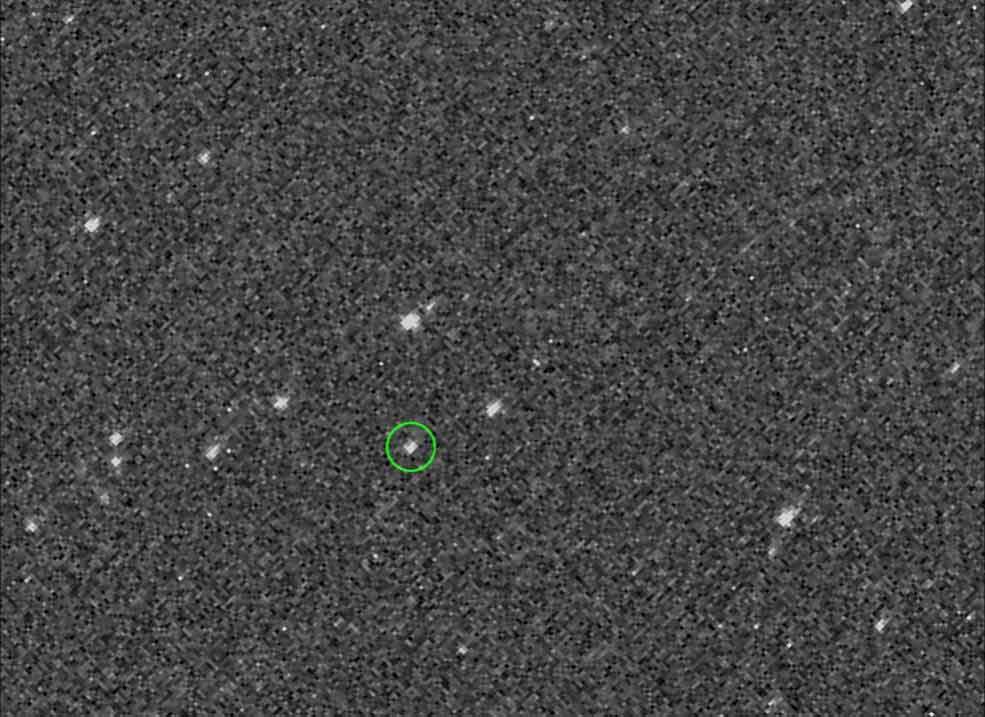

In November, the OSIRIS-REx mission is scheduled to begin a series of complex maneuvers that will take the spacecraft closer to asteroid Bennu so that it can begin characterizing the body and taking images that will inform the best location for collecting a sample and returning it to Earth for analysis. This will require a large number of high-resolution images taken from different angles and then processed manually by a team of experts on the ground.

Under a NASA-funded research effort involving Goddard scientists, Dante Lauretta, a University of Arizona professor and OSIRIS-REx principal investigator, and Chris Adami, a machine-learning expert at Michigan State University, a team is investigating the potential of networked algorithms. The goal is to teach onboard sensors to process images and determine an asteroid’s shape and features — information needed to autonomously navigate in and around an asteroid and make decisions on where to safely acquire samples.

Credits: NASA/University of Arizona

Bill Cutlip, a Goddard senior business development manager and team member, said, “The point is to cut the computational umbilical cord back to Earth. What we’re trying to do is train an algorithm to understand what it’s seeing, mimicking how the human brain processes information.”

“Such a capability not only would benefit future missions to asteroids, but also those to Mars and the icy moons of Jupiter and Saturn. With advances in field-programmable gate arrays, or circuits that can be programmed to perform a specific task, and graphics-processing units, the potential is staggering.”