Autonomous vehicles largely depend on light-based image sensors, which often struggle to see through blinding conditions such as heavy rain and fog. Now, MIT scientists have developed a sub-terahertz-radiation receiving system that could give autonomous vehicles “electric eyesight” in those conditions.

Sub-terahertz wavelengths can be detected through fog and dust clouds with ease. A sub-terahertz imaging system works by sending an initial signal through a transmitter; a receiver then measures the absorption and reflection of the rebounding sub-terahertz wavelengths. The receiver sends a signal to a processor that recreates an image of the object.

However, implementing sub-terahertz sensors in driverless vehicles is challenging. Sensitive, accurate object recognition requires a strong output baseband signal from the receiver to the processor. In a new study, scientists describe a two-dimensional, sub-terahertz receiving array on a chip that’s orders of magnitude more sensitive, meaning it can better capture and interpret sub-terahertz wavelengths in the presence of a lot of signal noise.

To accomplish this, they implemented a scheme of independent signal-mixing pixels, called “heterodyne detectors,” that are typically difficult to integrate into chips. The researchers drastically shrank the size of the heterodyne detectors so that many of them can fit on a chip. The trick was to create a compact, multipurpose component that can simultaneously down-mix input signals, synchronize the pixel array, and produce strong output baseband signals.

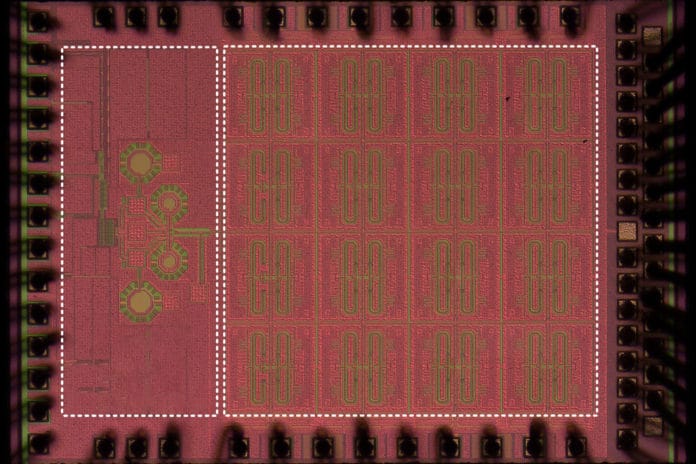

The researchers built a prototype, which has a 32-pixel array integrated on a 1.2-square-millimeter device. The pixels are many times more sensitive than the pixels in today’s best on-chip sub-terahertz array sensors. With a little more development, the chip could potentially be used in driverless vehicles and autonomous robots.

The key to the design is what the researchers call “decentralization.” In this design, a single pixel, called a “heterodyne” pixel, generates the frequency beat (the frequency difference between two incoming sub-terahertz signals) and the “local oscillation,” an electrical signal that changes the frequency of an input signal. This “down-mixing” process produces a signal in the megahertz range that can be easily interpreted by a baseband processor.
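
The down-mixing idea can be illustrated with a toy simulation. The sketch below is not the researchers' circuit: it assumes an ideal multiplying mixer, uses audio-range tones (1,050 Hz and 950 Hz) in place of sub-terahertz signals so that it runs quickly, and applies a simple moving average as the low-pass filter. All function names and parameter values are hypothetical and chosen for illustration only.

```python
import math


def down_mix(f_in_hz, f_lo_hz, sample_rate_hz, num_samples):
    """Ideal mixer: multiply an input tone by a local-oscillator tone.

    The product contains the difference (beat) frequency and the sum
    frequency, because cos(a)cos(b) = 0.5*[cos(a-b) + cos(a+b)].
    """
    return [
        math.cos(2 * math.pi * f_in_hz * k / sample_rate_hz)
        * math.cos(2 * math.pi * f_lo_hz * k / sample_rate_hz)
        for k in range(num_samples)
    ]


def moving_average(samples, window):
    """Crude low-pass filter: running mean over `window` samples."""
    acc = sum(samples[:window])
    out = [acc / window]
    for k in range(window, len(samples)):
        acc += samples[k] - samples[k - window]
        out.append(acc / window)
    return out


def estimate_frequency(samples, sample_rate_hz):
    """Estimate a tone's frequency from its zero-crossing count."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    duration_s = (len(samples) - 1) / sample_rate_hz
    return crossings / (2 * duration_s)


# Toy values (assumptions): scaled-down stand-ins for the real signals.
SAMPLE_RATE_HZ = 100_000
F_IN_HZ = 1_050   # stand-in for the incoming signal
F_LO_HZ = 950     # stand-in for the local oscillation

mixed = down_mix(F_IN_HZ, F_LO_HZ, SAMPLE_RATE_HZ, num_samples=20_000)
# A 50-sample window spans one full period of the 2,000 Hz sum term,
# which averages it out and leaves the 100 Hz beat.
baseband = moving_average(mixed, window=50)
beat_hz = estimate_frequency(baseband, SAMPLE_RATE_HZ)
print(f"Estimated beat frequency: {beat_hz:.1f} Hz")
```

The estimate lands close to the 100 Hz difference between the two tones. The same principle lets a heterodyne pixel turn a very high input frequency into a much lower one that a baseband processor can handle.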

The output signal can be used to calculate the distance of objects, similar to how LiDAR calculates the time it takes a laser to hit an object and rebound. In addition, combining the output signals of an array of pixels, and steering the pixels in a certain direction, can enable high-resolution images of a scene. This allows for not only the detection but also the recognition of objects, which is critical in autonomous vehicles and robots.
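
The LiDAR comparison rests on the standard time-of-flight relation: a signal travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The article does not say how the chip itself derives distance from its output, so the following is only a minimal sketch of that generic relation, with hypothetical function names.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s):
    """Distance to an object given the signal's round-trip time.

    The signal covers the distance twice (out and back), hence the
    division by two.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m):
    """Inverse relation: round-trip time for an object at `distance_m`."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Example: an echo that returns after 200 nanoseconds.
distance_m = distance_from_round_trip(200e-9)
print(f"Object distance: {distance_m:.2f} m")
```

A 200-nanosecond round trip corresponds to an object roughly 30 meters away, which shows how fine the timing resolution must be for ranging at driving distances.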

Co-author Ruonan Han, an associate professor of electrical engineering and computer science, said, “A big motivation for this work is having better ‘electric eyes’ for autonomous vehicles and drones. Our low-cost, on-chip sub-terahertz sensors will play a complementary role to LiDAR for when the environment is rough.”

The paper was published online on Feb. 8 in the IEEE Journal of Solid-State Circuits.