MIT scientists are studying the development of intelligence at the Center for Brains, Minds, and Machines, a multi-university project that aims to explain and replicate human intelligence.

By building computer systems that begin to approximate human perceptual abilities, the scientists believe they can help answer questions about what information-processing resources people use at which stages of development. Along the way, the researchers may also generate insights useful for robotic vision systems.

To build these systems, the scientists examine the fundamental cognitive abilities that an intelligent agent needs to navigate the world: perceiving distinct objects and inferring how they respond to physical forces.

Josh Tenenbaum, a professor of brain and cognitive sciences at MIT, said, “The common theme here is really learning to perceive physics. That starts with seeing the full 3-D shapes of objects, and multiple objects in a scene, along with their physical properties, like mass and friction, then reasoning about how these objects will move over time. Jiajun’s four papers address this whole space. Taken together, we’re starting to be able to build machines that capture more and more of people’s basic understanding of the physical world.”

Tenenbaum and one of his students, Jiajun Wu, are co-authors on four papers presented at this year’s Conference on Neural Information Processing Systems. The first three papers deal with inferring information about the physical structure of objects from both visual and aural data. The fourth deals with predicting how objects will behave on the basis of that data.

Something else that unites all four papers is their unusual approach to machine learning, a technique in which computers learn to perform computational tasks by analyzing huge sets of training data.

In a typical machine-learning system, the training data are labeled: human analysts will have identified the objects in a visual scene, say, or transcribed the words of a spoken sentence. The system attempts to learn which features of the data correlate with which labels, and it is judged on how well it labels previously unseen data.

In Wu and Tenenbaum’s papers, by contrast, the system is trained to infer a physical model of the world, such as the 3-D shapes of objects that are mostly hidden from view. It then works backward, using the model to resynthesize the input data, and its performance is judged on how well the reconstructed data match the original data.
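The idea of judging a system by how well it re-creates its own input can be illustrated with a deliberately tiny, hypothetical example. It is not the researchers’ neural-network model: the “renderer” below just draws a filled disk, and inference is a brute-force search over candidate radii. What it shares with the approach described above is that no label is ever consulted; the only signal is the mismatch between the re-rendered image and the observed one. The names `render`, `reconstruction_error`, and `infer_radius` are illustrative.

```python
def render(radius, size=16):
    """Hypothetical renderer: binary silhouette of a disk of the given radius,
    centered in a size x size image (1 = inside the disk, 0 = background)."""
    c = (size - 1) / 2
    return [[1 if (x - c) ** 2 + (y - c) ** 2 <= radius ** 2 else 0
             for x in range(size)] for y in range(size)]


def reconstruction_error(a, b):
    """Number of pixels on which two binary images disagree."""
    return sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))


def infer_radius(observed, candidates):
    """Analysis by synthesis: choose the latent radius whose re-rendered image
    best matches the observation. No ground-truth label is used."""
    return min(candidates, key=lambda r: reconstruction_error(render(r), observed))


observed = render(5.0)                      # stands in for an input image
candidates = [r / 2 for r in range(1, 17)]  # 0.5, 1.0, ..., 8.0
print(infer_radius(observed, candidates))   # recovers 5.0
```

A real system replaces the exhaustive search with a trained network that predicts the hidden model directly, but the scoring idea, comparing a resynthesis against the input, is the same.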

For inferring 3-D shape from images, the system draws on the influential theories of the MIT neuroscientist David Marr, who died in 1980 at the tragically young age of 35. Marr hypothesized that in interpreting a visual scene, the brain first creates what he called a 2.5-D sketch of the objects it contains: a representation of just those surfaces of the objects facing the viewer. Following Marr, the system first estimates such a 2.5-D sketch from an image and then infers the object’s full 3-D shape from that sketch.

Wu and his colleagues’ system needs to be trained on data that include both visual images and 3-D models of the objects the images depict. Constructing accurate 3-D models of the objects depicted in real photographs would be prohibitively time-consuming, so the researchers initially train their system on synthetic data, in which the visual image is generated from the 3-D model rather than the other way around. The process of creating the data resembles that of creating a computer-animated film.

Once the system has been trained on synthetic data, however, it can be fine-tuned using real data. That is because its ultimate performance criterion is the accuracy with which it reconstructs the input data. It still builds 3-D models, but those models don’t need to be compared with human-constructed models for performance assessment.

To evaluate their system, the researchers used intersection over union, a measure common in the field, and on that measure the system outperforms its predecessors. However, a given intersection-over-union score leaves a lot of room for local variation in the smoothness and shape of a 3-D model. So the researchers also conducted a qualitative study of the models’ fidelity to the source images. Of the study’s participants, 74 percent preferred the new system’s reconstructions to those of its predecessors.
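Intersection over union is commonly computed on voxelized shapes: the number of voxels occupied by both the predicted and the reference shape, divided by the number occupied by either. A minimal sketch, assuming each shape is given as a set of integer voxel coordinates (the function name `voxel_iou` is illustrative):

```python
def voxel_iou(pred, target):
    """Intersection over union of two shapes given as sets of occupied voxel coordinates."""
    pred, target = set(pred), set(target)
    union = pred | target
    if not union:
        return 1.0  # convention: two empty shapes count as a perfect match
    return len(pred & target) / len(union)


# A 2x2x2 cube (8 voxels) and the same cube shifted one voxel along x.
cube = {(x, y, z) for x in range(2) for y in range(2) for z in range(2)}
shifted = {(x + 1, y, z) for (x, y, z) in cube}
print(voxel_iou(cube, shifted))  # 4 shared voxels / 12 in the union
```

Because the score only counts shared voxels, two reconstructions with quite different surface detail can earn the same value, which is the gap the study with human participants was meant to cover.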

In another paper, the researchers train a system to analyze audio recordings of an object being dropped, to infer properties such as the object’s shape, its composition, and the height from which it fell. Again, the system is trained to produce an abstract representation of the object, which, in turn, it uses to synthesize the sound the object would make when dropped from a particular height. The system’s performance is judged on the similarity between the synthesized sound and the source sound.
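The same synthesize-and-compare loop can be sketched for sound. The model below is a made-up stand-in, not the researchers’ audio model: it treats an impact as a single decaying sine wave whose pitch stands in for the object’s shape and material, and, purely as an assumption, makes its loudness proportional to the impact speed sqrt(2gh) of an object falling freely from height h. Inference picks the candidate pitch and height whose synthesized sound best matches the recording. The sample rate, decay constant, and function names are all hypothetical.

```python
import math

G = 9.81     # gravitational acceleration, m/s^2
RATE = 8000  # samples per second (assumed for this toy)


def synth_impact(freq_hz, height_m, n=800, decay=30.0):
    """Hypothetical impact sound: a damped sinusoid whose amplitude is assumed
    proportional to the free-fall impact speed sqrt(2 * g * h)."""
    amp = math.sqrt(2 * G * height_m)
    return [amp * math.exp(-decay * t / RATE) * math.sin(2 * math.pi * freq_hz * t / RATE)
            for t in range(n)]


def mismatch(a, b):
    """Sum of squared sample differences between two sounds."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def infer(recording, freqs, heights):
    """Grid search for the (frequency, height) pair whose synthesized sound
    best matches the recording."""
    pairs = ((f, h) for f in freqs for h in heights)
    return min(pairs, key=lambda p: mismatch(synth_impact(*p), recording))


recording = synth_impact(440.0, 1.5)  # stands in for a real recording
print(infer(recording, [220.0, 440.0, 880.0], [0.5, 1.0, 1.5, 2.0]))
```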

The fourth paper’s system begins to model humans’ intuitive understanding of the physical forces acting on objects in the world. The paper picks up where the previous papers leave off: it assumes that the system has already deduced objects’ 3-D shapes.

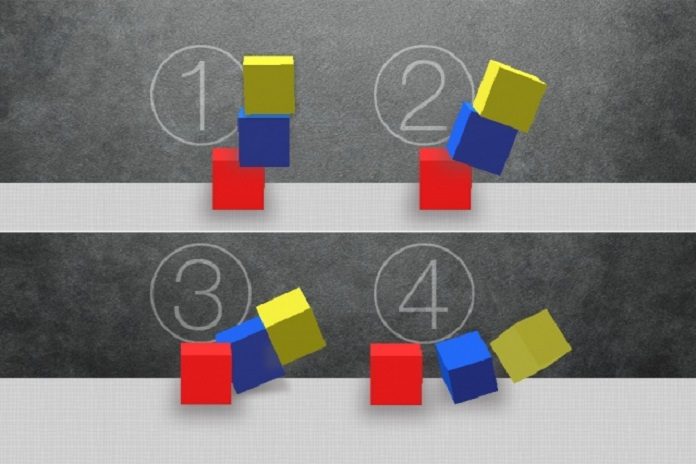

Those shapes are simple: balls and cubes. The researchers trained their system to perform two tasks. The first is to estimate the velocities of balls traveling on a billiard table and, on that basis, to predict how they will behave after a collision. The second is to analyze a static image of stacked cubes and determine whether they will fall and, if so, where the cubes will land.
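For the stacked-cube task, a hand-written rule gives a feel for what “will it fall?” means, though it is a swapped-in textbook statics check rather than the researchers’ learned system: a stack of identical cubes stays up if, at every level, the combined center of mass of all the cubes above sits over the cube below. The sketch assumes equal masses, unit-width cubes, and a single horizontal dimension, and it does not predict where falling cubes land. The function name `tower_is_stable` is illustrative.

```python
def tower_is_stable(xs, width=1.0):
    """xs[i] is the horizontal center of cube i, listed bottom (i = 0) to top.
    Assumes identical cubes of equal mass. The stack is stable if, for every
    cube, the center of mass of all cubes above it lies within that cube's top face."""
    for i in range(len(xs) - 1):
        above = xs[i + 1:]
        com = sum(above) / len(above)  # center of mass of the sub-stack above cube i
        if abs(com - xs[i]) > width / 2:
            return False  # the sub-stack overhangs cube i and will topple
    return True


print(tower_is_stable([0.0, 0.3, 0.6]))  # each level's load stays over its support
print(tower_is_stable([0.0, 0.4, 0.8]))  # top two cubes overhang the bottom one
```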

The system first learns to describe the input data as a structured description of the scene and the objects in it. It then feeds that description to something called a physics engine, which models the physical forces acting on the represented objects. Physics engines are a staple of both computer animation, where they generate the movement of clothing, falling objects, and the like, and scientific computing, where they are used for large-scale physical simulations.
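At its core, a physics engine repeatedly advances object positions by a small time step and resolves collisions. The toy one-dimensional engine below is a sketch, not the engine the researchers used: ball masses and radii are assumed values, and collisions are treated as perfectly elastic, so momentum and kinetic energy are both conserved. Real engines add 2-D or 3-D geometry, friction, spin, and far more careful collision detection. `Ball`, `collide`, `step`, and `simulate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    x: float         # position along the table, m
    v: float         # velocity, m/s
    m: float = 0.17  # mass, kg (assumed)
    r: float = 0.028 # radius, m (assumed)


def collide(a, b):
    """Perfectly elastic 1-D collision: conserves momentum and kinetic energy."""
    total = a.m + b.m
    va = ((a.m - b.m) * a.v + 2 * b.m * b.v) / total
    vb = ((b.m - a.m) * b.v + 2 * a.m * a.v) / total
    a.v, b.v = va, vb


def step(balls, dt):
    """Advance two balls by one time step, then resolve a collision if needed."""
    for ball in balls:
        ball.x += ball.v * dt
    a, b = balls  # this toy handles exactly two balls
    touching = abs(a.x - b.x) <= a.r + b.r
    approaching = (b.x - a.x) * (a.v - b.v) > 0
    if touching and approaching:
        collide(a, b)


def simulate(balls, dt=0.001, steps=1000):
    for _ in range(steps):
        step(balls, dt)
    return balls


# Cue ball at 1 m/s heading toward a stationary object ball 0.5 m away.
cue, target = simulate([Ball(0.0, 1.0), Ball(0.5, 0.0)])
print(cue.v, target.v)
```

With equal masses, an elastic head-on collision simply swaps the velocities, so after one simulated second the cue ball has stopped and the object ball is moving at 1 m/s.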

In tests, the researchers’ system again outperformed its predecessors. In fact, in some of the tests involving billiard balls, it frequently outperformed human observers as well.