Scientists at the University of California, San Diego and the University of Toronto have developed a computer system that detects how much pain you are actually feeling. It examines tiny facial expressions and calibrates its readings to each person.

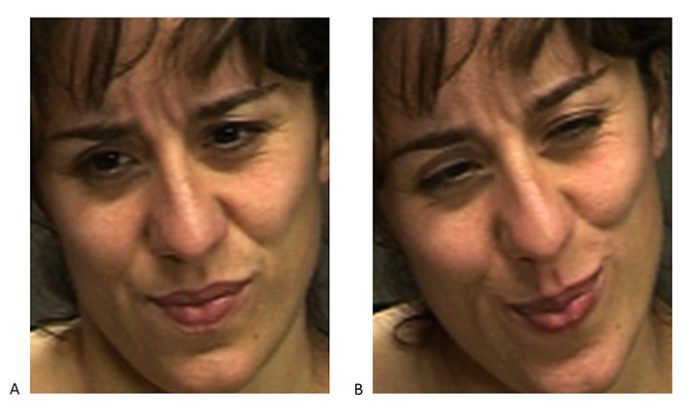

If you think you can fool this system by putting on a false expression, you are wrong. The system, called ‘DeepFaceLift’, managed to detect distinctive dynamic features of facial expressions that people missed.

Jeffrey Cohn at the University of Pittsburgh said, “These metrics might be useful in determining real pain from faked pain. The system could make the difference between prescribing potentially addictive painkillers and catching out a faker.”

Painkillers are highly addictive and potentially dangerous. If doctors have a reliable way to determine which patients truly need the medications, they can worry less about the knotty problem of determining who’s lying and who’s being honest about their pain.

Measuring pain levels is a tricky task. Scientists trained the algorithm on videos of people wincing and grimacing in pain. Each video showed a person with shoulder pain who had been asked to perform a different movement and then rate their pain level. The result was an algorithm that can use subtle differences in facial expressions to estimate how much pain a person is in.

Dianbo Liu, who created the system with his colleagues, said, “Certain parts of the face are particularly revealing. Large amounts of movement around the nose and mouth tended to suggest higher self-reported pain scores.”
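To make the idea concrete, here is a minimal sketch of how a model could learn the link Liu describes between movement around the nose and mouth and self-reported pain. It is only an illustration, not the researchers' method: the single `movement` feature, the 0 to 10 pain scale, and every number in the data are made-up assumptions, and a plain least-squares line stands in for whatever the real system uses.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def predict_pain(movement, slope, intercept):
    """Estimate a pain score, clamped to an assumed 0-10 scale."""
    return max(0.0, min(10.0, slope * movement + intercept))


# Synthetic, invented data: one hypothetical "nose and mouth movement"
# value per video, paired with the pain score the person reported.
movement = [0.1, 0.2, 0.4, 0.5, 0.7, 0.9]
reported = [1.0, 2.0, 3.5, 5.0, 6.5, 9.0]

slope, intercept = fit_line(movement, reported)
print(predict_pain(0.8, slope, intercept))
```

With this toy data the fitted slope is positive, so more movement yields a higher estimated score. A real system would draw on many facial features tracked over time rather than a single number.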

After testing the computer, scientists found that the system could weed out fakers 85% of the time. For greater accuracy, the system can be tweaked to take into account someone’s age, gender and skin complexion. Of these, age usually has the most impact on how a person expresses pain.
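One simple way to picture this kind of tweaking is a per-group correction: measure how far a generic model's estimates fall from what people in each group actually reported, then shift new estimates by that average. The sketch below is an assumption about how such an adjustment could work, not a description of the actual system; the group labels, function names, and scores are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def group_offsets(records):
    """Average (reported - predicted) error for each group."""
    errors = defaultdict(list)
    for group, predicted, reported in records:
        errors[group].append(reported - predicted)
    return {group: mean(errs) for group, errs in errors.items()}


def personalize(predicted, group, offsets):
    """Shift a generic estimate by the group's average error (0 if unseen)."""
    return predicted + offsets.get(group, 0.0)


# Invented records: (hypothetical age group, generic estimate, reported score).
records = [
    ("under_40", 4.0, 5.0),
    ("under_40", 6.0, 7.0),
    ("over_40", 5.0, 4.0),
    ("over_40", 7.0, 6.5),
]

offsets = group_offsets(records)
print(personalize(5.0, "under_40", offsets))
```

In this toy data the generic model underestimates pain for one group and overestimates it for the other, so the same raw estimate is nudged in opposite directions depending on who is being assessed.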

Currently, the system is just a prototype. Further work will explore whether over-regularity is a general feature of fake expressions.

Marian Bartlett said, “As with causes of pain, these scenarios also generate strong emotions, along with attempts to minimize, mask, and fake such emotions, which may involve ‘dual control’ of the face. In addition, our computer-vision system can be applied to detect states in which the human face may provide important clues as to health, physiology, emotion, or thought, such as drivers’ expressions of sleepiness, students’ expressions of attention and comprehension of lectures, or responses to treatment of affective disorders.”

“By revealing the dynamics of facial action through machine vision systems, our approach has the potential to elucidate ‘behavioral fingerprints’ of the neural-control systems involved in emotional signaling.”