What if there were an invisible button between your thumb and index finger that you could press by tapping your fingers together? Imagine a Virtual Dial you turn by rubbing your thumb against your index finger. Imagine grabbing and pulling a Virtual Slider in thin air. Doesn’t it sound amazing? Google is developing exactly these kinds of interactions: Google ATAP is working on a new sensing technology called Project Soli.

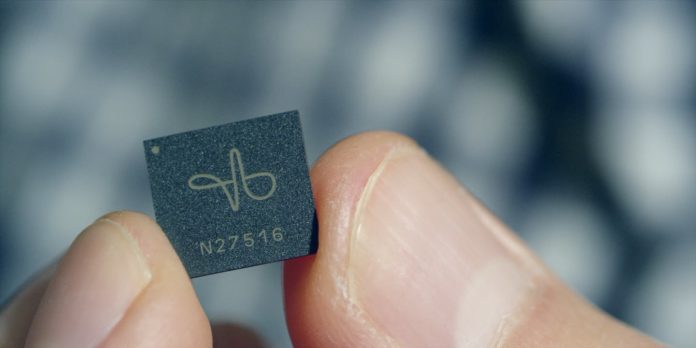

Project Soli is a new sensor technology that uses a miniature radar to detect touchless gesture interactions. It can track sub-millimeter motion at high speed with great accuracy.

Developers said, “We’re creating a ubiquitous gesture interaction language that will allow people to control devices with a simple, universal set of gestures. In this technology, the human hand becomes a universal input device for interacting with technology.”

Virtual Tools, gestures that mimic familiar interactions with physical tools, are the main concept behind Project Soli. The developers believe this metaphor makes the interactions easier to communicate, learn, and remember.

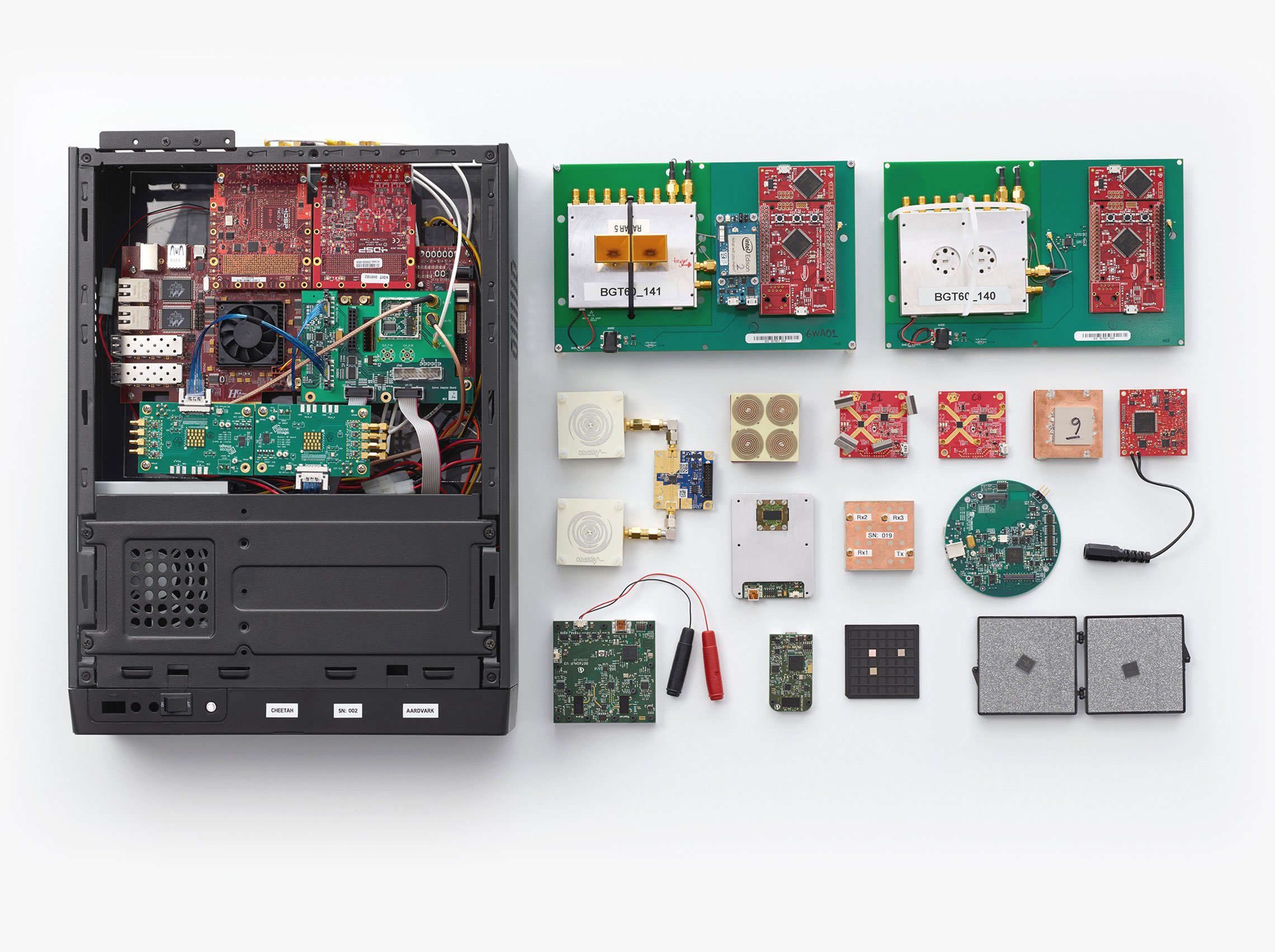

Project Soli’s software consists of a generalized gesture recognition pipeline. This pipeline is hardware agnostic, so it can work with different types of radar. It implements several stages of signal abstraction, from raw radar data through signal transformations and core and abstract machine learning features, before the final stage interprets gesture controls.
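To make the idea of staged signal abstraction concrete, here is a minimal sketch of a hardware-agnostic pipeline. It is not the Soli SDK: every name in it (`run_pipeline`, `signal_energy`, `detect_motion`, `to_label`) and the threshold value are hypothetical, chosen only for illustration. The point is that the pipeline accepts frames from any source and passes each one through successive stages, from raw samples to a gesture label.

```python
from typing import Callable, Iterable, Iterator, Sequence

# Hypothetical types for illustration only; not part of any real Soli API.
Frame = Sequence[float]             # one frame of raw radar samples
Stage = Callable[[object], object]  # each stage maps one abstraction to the next


def run_pipeline(frames: Iterable[Frame], stages: Sequence[Stage]) -> Iterator[object]:
    """Feed every frame through the stages in order.

    The pipeline only needs an iterable of frames, so any radar front end
    that can produce frames could be plugged in (the hardware-agnostic part).
    """
    for frame in frames:
        value: object = frame
        for stage in stages:
            value = stage(value)
        yield value


def signal_energy(frame: Frame) -> float:
    """Stage 1 (signal transformation): sum of squared samples."""
    return sum(x * x for x in frame)


def detect_motion(energy: float, threshold: float = 1.0) -> bool:
    """Stage 2 (detection): assumed fixed threshold, purely illustrative."""
    return energy > threshold


def to_label(moving: bool) -> str:
    """Stage 3 (interpretation): map the detection to a UI-level label."""
    return "gesture" if moving else "idle"


if __name__ == "__main__":
    fake_frames = [[0.1, 0.2], [1.0, 1.5]]  # stand-in for real radar data
    print(list(run_pipeline(fake_frames, [signal_energy, detect_motion, to_label])))
```

A real system would replace the threshold stage with learned features and classifiers, but the structure, a chain of independent stages fed by an interchangeable source, stays the same.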

The Soli SDK enables developers to easily access and build upon this gesture recognition pipeline. Its libraries extract real-time signals from the radar hardware and output signal transformations.

Developers noted, “This new sensing technology is a fully integrated, low-power radar operating in the 60-GHz ISM band. Additionally, it can reduce the complexity of radar system design and power consumption compared to our initial prototypes.”

“We have developed two modulation architectures: a Frequency Modulated Continuous Wave (FMCW) radar and a Direct-Sequence Spread Spectrum (DSSS) radar. Both integrate the entire radar system into the package, including multiple beamforming antennas that enable 3D tracking and imaging with no moving parts,” they added.
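As background on the FMCW architecture mentioned above: in a textbook FMCW radar, the transmit frequency sweeps linearly over a bandwidth B during a chirp of duration T, and a target at range R produces a beat frequency of 2RB/(cT) between the transmitted and received signals. The sketch below applies these standard relations. It is not Soli's implementation, and the 7 GHz bandwidth and 1 ms chirp duration are illustrative assumptions, not published Soli parameters.

```python
C = 299_792_458.0  # speed of light in m/s


def beat_frequency(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Beat frequency produced by a target at the given range (linear chirp)."""
    return 2.0 * range_m * bandwidth_hz / (C * chirp_s)


def range_from_beat(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Invert the relation above to recover range from a measured beat frequency."""
    return C * beat_hz * chirp_s / (2.0 * bandwidth_hz)


def range_resolution(bandwidth_hz: float) -> float:
    """Smallest range separation two targets need to be told apart: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    bandwidth = 7e9  # assumed sweep bandwidth in Hz (illustrative)
    chirp = 1e-3     # assumed chirp duration in seconds (illustrative)
    f_b = beat_frequency(0.30, bandwidth, chirp)
    print(f"beat frequency for a hand at 30 cm: {f_b:.1f} Hz")
    print(f"recovered range: {range_from_beat(f_b, bandwidth, chirp):.3f} m")
    print(f"range resolution: {range_resolution(bandwidth) * 100:.2f} cm")
```

Even with several gigahertz of bandwidth, the range resolution is on the order of centimeters, which is far coarser than individual finger movements.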

How Project Soli works:

The Soli sensor works by emitting electromagnetic waves in a broad beam. Objects within the beam scatter this energy, reflecting some portion of it back towards the radar antenna.

The sensor detects and tracks dynamic gestures expressed by fine motions of the fingers and hand. Unlike traditional radar sensors, it does not need large bandwidth or high spatial resolution.

Instead, its fundamental sensing principle relies on motion resolution: it extracts subtle changes in the received signal over time.

By processing these temporal signal variations, it can distinguish complex finger movements and deforming hand shapes within its field.
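One standard way radar turns small changes over time into fine motion estimates is through the phase of the echo: a reflector that moves by a distance d shifts the round-trip phase by 4πd/λ. The sketch below works through that relation for a 60 GHz carrier, where the wavelength is about 5 mm. It is a simplified single-reflector model under an assumed sign convention, not a description of Soli's actual signal processing, and the helper names are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength of the carrier."""
    return C / freq_hz


def echo(range_m: float, freq_hz: float) -> complex:
    """Idealized unit-amplitude echo from one reflector (assumed model).

    The round trip covers 2 * range, so the phase is -4*pi*range / wavelength.
    """
    return cmath.exp(-1j * 4.0 * math.pi * range_m / wavelength(freq_hz))


def displacement(prev: complex, curr: complex, freq_hz: float) -> float:
    """Estimate how far the reflector moved between two echo samples.

    Positive means it moved away from the radar. Valid only while the motion
    between samples is under a quarter wavelength (no phase wrap).
    """
    dphi = cmath.phase(curr * prev.conjugate())
    return -dphi * wavelength(freq_hz) / (4.0 * math.pi)


if __name__ == "__main__":
    f = 60e9  # carrier in the 60 GHz band
    before = echo(0.1000, f)
    after = echo(0.1002, f)  # fingertip moved 0.2 mm away
    print(f"wavelength: {wavelength(f) * 1e3:.3f} mm")
    print(f"estimated motion: {displacement(before, after, f) * 1e3:.4f} mm")
```

In this model a 0.2 mm movement changes the phase by roughly half a radian, which suggests why sub-millimeter motion can be measurable even when spatial resolution is coarse.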

According to the researchers, Project Soli has the potential to be integrated into wearables, phones, computers, cars, and IoT devices in our environment.